| Issue | A&A, Volume 705, January 2026 |
|---|---|
| Article Number | A68 |
| Number of page(s) | 9 |
| Section | Astronomical instrumentation |
| DOI | https://doi.org/10.1051/0004-6361/202556107 |
| Published online | 06 January 2026 |

Deep-learning-based prediction of precipitable water vapor in the Chajnantor area

1 Facultad de Ingeniería, Universidad Católica de la Santísima Concepción, Alonso de Ribera 2850, Concepción, Chile

2 Departamento Ingeniería Informática y Ciencias de la Computación, Universidad de Concepción, Concepción 4070409, Chile

3 Facultad de Ingeniería, Universidad del Bío-Bío, Collao 1202, Concepción, Chile

4 Departamento de Electrónica e Informática, Universidad Técnica Federico Santa María - Sede Concepción, Arteaga Alemparte 943, Concepción, Chile

5 Departamento de Ingeniería Eléctrica, Universidad Católica de la Santísima Concepción, Alonso de Ribera 2850, Concepción, Chile

6 Centro de Energía, Universidad Católica de la Santísima Concepción, Alonso de Ribera 2850, Concepción, Chile

7 National Astronomical Observatories, Chinese Academy of Sciences, Beijing 100101, China

8 Nanjing Institute of Astronomical Optics & Technology, Chinese Academy of Science, Nanjing 210042, China

9 CAS Key Laboratory of Astronomical Optics & Technology, Nanjing Institute of Astronomical Optics & Technology, Nanjing 210042, China

10 Chinese Academy of Sciences South America Center for Astronomy, National Astronomical Observatories, CAS, Beijing 100101, China

11 Instituto de Astronomía, Universidad Católica del Norte, Av. Angamos 0610, Antofagasta, Chile

12 CePIA, Departamento de Astronomía, Universidad de Concepción, Casilla 160 C, Concepción, Chile

★ Corresponding author

Received: 25 June 2025 / Accepted: 22 November 2025

Context. Astronomical observations at millimeter and submillimeter wavelengths strongly depend on the amount of precipitable water vapor (PWV) in the atmosphere, which directly affects the sky transparency and decreases the signal-to-noise ratio of the signals received by radio telescopes.

Aims. Predictions of PWV at different forecasting horizons are crucial for supporting telescope operations, engineering planning, scheduling, and observational efficiency at the radio observatories in the Chajnantor area in northern Chile.

Methods. We developed and validated a long short-term memory (LSTM) deep-learning-based model to predict PWV at forecasting horizons of 12, 24, 36, and 48 hours using historical data from two 183 GHz radiometers and a weather station in the Chajnantor area.

Results. We find that the LSTM method is able to predict PWV in the 12- and 24-hour forecasting horizons with a mean absolute percentage error of ~22% compared to ~36% for the traditional Global Forecast System method used by the Atacama Pathfinder Experiment, and the root mean square error is reduced by ~50%.

Conclusions. We present a first application of deep learning techniques for preliminary predictions of PWV in the Chajnantor area. The prediction performance shows significant improvements compared to traditional methods in 12- and 24-hour time windows. We also propose strategies to improve our method on shorter (<12 hour) and longer (>36 hour) forecasting timescales.

Key words: atmospheric effects / site testing

© The Authors 2026

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model.

1 Introduction

Astronomical observations at millimeter and submillimeter wavelengths heavily depend on atmospheric conditions, particularly the amount of precipitable water vapor (PWV). This parameter describes the thickness of the water column, measured in millimeters, that would result from collapsing all the water vapor contained in the atmospheric column along the observer’s line of sight at a specific geographical location. The presence of PWV directly affects sky transparency, decreasing the signal-to-noise ratio of the signals received by radio telescopes and increasing the integration time required to detect faint astronomical sources (Radford 2011). High levels of PWV can render scientific observations in certain bands impossible.
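To make this definition concrete: for a vertical (zenith) column, PWV equals the pressure integral of the specific humidity $q$ divided by the density of liquid water $\rho_w$ and gravity $g$, $\mathrm{PWV}=\frac{1}{\rho_w g}\int q\,\mathrm{d}p$. The Python sketch below evaluates this with the trapezoidal rule for a hypothetical humidity profile. The function name and inputs are illustrative only and are not part of this study's pipeline.

```python
import numpy as np

RHO_WATER = 1000.0  # density of liquid water, kg m^-3
G = 9.80665         # standard gravity, m s^-2


def pwv_from_profile(pressure_pa, specific_humidity):
    """Return zenith PWV in mm from specific humidity q (kg/kg) on pressure levels (Pa).

    Hypothetical helper: PWV = (1 / (rho_w * g)) * integral of q dp (trapezoidal rule).
    """
    p = np.asarray(pressure_pa, dtype=float)
    q = np.asarray(specific_humidity, dtype=float)
    order = np.argsort(p)  # integrate from the top of the column down to the surface
    p, q = p[order], q[order]
    q_dp = np.sum(0.5 * (q[1:] + q[:-1]) * np.diff(p))  # integral of q dp, in Pa
    return q_dp / (RHO_WATER * G) * 1000.0              # metres of liquid water -> mm


# A constant q = 1 g/kg between 500 and 1000 hPa gives about 5.1 mm.
print(round(pwv_from_profile([100000.0, 50000.0], [1e-3, 1e-3]), 2))
```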

The Chajnantor area, located in the Atacama Desert in northern Chile, is recognized as one of the best sites in the world for (sub)millimeter astronomical observations due to its high altitude (~5000 m) and extreme atmospheric dryness (Bustos et al. 2014; Radford & Peterson 2016). This location hosts cutting-edge observatories such as the Atacama Large Millimeter/submillimeter Array (ALMA), the Atacama Pathfinder EXperiment (APEX), the Cosmology Large Angular Scale Surveyor (CLASS), and the Simons Observatory, among many others. Predicting PWV enables engineers and astronomers to optimize operations and the use of valuable telescope time by scheduling observations at each frequency for the periods with the most favorable atmospheric conditions, thus maximizing scientific return (Cortés et al. 2020). Because PWV is highly variable at these altitudes, reliable predictive tools are required. In this context, accurate monitoring and forecasting of PWV are crucial for supporting telescope operations, engineering planning, and observational scheduling.

Traditionally, PWV has been estimated using, for example, radiometers operating at specific water vapor emission lines (e.g., 183 GHz), radiosondes, and global navigation satellite systems (GNSSs). Radiometers can provide continuous and direct measurements of PWV, including at remote high-altitude locations with extreme environmental conditions (Otárola et al. 2010, 2019; Valeria et al. 2024). Properly calibrated GNSS observations are a powerful technique for obtaining PWV with high accuracy by converting zenith tropospheric delays into water vapor content (Wu et al. 2021; Chen et al. 2021). Castro-Almazán et al. (2016) calibrated GNSS-based PWV estimates with radiosonde profiles to obtain the PWV at observatories in the Canary Islands. Recent advances in weighted mean temperature modeling have further improved GNSS retrievals, as demonstrated by Sleem et al. (2024), who developed a high-resolution empirical model based on ERA5, a state-of-the-art atmospheric reanalysis produced by the European Centre for Medium-Range Weather Forecasts (ECMWF). Li et al. (2024) also show that real-time single-station GNSS processing can deliver reliable PWV estimates, extending applications to coastal and offshore regions. A comprehensive review by Vaquero-Martínez & Antón (2021) summarizes three decades of GNSS meteorology, emphasizing its crucial role in PWV monitoring, numerical model validation, and climate analysis. While these methods provide valuable real-time measurements, they primarily address PWV monitoring rather than proactive forecasting.
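For the GNSS route mentioned above, the zenith wet delay (ZWD) is commonly converted to PWV through a dimensionless factor $\Pi$ that depends on the weighted mean temperature $T_m$: $\mathrm{PWV}=\Pi\cdot\mathrm{ZWD}$ with $\Pi = 10^6/[\rho_w R_v (k_3/T_m + k_2')]$. The sketch below uses widely quoted refractivity constants ($k_2' \approx 22.1$ K hPa$^{-1}$, $k_3 \approx 3.739\times10^5$ K$^2$ hPa$^{-1}$). These constants and the helper name are assumptions for illustration, not quantities used in this study.

```python
RHO_WATER = 1000.0  # density of liquid water, kg m^-3
R_V = 461.5         # specific gas constant of water vapour, J kg^-1 K^-1
K2_PRIME = 0.221    # K Pa^-1  (22.1 K hPa^-1), assumed literature value
K3 = 3739.0         # K^2 Pa^-1 (3.739e5 K^2 hPa^-1), assumed literature value


def pwv_from_zwd(zwd_mm, tm_kelvin):
    """Hypothetical helper: convert zenith wet delay (mm) to PWV (mm) as PWV = Pi * ZWD."""
    # rho_w * R_v has units of Pa K^-1, so the bracket below is dimensionless.
    pi_factor = 1.0e6 / (RHO_WATER * R_V * (K3 / tm_kelvin + K2_PRIME))
    return pi_factor * zwd_mm


# With Tm = 270 K, Pi is about 0.154, so 100 mm of ZWD maps to roughly 15.4 mm of PWV.
print(round(pwv_from_zwd(100.0, 270.0), 1))
```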

Forecasting PWV often relies on numerical weather prediction (NWP) models, such as the Global Forecast System (GFS). While NWP models provide broad geographical coverage, they typically lack the fine spatial and temporal resolution needed to capture the rapid, localized variations in atmospheric moisture at high-altitude observatory sites. This limitation significantly degrades the accuracy of short-term forecasts, which are critical for scheduling. PWV forecasts for the Chajnantor area by Marín et al. (2015), based on Geostationary Operational Environmental Satellite and GFS data, had errors of 33% in the 0.4–1.2 mm range compared with PWV observations. The GFS model is currently used at the APEX telescope (Güsten et al. 2006), where it provides 5-day forecasts at 6-hour resolution.

Previous studies have rigorously evaluated approaches based on both GNSSs and the Weather Research and Forecasting (WRF) model for PWV estimation and forecasting at major astronomical observatories. For instance, Pérez-Jordán et al. (2018) validated the WRF model for PWV forecasting at the Roque de los Muchachos Observatory. Similarly, Pozo et al. (2016) assessed the performance of the WRF model on the Chajnantor Plateau, finding that forecast errors exceed 1.5 mm in 10% of cases and do not exceed 0.5 mm 65% of the time.

Similar NWP-based forecasting approaches have also been successfully implemented at other major astronomical sites. For example, at the Very Large Telescope at Cerro Paranal and the Large Binocular Telescope at Mount Graham, the Meso-NH mesoscale atmospheric model improved on the ECMWF forecasts by a factor of 2 (Turchi et al. 2019). Likewise, the WRF model has been validated at the Observatorio del Roque de los Muchachos (Giordano et al. 2013) and at the APEX telescope (Pozo et al. 2016). These studies demonstrate the widespread adoption of NWP tools in the astronomical community for PWV prediction.

Global navigation satellite system data have also been increasingly assimilated into NWP systems. For example, Li et al. (2023) demonstrate that assimilating GNSS PWV measurements into the WRF model improved humidity field representation by up to 26% in Australia during heavy rainfall events. Similarly, Wu et al. (2021) report that GNSS assimilation across China significantly enhanced long-term PWV climatology and precipitation forecasts. These results underline the dual role of GNSSs both as an independent observation system and as a source of assimilation data for NWP.

In response to the inherent limitations of traditional techniques and the insufficient resolution of NWP models for site-specific forecasting, alternative and advanced data-driven approaches have emerged as powerful predictive tools. In particular, deep learning models have shown the ability to capture nonlinear dependences and temporal patterns in complex atmospheric datasets. Among these, long short-term memory (LSTM) networks stand out for their capacity to handle sequential data and forecast variables with high accuracy. Studies of recent applications in atmospheric science report that such models often outperform traditional statistical and physical methods when trained with local, high-resolution data (Xiao et al. 2022; Hou et al. 2023; Yan et al. 2024).

Together, these advances highlight the current diversity of methods: GNSSs provide accurate retrievals and assimilation capacity, NWP models such as WRF offer physically based forecasts, and deep learning approaches add the potential to capture nonlinear dependences in site-specific high-frequency datasets. The present study contributes by applying a deep learning framework to continuous 183 GHz radiometer data combined with meteorological records.

In this context, the objective of the present study is to develop and validate a preliminary deep-learning-based model to predict PWV at 12-, 24-, 36-, and 48-hour forecasting horizons using just over one year of 183 GHz radiometer and meteorological data for the Chajnantor area. In Section 2, we present the data and methodology. Section 3 describes the LSTM model. Section 4 presents the results and strategies to improve our method, and Section 5 presents our conclusions.

2 Data and methodology

The dataset employed in this study spans from July 13, 2023, to October 14, 2024 (460 days), covering one full annual cycle at the Chajnantor Plateau. It integrates measurements from two 183 GHz radiometers and a meteorological station.

2.1 183 GHz radiometer data

We used PWV data collected by two 183 GHz radiometers. One radiometer is located at the APEX telescope in Llano de Chajnantor at 23°00′20.8″ S, 67°45′33.0″ W and an altitude of 5105 m a.s.l. The second radiometer is operated by the Universidad Católica de la Santísima Concepción (UCSC) in collaboration with the Universidad de Concepción (UdeC) and is located at the CLASS telescope on Cerro Toco at 22°57′34.6″ S, 67°47′13.8″ W and 5200 m a.s.l. The horizontal distance between the two sites is approximately 5.9 km.
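The quoted separation follows from the listed coordinates. A short check with the haversine formula, assuming a spherical Earth of radius 6371 km (adequate at the ~0.1 km level over such a short baseline); the helper names are our own:

```python
import math


def dms_to_deg(deg, minutes, seconds, south_or_west=True):
    """Degrees-minutes-seconds to decimal degrees; south and west are negative."""
    value = deg + minutes / 60.0 + seconds / 3600.0
    return -value if south_or_west else value


def haversine_km(lat1, lon1, lat2, lon2, radius_km=6371.0):
    """Great-circle distance in km between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2.0 * radius_km * math.asin(math.sqrt(a))


apex = (dms_to_deg(23, 0, 20.8), dms_to_deg(67, 45, 33.0))
class_site = (dms_to_deg(22, 57, 34.6), dms_to_deg(67, 47, 13.8))
distance_km = haversine_km(*apex, *class_site)
print(f"{distance_km:.1f} km")  # 5.9 km
```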

Both instruments provide continuous data. PWV from APEX (PWV APEX) is recorded as 1-minute averages, while the UCSC radiometer (PWV UCSC) samples every 2 seconds and is averaged to 1-minute intervals, yielding approximately 662 400 records per variable. This configuration allows redundancy and cross-validation of the measurements, given the small altitude difference between the two sites (~100 m). To evaluate our model under different atmospheric conditions, PWV values were stratified into three categories: <1 mm (33.4% of the records), 1–2 mm (31.1%), and >2 mm (35.5%). We also studied the PWV range 0.4–1.2 mm to compare with current GFS predictions.

2.2 Meteorological data

The meteorological data were obtained from the automated weather station at the APEX telescope site. This station provides continuous monitoring of local atmospheric conditions such as relative humidity (RH) in percentage, temperature (Temp) in degrees Celsius, wind speed (WS) in meters per second, and wind direction (WD) in degrees. The raw data were collected at one-minute resolution, synchronized with the radiometric series.

These observations complement radiometer measurements by providing atmospheric context. Together, they enable an integrated analysis of PWV variability with local meteorological conditions, essential for both operational forecasting and scientific applications. Meteorological data, PWV measurements, and GFS forecast data from APEX are publicly available¹.

2.3 Data preprocessing

To ensure the reliability of the predictive model, a preprocessing workflow was applied to the raw dataset. The workflow involved several sequential steps applied to the initial 1-minute resolution data: (1) data cleaning and outlier removal; (2) linear interpolation to fill missing values; (3) temporal averaging to 3-hour intervals; (4) selection of input variables; (5) Fourier analysis; (6) wavelet-based denoising; and (7) z-score normalization of all features.

2.3.1 Data cleaning

Outliers were identified using the interquartile range method, which flags points lying more than 1.5 times the interquartile range (IQR = Q3 − Q1) below the first quartile (Q1) or above the third quartile (Q3). Samples carrying the instrumental flag value ±999 were also removed before the gaps were filled with time-aware linear interpolation.
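A minimal pandas sketch of this cleaning step, assuming each variable is a `pd.Series` on a 1-minute `DatetimeIndex`. The function name is hypothetical, and masking rejected samples as NaN (rather than dropping rows) is our choice, so that the interpolation step described in the next subsection can fill them.

```python
import pandas as pd

FLAG = 999.0  # instrumental flag value (appears as +999 or -999)


def clean_series(s: pd.Series, k: float = 1.5) -> pd.Series:
    """Return a copy of s with ±999 flags and IQR outliers replaced by NaN."""
    s = s.mask(s.abs() == FLAG)                  # remove flagged samples first
    q1, q3 = s.quantile(0.25), s.quantile(0.75)  # quartiles skip NaN values
    iqr = q3 - q1
    outlier = (s < q1 - k * iqr) | (s > q3 + k * iqr)
    return s.mask(outlier)


raw = pd.Series([1.0, 1.2, 0.9, 1.1, -999.0, 1.0, 50.0, 1.05])
print(clean_series(raw).isna().tolist())
# [False, False, False, False, True, False, True, False]
```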

2.3.2 Imputation of missing values

The dataset used in this study, composed of PWV UCSC, PWV APEX, Temp, RH, WS, and WD, contained missing data in several variables. PWV UCSC had 0.7% of missing values, while PWV APEX had 20.7%. Temp, RH, WS, and WD each had 8.1% missing entries. These gaps were distributed throughout the year and likely resulted from instrumental issues, such as sensor saturation, temporary outages, or data logging interruptions.

To ensure continuity in the time series, missing values were imputed using linear interpolation. This method was selected for its simplicity and its effectiveness in estimating short, isolated gaps (typically under 30 minutes) without introducing artificial trends or discontinuities.

Since the data were subsequently averaged into 3-hour intervals, the interpolation had minimal influence on the overall signal structure. This approach helped to preserve temporal consistency across variables and ensured that the LSTM model received complete, synchronized input sequences.
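In pandas, time-aware linear interpolation of interior gaps can be sketched as below, assuming a `DatetimeIndex`. `method="time"` weights by the actual time spacing between valid samples, and `limit_area="inside"` (our choice, not stated in the text) leaves leading and trailing gaps untouched instead of extrapolating.

```python
import numpy as np
import pandas as pd


def fill_gaps(df: pd.DataFrame) -> pd.DataFrame:
    """Linearly interpolate interior NaNs, weighting by elapsed time between samples."""
    return df.interpolate(method="time", limit_area="inside")


idx = pd.date_range("2024-01-01 00:00", periods=5, freq="min")
df = pd.DataFrame({"pwv": [np.nan, 1.0, np.nan, 3.0, 4.0]}, index=idx)
# The leading NaN is kept; the 00:02 gap is filled midway between 1.0 and 3.0.
print(fill_gaps(df)["pwv"].tolist())
```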

2.3.3 Correlation and input parameter selection

A Pearson correlation analysis was performed to guide the variable selection process, retaining only those variables with a non-negligible linear relationship to the target variable, PWV APEX. The Pearson correlation coefficient (r) is defined as

$$r=\frac{\sum_{i=1}^n\left(x_i-\bar{x}\right)\left(y_i-\bar{y}\right)}{\sqrt{\sum_{i=1}^n\left(x_i-\bar{x}\right)^2 \sum_{i=1}^n\left(y_i-\bar{y}\right)^2}}, \tag{1}$$

where $x_i$ and $y_i$ are the samples of each variable, $\bar{x}$ is the mean of the $x$ values, $\bar{y}$ is the mean of the $y$ values, and $n$ is the total number of observations. PWV APEX corresponds to $y$.

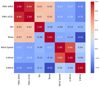

The analysis seen in Figure 1 revealed an expected strong positive correlation between PWV APEX and PWV UCSC (r = 0.94), and moderate correlations with RH (r = 0.45) and Temp (r = 0.43). In contrast, WS and the U and V wind components showed negligible correlations (all |r| < 0.20) and were excluded from the model due to their limited predictive value. As a result, the selected input variables are: (1) PWV APEX, (2) PWV UCSC, (3) Temp, and (4) RH.

|

Fig. 1 Correlation matrix for PWV APEX, PWV UCSC, RH, Temp, WS, and U- and V-wind components. Warmer colors are positive correlations, while cooler colors are negative correlations. |
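Equation (1) and the exclusion rule above can be sketched in NumPy as follows. The |r| ≥ 0.20 retention threshold is our reading of the "|r| < 0.20" exclusion criterion, and the helper names, variable keys, and synthetic data are hypothetical.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson correlation coefficient, Eq. (1)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx, dy = x - x.mean(), y - y.mean()
    return float(np.sum(dx * dy) / np.sqrt(np.sum(dx**2) * np.sum(dy**2)))


def select_features(columns, target, threshold=0.20):
    """Keep the target plus every variable whose |r| with the target reaches threshold."""
    y = columns[target]
    kept = [target]
    for name, values in columns.items():
        if name != target and abs(pearson_r(values, y)) >= threshold:
            kept.append(name)
    return kept


rng = np.random.default_rng(0)
pwv_apex = rng.gamma(2.0, 1.0, size=2000)  # synthetic stand-in for the target
data = {
    "pwv_apex": pwv_apex,
    "pwv_ucsc": pwv_apex + 0.3 * rng.normal(size=2000),  # strongly related
    "ws": rng.normal(size=2000),                           # unrelated
}
print(select_features(data, "pwv_apex"))  # ['pwv_apex', 'pwv_ucsc']
```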

2.3.4 Temporal averaging

To reduce sensitivity to high-frequency noise and short-term variability, all selected time series were averaged into 3-hour intervals, a resolution that facilitates the identification of broader atmospheric trends (12–48 h horizons) and aligns with operational forecasting models such as GFS. These four variables were resampled and synchronized on the same temporal basis.

After these preprocessing steps, all series are at 3-hour temporal resolution. For the radiometric data, PWV APEX ranged from 0.14 to 8.21 mm, with a mean of 2.00 mm and a median of 1.46 mm, while PWV UCSC ranged from 0.12 to 6.03 mm, with a mean of 1.72 mm and a median of 1.33 mm. For the meteorological data, RH ranged from 0.26% to 100.2%, with a mean of 30.2% and a median of 24.3%, while Temp ranged from −17.05 °C to 10.55 °C, with a mean of −1.65 °C and a median of −1.38 °C.
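A pandas sketch of the 3-hour averaging and of the summary statistics quoted above, assuming a 1-minute `DatetimeIndex`. Bins are labelled by their start time, which is the pandas default; the paper does not state its labelling convention, and the helper names are hypothetical.

```python
import numpy as np
import pandas as pd


def to_three_hourly(df: pd.DataFrame) -> pd.DataFrame:
    """Average 1-minute samples into 3-hour bins labelled by their start time."""
    return df.resample("3h").mean()


def summarize(df: pd.DataFrame) -> pd.DataFrame:
    """Minimum, maximum, mean, and median of each column (one row per variable)."""
    return df.agg(["min", "max", "mean", "median"]).T


idx = pd.date_range("2024-01-01", periods=6 * 60, freq="min")  # six hours of data
minute_data = pd.DataFrame({"pwv": np.arange(idx.size, dtype=float)}, index=idx)
three_hourly = to_three_hourly(minute_data)
print(three_hourly["pwv"].tolist())  # [89.5, 269.5]
print(summarize(three_hourly))
```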

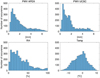

Figure 2 shows the distribution of the values. Each series contains 3672 records, and the histogram bin widths are 0.2 mm for PWV, 4% for RH, and 1 °C for Temp.

|

Fig. 2 Number of occurrences for each variable after data cleaning, imputation of missing values, and 3-hour averaging. |

|

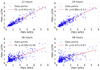

Fig. 3 Power spectral density plots from the FFT analysis of PWV APEX, PWV UCSC, RH, and Temp. The x-axis represents frequency in cycles per year. |

2.3.5 Fourier analysis

To better understand the dominant periodicities in PWV and the meteorological variables, we applied a fast Fourier transform (FFT). The resulting spectra (Figure 3) reveal clear peaks at frequencies corresponding to a yearly cycle (1 cycle per year), a daily cycle (365 cycles per year), and sub-daily harmonics, indicating that these signals contain predictable recurring patterns. These insights supported the decision to use sequential models such as LSTM, which are capable of capturing temporal dependences.
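The spectra can be reproduced in outline with NumPy's real FFT, converting frequencies to cycles per year for 3-hour sampling. This is an unnormalized periodogram without windowing; the normalization and any tapering used for Figure 3 are not stated, so treat them as assumptions. The helper name is hypothetical.

```python
import numpy as np

HOURS_PER_YEAR = 24 * 365.25


def power_spectrum(x, step_hours=3.0):
    """One-sided power spectrum |FFT|^2 of a regularly sampled, mean-removed series.

    Frequencies are returned in cycles per year, matching the x-axis of Fig. 3.
    """
    x = np.asarray(x, dtype=float)
    x = x - x.mean()  # remove the zero-frequency (mean) component
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(x.size, d=step_hours / HOURS_PER_YEAR)  # spacing in years
    return freqs, power


# Synthetic check: a pure daily cycle sampled every 3 h for 365 days.
t_hours = np.arange(8 * 365) * 3.0
freqs, power = power_spectrum(np.sin(2 * np.pi * t_hours / 24.0))
print(freqs[np.argmax(power)])  # close to 365.25 cycles per year
```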

2.3.6 Wavelet denoising filter

To improve the signal-to-noise ratio, we applied a wavelet-based denoising technique using the Symlet 4 (Sym4) wavelet function. This wavelet was selected after testing alternatives such as Daubechies 4 (db4), Coiflet 4 (coif4), and Haar, because it offered the best balance between noise reduction and preservation of key signal trends.

In this context, noise in the PWV data primarily stems from instrumental fluctuations and abrupt environmental changes, which introduce high-frequency variations that can obscure relevant temporal patterns. These distortions reduce the model’s ability to learn consistent relationships.

The wavelet filter effectively suppresses high-frequency noise while retaining low-frequency components crucial for trend analysis. As a result, the smoothed signals exhibit reduced short-term variability and improve model training stability and accuracy.
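A sketch of Sym4 wavelet denoising with the PyWavelets package. The paper does not state the decomposition level or the thresholding rule, so the universal threshold with soft shrinkage and the median-absolute-deviation noise estimate used here are assumptions (a common default), as is the helper name.

```python
import numpy as np
import pywt


def wavelet_denoise(x, wavelet="sym4", level=None):
    """Soft-threshold the detail coefficients with the universal threshold and reconstruct."""
    x = np.asarray(x, dtype=float)
    coeffs = pywt.wavedec(x, wavelet, level=level)  # [cA_n, cD_n, ..., cD_1]
    # Robust noise estimate from the finest-scale detail coefficients.
    sigma = np.median(np.abs(coeffs[-1])) / 0.6745
    threshold = sigma * np.sqrt(2.0 * np.log(x.size))
    # Keep the approximation; shrink every detail level.
    shrunk = [coeffs[0]] + [pywt.threshold(c, threshold, mode="soft") for c in coeffs[1:]]
    return pywt.waverec(shrunk, wavelet)[: x.size]  # waverec may return one extra sample


rng = np.random.default_rng(1)
t = np.linspace(0.0, 4.0 * np.pi, 1024)
clean = np.sin(t)
noisy = clean + 0.3 * rng.normal(size=t.size)
smoothed = wavelet_denoise(noisy)
print(np.sqrt(np.mean((noisy - clean) ** 2)), np.sqrt(np.mean((smoothed - clean) ** 2)))
```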

2.3.7 Data normalization

All selected input variables were normalized using z-score standardization (zero mean and unit variance). This step ensures that differences in magnitude among variables do not bias the model training process and allows the LSTM network to converge more efficiently. With all variables normalized and the input structure defined, the four selected datasets at 3-hour intervals were prepared for training and evaluation using the deep learning model.
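Standardization as described can be sketched as follows. Computing the mean and standard deviation on the training period only, and reusing them for the validation period and for mapping predictions back to millimeters, is our assumption of good practice; the paper does not state which subset the statistics come from. The helper names are hypothetical.

```python
import numpy as np


def fit_zscore(train):
    """Per-feature mean and standard deviation, computed on the training rows only."""
    train = np.asarray(train, dtype=float)
    return train.mean(axis=0), train.std(axis=0)


def apply_zscore(x, mean, std):
    """Standardize with previously fitted statistics: z = (x - mean) / std."""
    return (np.asarray(x, dtype=float) - mean) / std


def invert_zscore(z, mean, std):
    """Map standardized values (e.g. predicted PWV) back to physical units."""
    return np.asarray(z, dtype=float) * std + mean


train = np.array([[1.0, 10.0], [3.0, 30.0], [2.0, 20.0]])  # rows: time steps; columns: features
valid = np.array([[2.5, 25.0]])
mean, std = fit_zscore(train)
print(apply_zscore(valid, mean, std))
```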

3 Deep learning model: LSTM

In this study, a deep learning model based on recurrent neural networks, specifically long short-term memory (LSTM), was developed for the prediction of PWV. These networks are particularly suitable for sequential data analysis due to their ability to handle both short- and long-term temporal relationships. This is made possible by their memory-cell-based architecture, which mitigates limitations of traditional recurrent networks such as vanishing or exploding gradients (Hochreiter & Schmidhuber 1997; Hou et al. 2023; Haputhanthri & Wijayasiri 2021; Jain et al. 2020).

The model uses as input the selected predictors (PWV UCSC, Temp, RH), together with historical values of the target variable (PWV APEX), to forecast future PWV APEX values. To ensure the integrity of the predictive process and avoid the bias known as “data leakage”, each input window contains only values observed up to the forecast issue time, and the targets are strictly later values, replicating realistic forecasting conditions.

The network was configured to analyze temporal windows of 48 steps, equivalent to 6 days of data (48 × 3 h). The architecture includes two hidden layers of 120 neurons each. This configuration was selected after multiple tests comparing different hidden layer sizes; the final setup gave the lowest root mean square error (RMSE) and the highest coefficient of determination (R²) across the 12 and 24 h forecasting horizons.

The Adam optimizer was employed for its adaptive per-parameter learning rates, which improve model convergence (Yang & Wang 2022). In our tests, it provided the best balance between convergence speed and performance compared with other optimizers such as AdamW, stochastic gradient descent, and RMSprop. The mean squared error was used as the loss function.

Figure 4 presents the architecture of the proposed LSTM model. The model takes as input four variables (Features): PWV APEX (1), PWV UCSC (2), Temp (3), and RH (4) recorded over 48 time-steps. The output consists of predicted values of future PWV APEX at four distinct time horizons: 4, 8, 12, and 16 time-steps ahead, which correspond to 12, 24, 36, and 48 hours into the future, respectively (multi-output).
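The input/output structure described above can be illustrated with a sliding-window builder. This is a sketch under our assumptions: each sample uses 48 consecutive 3-hour steps as input and takes its targets 4, 8, 12, and 16 steps after the last input step, so no target value falls inside its own input window. The names are hypothetical.

```python
import numpy as np

WINDOW = 48                # 48 steps x 3 h = 6 days of input history
HORIZONS = (4, 8, 12, 16)  # steps ahead, i.e. 12, 24, 36, and 48 h


def make_windows(features, target, window=WINDOW, horizons=HORIZONS):
    """Return inputs X (samples, window, n_features) and targets y (samples, n_horizons).

    Sample s uses features[s : s + window]; its targets are target[s + window - 1 + h],
    i.e. values strictly after the last input step.
    """
    features = np.asarray(features, dtype=float)
    target = np.asarray(target, dtype=float)
    n_samples = len(target) - window - max(horizons) + 1
    if n_samples < 1:
        raise ValueError("series too short for the requested window and horizons")
    X = np.stack([features[s : s + window] for s in range(n_samples)])
    last = np.arange(n_samples) + window - 1  # index of each window's last step
    y = np.stack([target[last + h] for h in horizons], axis=1)
    return X, y


# Columns stand for PWV APEX, PWV UCSC, Temp, RH (here just synthetic numbers).
series = np.arange(100 * 4, dtype=float).reshape(100, 4)
X, y = make_windows(series, series[:, 0])
print(X.shape, y.shape)  # (37, 48, 4) (37, 4)
```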

The architecture includes two stacked LSTM layers, each with 120 hidden units (neurons), which process the input sequence to extract relevant temporal patterns. These are followed by a fully connected output layer that maps the final LSTM outputs to the predicted values. This configuration was selected for its ability to effectively capture complex temporal dynamics while maintaining efficient training performance.

Fig. 4 Diagram of the LSTM architecture.
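A PyTorch analogue of the architecture in Fig. 4 (the model in this study was built in MATLAB, so this is illustrative only). Two stacked LSTM layers with 120 units read the 48 × 4 input window, and a linear layer maps the last time step's hidden state to the four horizons. Using only the last hidden state, and Adam's default learning rate, are our assumptions; the class and function names are hypothetical.

```python
import torch
from torch import nn


class PWVForecaster(nn.Module):
    """Hypothetical PyTorch analogue: 2 stacked LSTM layers (120 units) + dense output."""

    def __init__(self, n_features=4, hidden=120, n_layers=2, n_horizons=4):
        super().__init__()
        self.lstm = nn.LSTM(n_features, hidden, num_layers=n_layers, batch_first=True)
        self.head = nn.Linear(hidden, n_horizons)

    def forward(self, x):
        # x: (batch, 48 time steps, 4 features) -> out: (batch, 48, hidden)
        out, _ = self.lstm(x)
        # Last time step's hidden state -> one prediction per horizon (12/24/36/48 h).
        return self.head(out[:, -1, :])


model = PWVForecaster()
optimizer = torch.optim.Adam(model.parameters())  # default lr; the text gives none
loss_fn = nn.MSELoss()


def train_step(x, y):
    """One Adam update on a mini-batch; returns the MSE loss computed before the update."""
    model.train()
    optimizer.zero_grad()
    loss = loss_fn(model(x), y)
    loss.backward()
    optimizer.step()
    return loss.item()


batch = torch.randn(8, 48, 4)  # 8 windows of 48 steps x 4 features
print(model(batch).shape)      # torch.Size([8, 4])
```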

3.1 LSTM architecture

The LSTM network is a type of recurrent neural network designed to address the limitations of standard recurrent neural networks when modeling long sequences. It incorporates a memory cell regulated by three gates (input, forget, and output) that control the flow of information over time. These gates determine which information is retained, discarded, or passed to the next time step.

The following formulation is adapted from standard LSTM definitions, using the approach by Hou et al. (2023). At each time step t, the gates are calculated as follows:

$$i_t=\sigma\left(W_i \cdot\left[h_{t-1}, x_t\right]+b_i\right) \tag{2}$$

$$f_t=\sigma\left(W_f \cdot\left[h_{t-1}, x_t\right]+b_f\right) \tag{3}$$

$$o_t=\sigma\left(W_o \cdot\left[h_{t-1}, x_t\right]+b_o\right). \tag{4}$$

In these expressions, $x_t$ is the input vector at time step $t$, and $h_{t-1}$ is the hidden state from the previous time step. The weight matrices $W_i$, $W_f$, $W_o$ and the bias vectors $b_i$, $b_f$, $b_o$ are learned during training. The sigmoid activation function $\sigma$ constrains the gate outputs to values between 0 and 1, effectively controlling how much information passes through each gate. The candidate cell state $\tilde{C}_t$, which represents new information to be considered for the memory update, is computed as

$$\tilde{C}_t=\tanh\left(W_c \cdot\left[h_{t-1}, x_t\right]+b_c\right). \tag{5}$$

Here, $W_c$ and $b_c$ are the weight matrix and bias associated with the candidate state, and tanh is the hyperbolic tangent activation function. The cell state $C_t$ is updated by combining the previous state $C_{t-1}$ with the candidate state, modulated by the forget and input gates, respectively:

$$C_t=f_t \odot C_{t-1}+i_t \odot \tilde{C}_t. \tag{6}$$

The symbol ⊙ denotes the Hadamard (element-wise) product, allowing for selective control over each element of the state vector. Finally, the hidden state $h_t$, which also serves as the output of the LSTM cell, is computed from the output gate and the updated cell state:

$$h_t=o_t \odot \tanh\left(C_t\right). \tag{7}$$

This mechanism enables the LSTM network to maintain long-term dependences in sequential data, while adaptively filtering relevant information at each time step.
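Equations (2)–(7) translate directly into a single time-step function. This NumPy sketch stores each gate's weights as one matrix acting on the concatenation $[h_{t-1}, x_t]$, as written above; the dictionary layout, names, and random weights are our own illustrative choices.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step following Eqs. (2)-(7).

    W maps gate name -> matrix of shape (hidden, hidden + inputs) applied to [h_{t-1}, x_t];
    b maps gate name -> bias vector of shape (hidden,).
    """
    z = np.concatenate([h_prev, x_t])       # [h_{t-1}, x_t]
    i_t = sigmoid(W["i"] @ z + b["i"])      # input gate, Eq. (2)
    f_t = sigmoid(W["f"] @ z + b["f"])      # forget gate, Eq. (3)
    o_t = sigmoid(W["o"] @ z + b["o"])      # output gate, Eq. (4)
    c_tilde = np.tanh(W["c"] @ z + b["c"])  # candidate cell state, Eq. (5)
    c_t = f_t * c_prev + i_t * c_tilde      # element-wise (Hadamard) products, Eq. (6)
    h_t = o_t * np.tanh(c_t)                # hidden state / cell output, Eq. (7)
    return h_t, c_t


hidden, n_inputs = 3, 4
rng = np.random.default_rng(0)
W = {g: 0.1 * rng.normal(size=(hidden, hidden + n_inputs)) for g in "ifoc"}
b = {g: np.zeros(hidden) for g in "ifoc"}
h, c = np.zeros(hidden), np.zeros(hidden)
for x_t in rng.normal(size=(48, n_inputs)):  # run over one 48-step window
    h, c = lstm_step(x_t, h, c, W, b)
print(h.shape)  # (3,)
```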

3.2 LSTM model implementation

The model was implemented in MATLAB R2024b and, for cross-validation, in Python 3.8.20. The dataset was split chronologically into training (80%) and validation (20%) subsets: the training data cover the period from July 13, 2023, to July 14, 2024, and the validation data cover July 15, 2024, to October 14, 2024. Training was conducted over 180 epochs using the Adam optimizer.
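A pandas sketch of this chronological split, using the dates given above on a 3-hourly `DatetimeIndex`; the helper name is hypothetical. No shuffling is applied, so every validation timestamp is later than every training timestamp.

```python
import pandas as pd


def chronological_split(df: pd.DataFrame):
    """Split a time-indexed frame into training and validation periods (no shuffling)."""
    train = df.loc[:"2024-07-14"]  # partial-date string: includes all of July 14
    valid = df.loc["2024-07-15":]
    return train, valid


idx = pd.date_range("2023-07-13", "2024-10-14 21:00", freq="3h")
frame = pd.DataFrame({"step": range(idx.size)}, index=idx)
train, valid = chronological_split(frame)
print(len(train), len(valid), round(len(train) / len(frame), 2))  # 2944 736 0.8
```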

3.3 LSTM model evaluation

The model’s performance was evaluated using multiple metrics: the correlation coefficient (r), the coefficient of determination (R²), RMSE, the mean absolute error (MAE), and the mean absolute percentage error (MAPE).

R² measures the proportion of the variance in the observed data that is explained by the model; values close to 1 indicate an excellent fit. It is defined as

$$R^2=1-\frac{\sum_{i=1}^n\left(y_i-\hat{y}_i\right)^2}{\sum_{i=1}^n\left(y_i-\bar{y}\right)^2}, \tag{8}$$

where $y_i$ denotes the observed (true) values, $\hat{y}_i$ is the predicted value for each $i$, $\bar{y}$ is the mean of the observed values, and $n$ is the total number of observations.

The RMSE was also employed to measure the average magnitude of errors between predictions and actual values, giving greater weight to larger errors. Its interpretation is intuitive, as it is expressed in the same units as the target variable (i.e., in millimeters).

$$\mathrm{RMSE}=\sqrt{\frac{1}{n} \sum_{i=1}^n\left(y_i-\hat{y}_i\right)^2}. \tag{9}$$

Additionally, the MAE was used as a complementary metric, representing the average absolute difference between predicted and actual values. Unlike RMSE, MAE treats all errors equally without emphasizing larger deviations, making it useful for assessing overall prediction performance.

$$\mathrm{MAE}=\frac{1}{n} \sum_{i=1}^n\left|y_i-\hat{y}_i\right|. \tag{10}$$

Finally, the MAPE was calculated to express the average error as a percentage of the actual values. This metric is particularly useful for evaluating model performance across different scales, although it may be unstable when actual values approach zero:

$$\mathrm{MAPE}=\frac{1}{n} \sum_{i=1}^n\left|\frac{y_i-\hat{y}_i}{y_i}\right| \times 100\%. \tag{11}$$

To further assess the model’s performance, we generated a set of plots, including the evolution of the loss during training, comparisons of predicted and actual values, and scatter plots with regression lines.
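The five metrics can be computed together as sketched below. R² is written in the conventional residual form, $1-\sum (y_i-\hat{y}_i)^2/\sum (y_i-\bar{y})^2$. The helper name is hypothetical, and MAPE assumes no observed value is zero, which holds for the PWV series here (minimum 0.14 mm).

```python
import numpy as np


def evaluate(y_true, y_pred):
    """Return r, R^2, RMSE, MAE, and MAPE (in %) for one forecasting horizon."""
    y = np.asarray(y_true, dtype=float)
    y_hat = np.asarray(y_pred, dtype=float)
    err = y - y_hat
    r = np.corrcoef(y, y_hat)[0, 1]
    r2 = 1.0 - np.sum(err**2) / np.sum((y - y.mean()) ** 2)  # residual form of R^2
    return {
        "r": float(r),
        "R2": float(r2),
        "RMSE": float(np.sqrt(np.mean(err**2))),        # Eq. (9)
        "MAE": float(np.mean(np.abs(err))),             # Eq. (10)
        "MAPE": float(np.mean(np.abs(err / y)) * 100),  # Eq. (11); y must be non-zero
    }


metrics = evaluate([1.0, 2.0, 4.0], [1.0, 2.0, 2.0])
print({name: round(value, 3) for name, value in metrics.items()})
```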

Table 1 Performance of the LSTM model for different forecasting horizons.

4 Results and discussion

This section presents the LSTM predictions of PWV APEX compared with the future PWV values actually measured at APEX. Performance was evaluated for forecasts 4, 8, 12, and 16 steps ahead, corresponding to forecasting horizons of 12, 24, 36, and 48 hours.

4.1 Comparative evaluation of different forecasting horizons

Table 1 presents the performance of the LSTM model across the four forecasting horizons. The results show a strong correlation (r) and a high R² between predicted and observed PWV for the short-term horizons (12 and 24 h). For these two horizons, RMSE is ~0.35 mm, MAE ~0.24 mm, and MAPE ~22%.

The prediction accuracy decreases as the forecasting horizon increases to 36 and 48 h. Quantitatively, the correlation coefficient decreases from r = 0.95 (12 h) and r = 0.93 (24 h) to r = 0.69 (36 h) and r = 0.56 (48 h), while R² drops from 0.90 and 0.87 to 0.46 and 0.23, respectively. RMSE values increase from 0.33 mm (12 h) and 0.38 mm (24 h) to 0.77 mm (36 h) and 0.92 mm (48 h), and MAPE increases dramatically from 21.1% (12 h) and 23.2% (24 h) to over 60% (36 and 48 h). These indicators show that both absolute and relative errors grow rapidly once forecasts extend beyond one day. An RMSE above 0.9 mm at 48 h implies a large uncertainty in the predicted atmospheric conditions, which may limit the usefulness of these predictions.

This indicates that the LSTM model can reliably reproduce most of the variability in the observed PWV at the 12 and 24 h horizons (R² of 0.87–0.90), suggesting that these predictions are useful for operational tasks at observatories sensitive to PWV conditions.

4.2 Comparison between predicted and real PWVs

Figure 5 illustrates the temporal comparison of the values predicted by the LSTM model (PWV LSTM) and the real observed values recorded by APEX (PWV APEX) across the four forecasting horizons. The plots visually confirm that the model effectively captures the temporal dynamics and amplitude variations of PWV, particularly for the short-term forecasts (12 and 24 h), where the predicted series closely tracks the observations. A greater divergence between the predicted and real PWV series is observed at longer horizons (36 and 48 h), consistent with the degradation of the metrics (lower r and R², higher RMSE and MAE) and the sharp rise in MAPE noted above.

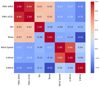

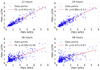

Figure 6 presents scatter plots of the predicted versus real PWV values for each forecasting horizon, including the regression line. The tight clustering of points along the regression line for the 12 and 24 h horizons reinforces the strong correlation and high accuracy metrics reported. As the forecasting horizon increases to 36 and 48 h, the scatter increases substantially and the values of r and R² drop, indicating a weaker linear relationship and reduced predictive power, consistent with the quantitative metrics.

To analyze the distribution of prediction errors at different PWV levels, Figure 7 shows the difference between observed and forecast PWV, $\Delta\mathrm{PWV} = \mathrm{PWV}_{\mathrm{obs}} - \mathrm{PWV}_{\mathrm{pred}}$, as a function of the observed PWV for the 12 and 24 h horizons. The errors remain centered around zero, with larger dispersion at higher PWV values.

To assess performance at different PWV levels, we stratified the RMSE into three PWV categories. For the 12 h horizon, the RMSE was 0.20 mm under very dry conditions (PWV < 1 mm), 0.34 mm under intermediate conditions (1–2 mm), and 0.53 mm for PWV > 2 mm. For the 24 h horizon, the errors were slightly larger, with RMSE values of 0.22, 0.39, and 0.62 mm for the same categories. These results confirm that the model performs best under dry-to-moderate conditions, which are the most relevant for astronomical observations, and that prediction errors grow as atmospheric humidity increases.
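The stratification above can be sketched as follows. The sketch assumes the bins are defined on the observed PWV and that the 1 mm and 2 mm boundary values belong to the intermediate bin; the text does not specify either detail, and the function name `stratified_rmse` and the toy values are hypothetical.

```python
import numpy as np

def stratified_rmse(obs, pred, edges=(1.0, 2.0)):
    """RMSE (mm) in bins of observed PWV: below, between, and above the two edges."""
    obs = np.asarray(obs, dtype=float)
    pred = np.asarray(pred, dtype=float)
    delta = obs - pred                       # ΔPWV = PWV_obs - PWV_pred
    lo, hi = edges
    bins = {
        "PWV < 1 mm": obs < lo,
        "1-2 mm": (obs >= lo) & (obs <= hi),  # boundary handling is an assumption
        "PWV > 2 mm": obs > hi,
    }
    out = {}
    for label, mask in bins.items():
        # NaN marks an empty bin rather than raising on an empty mean
        out[label] = float(np.sqrt(np.mean(delta[mask] ** 2))) if mask.any() else float("nan")
    return out

# Made-up example: two samples per bin
obs = [0.5, 0.8, 1.2, 1.8, 2.5, 3.0]
pred = [0.6, 0.7, 1.5, 1.5, 2.0, 3.5]
res = stratified_rmse(obs, pred)
print(res)
```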

Fig. 5 Comparison of PWV APEX (blue) and PWV LSTM (orange) for the different forecasting horizons.

Fig. 6 Scatter plots for PWV APEX and PWV LSTM at the four different forecasting horizons, including the linear regression fit.

Fig. 7 Scatter plots of ΔPWV (observed minus predicted) against observed PWVs for 12 h (top) and 24 h (bottom) forecasting horizons. The distribution remains centered near zero, with increasing dispersion at higher PWV values.

4.3 Comparison of LSTM and GFS at 12 and 24 h

We compared our results with the GFS forecasts for the 12 and 24 h horizons, which are the most robust outcomes of the LSTM model. The GFS data (PWV GFS) are forecasts for the APEX site covering a total horizon of 5 days in 6-hour steps. For a direct comparison, we analyzed the LSTM and GFS predictions over the same period, at the same time resolution, and for the same forecasting horizons. Figure 8 compares both models with PWV APEX at the 12 and 24 h horizons, and Figure 9 shows the differences between each model and the observed values (PWV LSTM − PWV APEX and PWV GFS − PWV APEX).
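Comparing the models over the same period and at the same time resolution amounts to keeping only the timestamps present in every series before computing the differences. A minimal NumPy sketch, assuming sorted, duplicate-free `datetime64` timestamps and, for illustration, 3-hourly APEX and LSTM series (the data were averaged to 3 h; Fig. 2) alongside 6-hourly GFS steps. The helper name `align_series` and all timestamps and values are hypothetical.

```python
import numpy as np

def align_series(series):
    """Restrict several (times, values) series to their common timestamps.

    Each `times` array must be sorted and free of duplicates (datetime64).
    Returns the common timestamps and the correspondingly subset value arrays.
    """
    common = series[0][0]
    for times, _ in series[1:]:
        common = np.intersect1d(common, times)
    aligned = []
    for times, values in series:
        # indices into `times` of each common timestamp, in sorted order
        _, _, idx = np.intersect1d(common, times, return_indices=True)
        aligned.append(np.asarray(values)[idx])
    return common, aligned

# Made-up example: one day of 3-hourly APEX/LSTM values and 6-hourly GFS values
start, stop = np.datetime64("2024-01-01T00"), np.datetime64("2024-01-02T00")
t3 = np.arange(start, stop, np.timedelta64(3, "h"))
t6 = np.arange(start, stop, np.timedelta64(6, "h"))
apex = np.linspace(0.5, 1.2, t3.size)
lstm = apex + 0.1
gfs = np.array([0.9, 1.0, 1.1, 1.2])

common, (apex_c, lstm_c, gfs_c) = align_series([(t3, apex), (t3, lstm), (t6, gfs)])
diff_lstm = lstm_c - apex_c   # PWV LSTM - PWV APEX
diff_gfs = gfs_c - apex_c     # PWV GFS - PWV APEX
print(common, diff_lstm, diff_gfs)
```

Intersecting timestamps, rather than interpolating GFS onto the 3-hour grid, avoids introducing values that neither model actually produced.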

Table 2 shows that the LSTM model reduces the RMSE of short-term forecasts, with $\mathrm{RMSE}_{\mathrm{LSTM}}$ = 0.328 and 0.376 mm at 12 and 24 h, respectively. These values are notably lower than $\mathrm{RMSE}_{\mathrm{GFS}}$ = 0.625 and 0.636 mm, corresponding to error reductions of ~48% and ~41%, respectively. In terms of MAPE, the LSTM error is ~22%, compared with ~36% for GFS.

However, at the 36 and 48 h horizons, $\mathrm{RMSE}_{\mathrm{LSTM}}$ increases to 0.768 and 0.916 mm, exceeding $\mathrm{RMSE}_{\mathrm{GFS}}$ = 0.662 and 0.644 mm, respectively. At high PWV values, the differences between the LSTM predictions and APEX also become larger. To compare the models over the same PWV range (0.4–1.2 mm) as the GFS data reported in Marín et al. (2015), Table 3 lists the LSTM and GFS metrics restricted to that range.
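The relative RMSE changes implied by the full-range values quoted in the two preceding paragraphs can be checked with a few lines of arithmetic. The sketch only reuses numbers given in the text; a positive value means the LSTM error is lower than the GFS error, a negative value that it is higher. The names `RMSE_MM` and `relative_reduction` are illustrative.

```python
# RMSE values (mm) quoted in the text, as (LSTM, GFS) per forecasting horizon (h)
RMSE_MM = {
    12: (0.328, 0.625),
    24: (0.376, 0.636),
    36: (0.768, 0.662),
    48: (0.916, 0.644),
}

def relative_reduction(model, reference):
    """Percentage by which `model` lowers the error of `reference` (negative = worse)."""
    return 100.0 * (reference - model) / reference

for horizon, (lstm, gfs) in RMSE_MM.items():
    print(f"{horizon} h: {relative_reduction(lstm, gfs):+.1f}%")
```

This gives about +47.5% and +40.9% at 12 and 24 h, and −16.0% and −42.2% at 36 and 48 h.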

In the 0.4–1.2 mm range, the LSTM RMSE is ~34% lower than that of GFS at the 12 h horizon and ~36% lower at 24 h. For the longer 36 and 48 h horizons, the RMSE for LSTM increases to ~60%. The LSTM MAPE values are ~23% and ~25% at 12 and 24 h, while GFS shows similar values of ~35% at all four forecasting horizons. These results reinforce the improved performance of the LSTM predictions relative to the traditional GFS forecasts at the 12 and 24 h horizons across different PWV levels. The larger LSTM discrepancies at longer horizons, and strategies to improve the model, are discussed next.
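Restricting the comparison to a PWV range, as for Table 3, only requires masking the samples before computing the metrics. The sketch below assumes the range is applied to the observed (APEX) PWV and is inclusive at both ends; neither detail is specified in the text, and the helper name `compare_in_range` and the toy values are hypothetical.

```python
import numpy as np

def compare_in_range(obs, pred_lstm, pred_gfs, lo=0.4, hi=1.2):
    """RMSE (mm) and MAPE (%) of two forecasts for samples with lo <= obs <= hi."""
    obs = np.asarray(obs, dtype=float)
    mask = (obs >= lo) & (obs <= hi)
    o = obs[mask]

    def rmse(pred):
        e = np.asarray(pred, dtype=float)[mask] - o
        return float(np.sqrt(np.mean(e ** 2)))

    def mape(pred):
        e = np.asarray(pred, dtype=float)[mask] - o
        return float(100.0 * np.mean(np.abs(e) / o))

    r_lstm, r_gfs = rmse(pred_lstm), rmse(pred_gfs)
    return {
        "RMSE_LSTM": r_lstm,
        "RMSE_GFS": r_gfs,
        "MAPE_LSTM": mape(pred_lstm),
        "MAPE_GFS": mape(pred_gfs),
        "RMSE_reduction_pct": 100.0 * (r_gfs - r_lstm) / r_gfs,
    }

# Made-up example: only the 0.5 and 1.0 mm samples fall inside 0.4-1.2 mm
obs = [0.3, 0.5, 1.0, 1.5]
lstm = [0.2, 0.6, 0.9, 2.0]
gfs = [0.5, 0.7, 0.8, 1.0]
result = compare_in_range(obs, lstm, gfs)
print(result)
```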

Fig. 8 Comparison of PWV LSTM (orange), PWV GFS (green), and PWV APEX (blue) for 12 and 24 h horizons.

Fig. 9 Comparisons of PWV LSTM (orange) and PWV GFS (green) with PWV APEX for 12 and 24 h horizons.

Table 2. Comparison of the RMSE (in mm) and MAPE (in %) of the LSTM and GFS predictions with respect to PWV APEX.

Table 3. Comparison of the RMSE (in mm) and MAPE (in %) values of the LSTM and GFS predictions in the 0.4–1.2 mm range.

4.4 Discrepancies at longer forecast horizons (36 and 48 h)

The comparison between predicted and observed PWV at the 36 and 48 h horizons consistently shows a marked degradation in predictive accuracy, with larger scatter, weaker correlations, and larger errors than at shorter horizons. This loss of performance can be attributed to several factors: (1) the cumulative propagation of errors over longer prediction windows, which is intrinsic to sequential models; (2) the growing influence of small-scale, high-frequency atmospheric processes that are not well captured by the limited set of input variables; and (3) the reduced information available to constrain forecasts beyond one day in such a highly variable environment. These limitations are consistent with known challenges in atmospheric forecasting.
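Factor (1) can be illustrated with a toy model that is deliberately much simpler than the LSTM: a first-order autoregressive process $x_t = \phi x_{t-1} + \varepsilon_t$ with noise variance $\sigma^2$, forecast recursively by feeding each prediction back in as the next input. The expected squared error after $h$ steps is then $\sigma^2\sum_{k=0}^{h-1}\phi^{2k}$, which grows with $h$. The sketch assumes $\phi = 0.9$ and unit-variance Gaussian noise; it is a generic illustration, not derived from the APEX data, and it makes no claim about the multi-step strategy actually used by the authors' model.

```python
import numpy as np

def recursive_forecast_rmse(phi=0.9, sigma=1.0, horizons=(1, 2, 4, 8), n=20000, seed=0):
    """Empirical vs theoretical RMSE of recursive h-step forecasts of a toy AR(1) process."""
    rng = np.random.default_rng(seed)
    max_h = max(horizons)
    eps = sigma * rng.standard_normal(n + max_h)
    x = np.zeros(n + max_h)
    for t in range(1, n + max_h):
        x[t] = phi * x[t - 1] + eps[t]
    results = {}
    for h in horizons:
        forecast = x[:n].copy()            # forecast origins t = 0 .. n-1
        for _ in range(h):                 # feed each forecast back in as the next input
            forecast = phi * forecast
        err = x[h:n + h] - forecast        # compare with the value h steps ahead
        empirical = float(np.sqrt(np.mean(err ** 2)))
        theory = sigma * np.sqrt(sum(phi ** (2 * k) for k in range(h)))
        results[h] = (empirical, theory)
    return results

res_ar = recursive_forecast_rmse()
for h, (emp, th) in res_ar.items():
    print(f"h = {h}: empirical RMSE {emp:.3f}, theory {th:.3f}")
```

Even with a perfectly known model, the only error source is the unpredictable noise, yet it accumulates step by step; an imperfect learned model adds its own error at every step on top of this.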

4.5 Strategies to improve short- and long-term forecasts

Future upgrades and strategies are suggested to improve our PWV predictions on short (<12 h) and long (>36 h) timescales, focusing on several key areas: (1) expanding the current dataset with multiple years of historical observations to enhance model generalization and stability; (2) incorporating additional meteorological variables correlated with PWV that are available for the Chajnantor area, including historical meteorological reanalysis data, satellite data, solar radiation, cloudiness, air pressure, and others; (3) exploring finer time resolutions and shorter forecasting horizons (e.g., 10 min or 1 h), as well as longer horizons of up to 1 week or more; (4) testing alternative deep learning architectures, such as attention-based models and temporal convolutional networks; and (5) assessing the potential for real-time operational deployment in coordination with observatory scheduling systems. These strategies outline possible paths to strengthen the predictive capacity of the model. Once these upgrades are incorporated, we plan to provide real-time predictions at different forecasting horizons on a public web page.

5 Conclusions

This study presents a first investigation of the application of deep learning techniques (specifically LSTM networks) for preliminary predictions of PWV in the Chajnantor area. Using radiometric and meteorological data collected locally for more than 1 year, a site-specific LSTM-based forecasting model was developed and evaluated.

The LSTM model achieved higher predictive accuracy at the 12 and 24 h forecasting horizons, outperforming the traditional GFS forecasts used by APEX in all metrics (r, R², RMSE, MAE, and MAPE) and reducing the prediction errors by ~40–50%. However, its performance declined at the 36 and 48 h horizons, with larger error variability and relative error. This degradation, while expected in time-series forecasting, indicates that further improvements are needed before the model can reliably support longer-term planning of astronomical observations. The strategies outlined in Sect. 4.5 to improve forecasts on shorter (<12 h) and longer (>36 h) timescales will be explored in a future article.

These results highlight the potential of deep learning as a valuable tool for short-term operational PWV forecasting at high-altitude observatory sites. The ability to produce localized high-resolution forecasts tailored to specific atmospheric conditions is particularly advantageous in environments where global numerical weather models struggle to capture fine-scale moisture variability.

Although this study is site-specific, the proposed methodology can be generalized to other astronomical observatory locations. To do so, high-quality, high-resolution local PWV and meteorological data would be needed for model training. Furthermore, the input variables and preprocessing steps may need to be adapted to the specific atmospheric characteristics of each site. With sufficient data and careful retraining, the LSTM-based approach demonstrated here can be transferred to different geographic regions and used as a flexible forecasting tool for PWV predictions to support astronomical operations.

Acknowledgements

We acknowledge support from FIC-R IA 40036152-0, Gobierno Regional del Bio-Bío. We acknowledge support from China Chile Joint Research Fund CCJRF1803 and CCJRF2101. S.R. acknowledges support from ANID FONDECYT Iniciación 11221231. R.B. and S.R. acknowledge support from ANID FONDECYT Regular 1251819. J.C. acknowledges support from ANID FONDECYT Regular 1240843. P.G. is supported by Chinese Academy of Sciences South America Center for Astronomy (CASSACA) Key Research Project E52H540201. R.R. acknowledges support from ANID BASAL FB210003 (CATA) and from Núcleo Milenio TITANs (NCN2023-002). We also acknowledge the availability of meteorological data, PWV, and GFS forecasts provided by the APEX telescope website and by the ALMA observatory.

References

- Bustos, R., Rubio, M., Otárola, A., & Nagar, N. 2014, PASP, 126, 1126

- Castro-Almazán, J. A., Muñoz-Tuñón, C., García-Lorenzo, B., et al. 2016, Proc. SPIE, 9910, 99100P

- Chen, B., Yu, W., Wang, W., Zhang, Z., & Dai, W. 2021, Earth and Space Science, 8, e2021EA001796

- Cortés, F., Cortés, K., Reeves, R., Bustos, R., & Radford, S. 2020, A&A, 640, A126

- Giordano, C., Vernin, J., Vázquez Ramío, H., et al. 2013, MNRAS, 430, 3102

- Güsten, R., Nyman, L. Å., Schilke, P., et al. 2006, A&A, 454, L13

- Haputhanthri, D., & Wijayasiri, A. 2021, in Moratuwa Engineering Research Conference (MERCon) (IEEE), 602

- Hochreiter, S., & Schmidhuber, J. 1997, Neural Comput., 9, 1735

- Hou, X., Hu, Y., Du, F., et al. 2023, Astron. Comput., 43, 100710

- Jain, M., Manandhar, S., Lee, Y. H., Winkler, S., & Dev, S. 2020, Proc. IEEE AP-S and USNC-URSI Meeting, 147

- Li, H., Choy, S., Wang, X., et al. 2023, IEEE J-STARS, 16, 6876

- Li, Y., Hu, T., Qi, K., et al. 2024, in 14th International Symposium on Antennas, Propagation and EM Theory (ISAPE) (IEEE), 1

- Marín, J. C., Pozo, D., & Curé, M. 2015, A&A, 573, A41

- Otárola, A., Travouillon, T., Schöck, M., et al. 2010, PASP, 122, 470

- Otárola, A., De Breuck, C., Travouillon, T., et al. 2019, PASP, 131, 045001

- Pozo, D., Marín, J. C., Illanes, L., Curé, M., & Rabanus, D. 2016, MNRAS, 459, 419

- Pérez-Jordán, w., Castro-Almazán, J. A., & Muñoz-Tuñón, C. 2018, MNRAS, 477, 5477

- Radford, S. J. E. 2011, RevMexAA, 41, 87

- Radford, S. J. E., & Peterson, J. B. 2016, PASP, 128, 075001

- Sleem, R. E., Abdelfatah, M. A., Mousa, A. E.-K., & El-Fiky, G. S. 2024, Sci. Rep., 14, 14608

- Turchi, A., Masciadri, E., Kerber, F., & Martelloni, G. 2019, MNRAS, 482, 206

- Valeria, L., Martínez-Ledesma, M., & Reeves, R. 2024, A&A, 684, A186

- Vaquero-Martínez, J., & Antón, M. 2021, Remote Sens., 13, 2287

- Wu, M., Jin, S., Li, Z., et al. 2021, Remote Sens., 13, 1296

- Xiao, X., Lv, W., Han, Y., Lu, F., & Liu, J. 2022, Atmosphere, 13, 1453

- Yan, X., Yang, W., Li, Y., et al. 2024, Athens J. Sci., 11, 165

- Yang, M., & Wang, J. 2022, Procedia Comput. Sci., 199, 18


All Figures

Fig. 1 Correlation matrix for PWV APEX, PWV UCSC, RH, Temp, WS, and U- and V-wind components. Warmer colors are positive correlations, while cooler colors are negative correlations.

Fig. 2 Number of occurrences for each variable after data cleaning, imputation of missing values, and 3-hour averaging.

Fig. 3 Power spectral density plots from the FFT analysis of PWV APEX, PWV UCSC, RH, and Temp. The x-axis represents frequency in cycles per year.

Fig. 4 Diagram of the LSTM architecture.

