| Issue |

A&A

Volume 703, November 2025

|

|

|---|---|---|

| Article Number | A291 | |

| Number of page(s) | 18 | |

| Section | Interstellar and circumstellar matter | |

| DOI | https://doi.org/10.1051/0004-6361/202453264 | |

| Published online | 27 November 2025 | |

Multiscale turbulence synthesis: Validation in 2D hydrodynamics

1

Laboratoire de Physique de l’École Normale Supérieure, ENS, Université PSL, CNRS, Sorbonne Université, Université Paris Cité,

75005

Paris,

France

2

LUX, Observatoire de Paris, Université PSL, Sorbonne Université,

75014

Paris,

France

3

Institut de recherche en astrophysique et planétologie Université Toulouse III – Paul Sabatier, Observatoire Midi-Pyrénées, Centre National de la Recherche Scientifique,

UMR5277,

Toulouse,

France

4

Sorbonne Université, CNRS, UMR7095, Institut d’Astrophysique de Paris,

98 bis Boulevard Arago,

75014

Paris,

France

5

DPHY, ONERA, Université Paris-Saclay,

91120

Palaiseau,

France

★ Corresponding author: This email address is being protected from spambots. You need JavaScript enabled to view it.

Received:

2

December

2024

Accepted:

28

June

2025

Context. Numerical simulations can follow the evolution of fluid motions through the intricacies of developed turbulence. However, they are rather costly to run, especially in 3D. In the past two decades, generative models have emerged that produce synthetic random flows at a computational cost equivalent to no more than a few time steps of a simulation. These simplified models qualitatively bear some characteristics of turbulent flows in specific contexts (incompressible 3D hydrodynamics or magnetohydrodynamics) but generally struggle with the synthesis of coherent structures.

Aims. We aim to generate random fields (e.g. velocity, density, magnetic fields) with realistic physical properties for a large variety of governing partial differential equations and at a small cost relative to time-resolved simulations.

Methods. We propose a set of simple steps applied to given sets of partial differential equations: we filter from large to small scales, derive a first order time evolution approximation from Gaussian random initial conditions during a prescribed coherence time, and finally sum over scales to generate the fields.

Results. We test the validity of our method in the simplest framework: 2D decaying incompressible hydrodynamical turbulence. We compare the results of 2D decaying simulations with snapshots of our synthetic turbulence. We first quantitatively assess the difference with standard statistical tools: power spectra, increments, and structure functions. These indicators can be reproduced by our method during up to about a third of the turnover timescale. We also consider recently developed scattering transforms statistics, which are able to efficiently characterise non-Gaussian structures. This reveals a more significant discrepancy; however, this can be bridged by bootstrapping. Finally, the number of Fourier transforms necessary for one synthesis scales logarithmically in the resolution, compared to linearly for time-resolved simulations.

Conclusions. We have designed a multiscale turbulence synthesis (MuScaTS) method to efficiently short-circuit costly numerical simulations to produce realistic instantaneous fields.

Key words: hydrodynamics / magnetohydrodynamics (MHD) / turbulence / ISM: structure

© The Authors 2025

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. This email address is being protected from spambots. You need JavaScript enabled to view it. to support open access publication.

1 Introduction

Turbulence has distinctive features that are hard to characterise quantitatively. Nevertheless, our experience tells us that a lot of information is encoded even in a single 2D projection of a tracer in a 3D turbulent flow. Indeed, when we vigorously push a puff of smoke, it soon develops convoluted motions and diverging trajectories blur the initial impulse, but nevertheless some characteristic signatures remain. When we look at a still picture of smoke trailing in the wake of a candle or an incense stick, our brain not only immediately recognises the context, it also grasps a sense for the fluid shearing and spiralling motions in vortices and gets a feeling of the large-scale wind direction. Astronomers who study the interstellar medium are very much in the same situation; the sky before their eyes is a still projection of tracers evolving through the fluid dynamics at play in these dilute media, which the astronomers attempt to infer.

Quantitative probes of the nature of the underlying fluid dynamics, however, are quite elusive. Turbulence research so far has only managed to isolate a few generic statistical properties shared by turbulent flows. In the case of incompressible hydrodynamics (HD), power-law scalings for the velocity power spectrum were first obtained by Kolmogorov (1941) using conservation arguments across scales. However, scaling laws for the velocity and magnetic fields power spectra are still a matter of debate, even in the relatively simple case of incompressible magnetohydrodynamic (MHD) turbulence (Iroshnikov 1963; Kraichnan 1965; Goldreich & Sridhar 1995; Schekochihin 2022). Then, anomalous scalings for the structure functions were discovered (Kolmogorov 1962), which led to a large legacy of intermittency models and observations of statistical properties on the increments of velocity, energy dissipation rates, vorticity, or magnetic fields (Frisch 1996; She & Leveque 1994; Grauer et al. 1994; Politano & Pouquet 1995). Finally, a few exact analytical results were predicted, such as the Kárḿan–Howarth–Monin 4/5th relation (de Karman & Howarth 1938; Monin 1959; Frisch 1996) in 3D incompressible HD and its analogues in compressible or MHD turbulence (Galtier & Banerjee 2011; Banerjee & Galtier 2013, 2014, 2016).

In contrast, time-resolved simulations can accurately predict the evolution of fluid motions from random initial conditions and we could try to assess what statistical laws control their outcomes. However, such simulations are rather costly to run, especially in 3D and in a developed turbulent regime, which limits the number of possible independent realisations of these experiments.

In the past two decades, several authors have developed efficient techniques to produce random fields that bear some of the known characteristics of turbulence. A natural starting step is simply to produce a Gaussian velocity field with a prescribed power spectrum (Mandelbrot & van Ness 1968). The application of a non-linear transformation to this fractional Gaussian field can then yield some degree of non-Gaussianity, such as inter-mittency. However, these non-Gaussianities may have nothing to do with actual physics. For instance, it is challenging to generate asymmetries in the longitudinal velocity increments that generate non-zero energy transfer, let alone transfer in the right scale direction.

Some techniques focus on advection. Rosales & Meneveau (2006) (multiscale minimal Lagrangian map, MMLM) and Rosales & Meneveau (2008) (multiscale turnover minimal Lagrangian map, MTML) transport ballistically an initial fractional Gaussian velocity field under its own influence in a hierarchy of embedded smoothing scales. They show this procedure generates anomalous scalings and energy transfer although they do not compare it quantitatively to experiments. Subedi et al. (2014) later extended the same approach in MHD. More recently Lübke et al. (2024) showed they could initiate the formation of some current sheets if they applied the same technique to an already non-Gaussian initial field.

Other techniques focus on deformation and neglect advection. Chevillard & Meneveau (2006) and Chevillard et al. (2011) deform a Gaussian field with a stretching matrix exponential to produce a tunable random field with given power spectrum and intermittency properties. Durrive et al. (2020) generalise the same ideas to incompressible MHD. However, these models fail to reproduce coherent structures seen in direct numerical simulations (DNS, see Momferratos et al. 2014; Richard et al. 2022), so Durrive et al. (2022) extend their model to include prescribed random dissipative sheets.

In this paper, we present a set of generic ideas that enable us to produce synthetic models for many types of turbulent flows (HD incompressible or isothermal, self-gravitating or not, incompressible MHD). We present our multiscale turbulence synthesis (MuScaTS) technique in Section 2 and compare it to previous models in Section 3. In Section 4, we carefully assess its validity in the simplest framework of 2D incompressible hydro-dynamical turbulence. With this aim, on one hand we compute the results of DNS evolving an initial Gaussian field after some finite time, and, on the other hand, we generate snapshots of synthetic turbulence using several variants of our method. In order to quantitatively compare the two, we computed power spectra, increments statistics, transfer functions and we finally assessed the quality of the textures we generated by computing scattering transforms statistics (see Allys et al. 2019). We discuss our results in Section 5 and develop the prospects of the MuScaTS method in Section 6.

2 Synthetic models of turbulence: The MuScaTS technique

In the following subsections, we present the ingredients of our framework: a multiscale filtering (2.1), and a generic form (2.2) for partial differential equations which survives filtering under some additional approximations (2.3). This allows us to build a synthetic flow with a sweep from large to small scales (2.4). We then discuss in more details our two main approximations regarding time evolution (2.4.1) and coherence time estimation (2.4.2).

2.1 Isotropic filtering

The purpose of this section is to define a continuous series of filters labelled by their respective scales. In particular, this allows us to reconstruct any zero mean field from its filtered components, and to separate it into a small and a large-scale components.

We consider tensor fields (such as the density or the velocity fields, for example) on a space domain Ɗ = ℝd of dimension d. For a field f, we define its filtered version fℓ at a scale ℓ through

(1)

(1)

where the sign * denotes convolution and φℓ is a bandpass filter that selects scales on the order of ℓ.

We define a continuous collection of filters labelled by all possible scales ℓ ∈ ℝ+ by dilation from a single mother filter φ through the formula

(2)

(2)

where the mother filter φ selects scales on the order of 1, i.e. its Fourier transform  is real positive, decays at small and large wavenumbers and peaks at around |k| = 1, where |k| denotes the norm of k. Note that since

is real positive, decays at small and large wavenumbers and peaks at around |k| = 1, where |k| denotes the norm of k. Note that since  , φ has zero mean and so has fℓ. The dilation formula in Fourier space then takes the form

, φ has zero mean and so has fℓ. The dilation formula in Fourier space then takes the form

(3)

(3)

We further assume isotropic filtering, i.e.  depends only on |k| through the function Φ(s) defined for s ∈ ℝ+ such that

depends only on |k| through the function Φ(s) defined for s ∈ ℝ+ such that

(4)

(4)

or equivalently, using a short-hand notation that we use throughout the paper for such integrals,

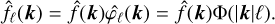

(6)

(6)

We assume that this integral is finite (0 < CΦ < +∞), which implicitly constrains the shape of our mother filter  because

because  should decay fast enough at both |k| → 0 and |k| → +∞ in order to keep this integral finite. Without loss of generality, we can assume that

should decay fast enough at both |k| → 0 and |k| → +∞ in order to keep this integral finite. Without loss of generality, we can assume that

(7)

(7)

by redefining Φ → Φ/CΦ. For example, the choice

(8)

(8)

results in a normalised CΦ.

In Appendix A, we show that the normalisation (7) allows us to reconstruct simply any field f (with zero mean) from its filtered components fℓ, namely we have the following reconstruction formula

(9)

(9)

the components of f at scales respectively smaller and larger than a given ℓ, so that

(12)

(12)

2.2 Scope of our method: Generic evolution equations

We present here a generic form of the partial differential equations for which the approximations inherent to our method can be more easily justified. Consider a n-components vector W characterising the state of the gas as a field on our space domain Ɗ. For example, for incompressible 3D fluid dynamics, we can take W = (w), with w the vorticity vector. Or we could use W = (w, ρ) with ρ the mass density for compressible HD.

We then consider evolution equations in the form

![${\partial _t}W + \left( {v \left[ W \right] \cdot \nabla } \right)W = S\left[ W \right] \cdot + D\left[ W \right],$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq18.png) (13)

(13)

where v is an advection velocity vector (i.e. it has the same number of components d as the space dimension), S is a n × n deformation matrix and D is a term with n components which can (generally but not always) characterise the diffusion or dissipation terms. Here, the brackets [W] indicate that v, S and D are affine functions of the state variables field W. In other words, we require the non-linearities to be at most quadratic in the state vector W. We keep the freedom of v having a linear dependence on W, to accommodate future cases where the advection velocity might be more complicated, but all practical results presented in this paper are for v[W] = u, where u is the velocity component in the state vector W.

In the following paragraphs, we give a few explicit examples of evolution equations which fit our framework, for the vorticity and divorticity in incompressible 2D hydrodynamics, and for the vorticity in 3D incompressible hydrodynamics. We then list a few more examples without giving proof.

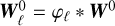

2.2.1 Incompressible 2D hydrodynamics

As a first example, consider the evolution equation for 2D incompressible hydrodynamics, which reads

(14)

(14)

where w is the vorticity component along the normal to the space domain, u is the fluid velocity and v is the kinematic viscosity coefficient. It takes the form (13) if we simply set W = (w), v[W] = u, the fluid velocity as expressed from w by the 2D Biot-Savart formula (without the boundaries’ term)

(15)

(15)

where  is the unit vector normal to the space domain Ɗ, S = 0 and D[W] = v∆w. Note that Equation (15) can be efficiently computed by using Fourier transforms.

is the unit vector normal to the space domain Ɗ, S = 0 and D[W] = v∆w. Note that Equation (15) can be efficiently computed by using Fourier transforms.

2.2.2 Divorticity in 2D hydrodynamics

In 2D incompressible hydrodynamics, it turns out one can also write evolution equations for the curl of the vorticity, also known as the divorticity (Kida 1985; Shivamoggi et al. 2024):

(16)

(16)

Taking the curl of the evolution equation of vorticity (14), it results in

(17)

(17)

where S is the velocity gradient matrix, so that S.B = u.∇B. This equation is in the form (13) when we set W = (B), and it is also strikingly similar to the equation for the evolution of vorticity in 3D, see (18) below. Note that the velocity field can be recovered from the divorticity by inverting the curl twice, which is a linear operation, and so the matrix S also depends linearly on the field B. We use Equation (17) as a test bed in 2D on how to handle the respective advection and deformation terms.

2.2.3 3D incompressible hydrodynamics

For 3D incompressible hydrodynamics, the evolution equation for the vorticity w reads

(18)

(18)

To show that this equation takes our generic form (13), we can set W = w ≡ ∇ × u the vorticity vector, and then (again, v[W] = u as explained in the introduction of Section 2.2)

(19)

(19)

(3D Biot-Savart formula without the boundaries’ term). Symbole S denotes the velocity gradient matrix and D[W] = v∆w is simply the diffusion term.

Note that if we write the incompressible Navier-Stokes equation for the velocity evolution, with the specific pressure p ≡ P/ρ (with P the thermal pressure and p the mass density):

(20)

(20)

it does not take the form (13). Indeed, for the choice W = u, the specific pressure field and its gradient can classically be recovered in the Leray formulation (Majda & Bertozzi 2001, Section 1.8) by solving for p in ∆p = -∂iuj.∂jui, but ∇p then cannot seem to be put into the form S[u].u with S[u] affine in u. However, this is not a restriction since we can simply use Equations (18) with (19), but we feel this example clarifies the subtleties in the scope of the generic form (13).

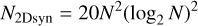

|

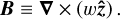

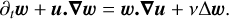

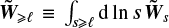

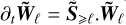

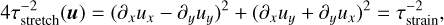

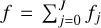

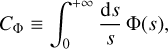

Fig. 1 Example of the performance of our method. Panel a shows the common initial (Gaussian) vorticity field used in the two other panels, namely a standard 2D incompressible hydrodynamics simulation (panel b) and one of our synthesis (panel c). Panel b shows the vorticity field after the field has been evolved for about one third of a turnover time, using more than 300 standard simulation steps. Panel c shows the resulting synthesis after only four MuScaTS steps for a total computer processing unit (CPU) cost 17 times smaller. |

2.2.4 Other cases

The evolution equations for reduced MHD, incompressible MHD, or self-gravitating isothermal gases can also be massaged into our generic form (13). However, we could not make compressible MHD strictly fit our framework, as the Lorentz term combines a product of three primitive variables (and our attempts at changing variables always led to a product of three variables).

In the context of large-scale structure formation in cosmology, Euler-Poisson equations in the dust approximation (zero pressure) are easily cast into our generic form (13) for the state vector W = (u, ρ). Indeed, Poisson equation for the gravitational potential is linear in the density. The expansion of the Universe can also be incorporated by switching to properly defined comoving variables. We use this in Section 3.3 to create a link with the multiscale Zel’dovich approximation (Bond & Myers 1996; Monaco et al. 2002; Stein et al. 2019). Nevertheless, the form (13) allows for a large variety of fluid evolution equations.

2.3 Derivation of the filtered evolution equations

In this section, we present a series of approximations leading to a simplified description of the dynamics, namely Equation (28). From Section 2.4 and onwards, the latter equation is the central focus, as, instead of trying to time integrate the more complicated full partial differential Equation (13), we mimic the dynamics from (28). Thus, we are able to generate cheap but realistic turbulent fields, as illustrated in Figure 1.

As a first step, let us recast the evolution Equation (13) into the form (24) below, which spells out how the various scales contribute to the dynamics. To do so, note that because our filtering (1) is a convolution product, it commutes with linear functions1, so we have in particular

![$\matrix{ {v {{\left[ W \right]}_\ell } = v \left[ {{W_\ell }} \right],} \hfill \cr {S{{\left[ W \right]}_\ell } = S\left[ {{W_\ell }} \right],} \hfill \cr {D{{\left[ W \right]}_\ell } = D\left[ {{W_\ell }} \right],} \hfill \cr } $](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq27.png) (21)

(21)

and, filtering (13) with a given bandpass filter φℓ,

![${\partial _t}{W_\ell } = {\varphi _\ell }*\left( { - v \left[ W \right].\nabla W + S\left[ W \right].\nabla W} \right) + D\left[ {{W_\ell }} \right].$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq28.png) (22)

(22)

Then, using the scale decomposition (12), we separate the large and small-scale parts of the velocity field as

![$v = v \left[ {{W_{ < \ell }} + {W_{ \ge \ell }}} \right] = v \left[ {{W_{ < \ell }}} \right] + v \left[ {{W_{ \ge \ell }}} \right] = {v _{ < \ell }} + {v _{ \ge \ell }},$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq29.png) (23)

(23)

and doing the same for S we rewrite the filtered evolution Equation (22) as

![$\eqalign{ & {\partial _t}{W_\ell }{_x} = D\left[ {{W_\ell }} \right]{_x} \cr & - \int_D {{{\rm{d}}^d}\,y} \,{\varphi _\ell }{_y}{v _{ \ge \ell \cdot }}\nabla W{_{x - y}} - \int_D {{{\rm{d}}^d}\,y} \,{\varphi _\ell }{_y}{v _{ < \ell \cdot }}\nabla W{_{x - y}} \cr & + \int_D {{{\rm{d}}^d}\,y} \,{\varphi _\ell }{_y}{S_{ \ge \ell \cdot }}W{_{x - y}} + \int_D {{{\rm{d}}^d}\,y} \,{\varphi _\ell }{_y}{S_{ \ge \ell \cdot }}W{_{x - y}}, \cr} $](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq30.png) (24)

(24)

where Ɗ is the space domain. We expressed convolution products with a notation of the form 」… to help the reader keep track of which variable each field depends on. In particular bear in mind that (24) is evaluated at position x.

The above lengthy but explicit formulation of the dynamics is the viewpoint from which we now proceed to a series of simplifying assumptions, which will result in the approximate evolution Equation (28).

Our first approximation consists in considering that the factors v≥ ℓ and S≥ ℓ appearing inside two of the integrals in (24) are slowly varying in space compared to the filter φℓ, which can be understood as a scale separation approximation. Since these factors presumably remain approximately constant over the local kernel of φℓ, we pull them out of the integrals and write

![$\matrix{ {{\partial _t}{W_\ell } \simeq - {v _{ \ge \ell \cdot }}\nabla {W_\ell } + {S_{ \ge \ell \cdot }}{W_\ell } + D\left[ {{W_\ell }} \right]} \cr { - \int_D {{{\rm{d}}^d}\,y\,{\varphi _\ell }{_y}{v _{ < \ell \cdot }}\nabla W{_{x - y}} + } \int_D {{{\rm{d}}^d}\,y\,{\varphi _\ell }{_y}{S_{ < \ell \cdot }}W{_{x - y}}.} } \cr } $](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq31.png) (25)

(25)

In so doing, we have also taken the ∇ sign out of the integrals, an operation allowed by the property of the gradient of a convolution product ∂i(ƒ * 𝑔) = f * ∂i𝑔 for multivariable functions ƒ and 𝑔. The last two terms on the right hand side are solely due to scales ℓ and below, and represent respectively advection and deformation from small scales convolved with the filter at scale ℓ.

As a second approximation, we consider that these last two terms evolve more rapidly in time than the previous large-scale terms, and somewhat chaotically, i.e. scales smaller than ℓ introduce some stochastic perturbations which affect the scale ℓ. Schematically:

![$\eqalign{ & {\partial _t}{W_\ell } \simeq - {v _{ \ge \ell }}\nabla {W_\ell } + {S_{ \ge \ell }}{W_\ell } + D\left[ {{W_\ell }} \right] \cr & \,\,\,\,\,\,\,\,\,\,\,\,\,\, + {\rm{smaller}}\,{\rm{scales}}\,{\rm{stochasticity}}{\rm{.}} \cr} $](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq32.png) (26)

(26)

Nevertheless, we expect the evolution to remain coherent over some time τℓ at a given scale ℓ. Thereafter, we either prescribe or estimate this timescale τℓ from the larger scale fields, and we provide several ways to estimate it in Section 2.4.1 below. For now, since τℓ controls the timescale over which two initially adjacent fluid particles diverge from each other, it can be seen as the inverse of the largest positive Lyapunov exponent under the combined effects of the advection flow v≥ ℓ and the deformation matrix S≥ ℓ.

This sensitivity to the initial conditions implies that it is not quite necessary to integrate precisely the flow equations during more than a coherence time. Indeed, if the conditions had been slightly different one coherence time before, the current state of the fluid might now be completely different, to a point these initial conditions might as well be chosen randomly. Therefore, rather than solving a complicated stochastic equation, we also assume that the effect of the chaos in the small scales is equivalent to randomly reshuffle initial conditions every coherence time τℓ.

Thanks to this hypothesis, we do not need to integrate the evolution from the beginning, but only during the length of the last coherent time interval. At each scale ℓ, we hence assume that the chaotic evolution can be approximated by deterministically integrating to the targeted time t from random conditions at time t − τℓ during a coherence time interval τℓ . We denote this change by adding tildes over the vectors subject to this approximation. As a result, we approximate the evolution of the field as:

![${\partial _t}{\tilde W_\ell } = - {v _{ \ge \ell }}\nabla {\tilde W_\ell } + {S_{ \ge \ell }}{\tilde W_\ell } + D\left[ {{{\tilde W}_\ell }} \right],$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq33.png) (27)

(27)

starting from random conditions  at intermediate time

at intermediate time  (these intermediate times might hence depend on scale).

(these intermediate times might hence depend on scale).

The law of  still needs to be specified. The chaos induced by the small scales might well be nontrivial, but in a first step we are going to assume that it follows a Gaussian law. Hence we assume that

still needs to be specified. The chaos induced by the small scales might well be nontrivial, but in a first step we are going to assume that it follows a Gaussian law. Hence we assume that  is a filtered version

is a filtered version  of a Gaussian field W0, for which the spectrum needs to be prescribed. Further-more, to reflect the property that neighbouring scales are in fact not independent (we expect the behaviour of the fluid to be continuous across scales), we assume that the fields

of a Gaussian field W0, for which the spectrum needs to be prescribed. Further-more, to reflect the property that neighbouring scales are in fact not independent (we expect the behaviour of the fluid to be continuous across scales), we assume that the fields  are filtered versions of a single random field W0.

are filtered versions of a single random field W0.

We approximate the evolution equation one step further by using the advection velocity field  computed from the synthetic state variable

computed from the synthetic state variable  in Equation (27), and similarly for S≥ℓ, for which we use

in Equation (27), and similarly for S≥ℓ, for which we use  . Finally, the line of reasoning detailed above ultimately leads to the following approximate evolution equation for the dynamics at a given scale ℓ

. Finally, the line of reasoning detailed above ultimately leads to the following approximate evolution equation for the dynamics at a given scale ℓ

Interestingly, it is almost in the initial generic form (13), except that the advection velocity and deformation matrix now depend on all scales above ℓ.

In the following, we introduce yet more approximations, now tailored to solve approximately the approximate evolution Equation (28) we just derived, i.e. we present our turbulence synthesis procedure. At this stage, the reader may legitimately be worried that such an accumulation of (even small) losses of information is too drastic, and can eventually only move us far away from the correct evolution. If so, we invite the reader to recall Figure 1 (or to have a glimpse at the more detailed Figures 3 and 4) were we showed a non-trivial case were the synthesis and the full simulation do match very well. Therefore, to some extent the whole procedure does keep enough relevant information to be of practical use, i.e. we have meaningful examples where it seems to extract the essence of the dynamics.

2.4 Turbulence synthesis

Our last Equation (28) is in closed form for all scales above ℓ: the evolution for  at scale ℓ can be solved once all scales s above ℓ are known. We can hence sweep all scales from the largest down to the smallest and compute

at scale ℓ can be solved once all scales s above ℓ are known. We can hence sweep all scales from the largest down to the smallest and compute  at all scales ℓ (we discuss how we deal with the largest scale in the numerical implementation below, see Section 2.5). The resulting synthesised field can finally be reconstructed as

at all scales ℓ (we discuss how we deal with the largest scale in the numerical implementation below, see Section 2.5). The resulting synthesised field can finally be reconstructed as  . Variants of our model will now be defined by the way we approximate the evolution Equation (28) (detailed in Section 2.4.1) and the way we estimate the coherence time τℓ (developed in Section 2.4.2).

. Variants of our model will now be defined by the way we approximate the evolution Equation (28) (detailed in Section 2.4.1) and the way we estimate the coherence time τℓ (developed in Section 2.4.2).

2.4.1 Time evolution approximations

Here, we focus on our approximations to solve the evolution Equation (28). We assume that the fields  and

and  evolve slowly compared to the fields at scale ℓ, as they are based on larger scales. We therefore assume they remain constant during the time interval τℓ. For instance, we assume that the fluid parcels move ballistically with the velocity

evolve slowly compared to the fields at scale ℓ, as they are based on larger scales. We therefore assume they remain constant during the time interval τℓ. For instance, we assume that the fluid parcels move ballistically with the velocity  during a coherence time τℓ to integrate (28) in time.

during a coherence time τℓ to integrate (28) in time.

We first focus on a case where S = D = 0 and v ≠ 0. In this case, we only need to randomly draw the initial vorticity field  at time

at time  and remap it at positions

and remap it at positions  where the fluid parcel used to be

where the fluid parcel used to be

(29)

(29)

Possible shell crossing is discussed in the Section 2.4.2.

We now focus on the case v = D = 0 (S ≠ 0). Since  is constant during τℓ, the solution of

is constant during τℓ, the solution of  is obtained as a matrix exponential:

is obtained as a matrix exponential:

(30)

(30)

For the general case, we combine the two previous steps as

(31)

(31)

and we finally apply the diffusion step as

![${\tilde W_\ell } \simeq \tilde W_\ell ^{D = 0} + {\tau _\ell }D\left[ {\tilde W_\ell ^{D = 0}} \right].$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq58.png) (32)

(32)

We take those three steps in a particular order: advection, deformation, then diffusion. We feel it is justified by the need to use the quantities v and S at a time as advanced as possible (i.e. as close as possible to the time at which we compute the synthesised flow). Also the computation of the gradients in the deformation matrix requires a synchronous evaluation, so deformation might be better evaluated after advection. We finish by the diffusion step because it somewhat smooths the fronts generated at the convergence points of advection trajectories and where the deformation introduces stretching (a property sought for in adhesion models, for example, see Gurbatov et al. 1989). This also allows to alleviate the small scales generated outside the current filter by the non-linear action of advection and deformation (see discussion in Section 5.6, also). However, in the limit where τℓ is very small, time evolution is linear and the order does not matter.

2.4.2 Coherence time estimates

So far, we have defined the coherence time in this work as the time during which the evolution of the fluid can be considered deterministic at a given length scale. We now examine three ways to estimate this time.

1. It is at first tempting to identify it to the correlation timescale. For example, in the direct enstrophy cascade of 2D turbulence, below the injection scale, the correlation timescale is constant, equal to the injection timescale. It would then make sense to adopt τℓ = constant at all scales.

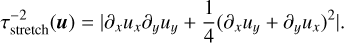

2. The notion of coherence also appeals to the sensitivity to initial conditions. The rate at which fluid trajectories deviate from each other is linked to the symmetric part of the velocity gradient matrix, which characterises the evolution of distances between nearby fluid parcels. We define the stretching time as the inverse of its largest eigenvalue which in incompressible fluids writes as:

(33)

(33)

This eigenvalue can also be seen as the largest instantaneous Lyapunov exponent for fluid trajectories. The above expression for incompressible hydrodynamics (in which ∂xux = −∂yuy) can be recast into

(34)

(34)

which expresses the stretch rate as a sum of squares of the normal and shear strains. That timescale is hence also naturally related to the deformation of fluid elements. Indeed, in some places the fluid might be rotating in a vorticity clump and its evolution at scales below the size of that vortex could be considered deterministic. On the contrary, fluid parcels in between vortices experience strong deformation through shearing motions. Adopting τℓ = τstretch(uℓ) would also ensure that fluid elements retain their integrity (i.e. they are not too deformed and it still makes sense to follow the same fluid particle).

3. Since we resort to a ballistic approximation for the advection of the fluid, our modelled fluid trajectories may cross each other after some finite time. This shell-crossing time can be defined as the inverse of the largest eigenvalue of the velocity gradient (by contrast to its symmetric part above):

(35)

(35)

Integration of ballistic trajectories for more than this timescale does not make sense. Note that this constraint is more related to our ballistic approximation than to the stochastic behaviour of the fluid, and may be relaxed once we resort to a higher order approximation. However, in the case of 2D incompressible hydrodynamics, this timescale is related to the physically motivated Okubo-Weiss (Okubo 1970; Weiss 1991; Haller 2021; Shivamoggi et al. 2024) criterion  . That last expression can also be used to show that requesting τℓ = τshell is slightly less stringent than the stretch criterion (τstretch ≤ τshell).

. That last expression can also be used to show that requesting τℓ = τshell is slightly less stringent than the stretch criterion (τstretch ≤ τshell).

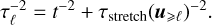

To conclude, because it is not yet clear which of these approaches is best suited, we have tested four choices for the coherence time (from less to more stringent (τstrain < τstretch ≤ τshell ):

Constant τℓ = t

Shell-crossing based

Stretch based

.

.Strain based

.

.

The minus-two powers in the above expressions take soft minima between the various timescales involved and the integration time t. Indeed, when we compare our method to actual simulations, we compute the evolution of the fluid over a finite time t from random initial conditions. In this case, it is not necessary to integrate the evolution of the synthesised flow over times longer than t.

As a final note, most of these definitions imply that the coherence time τℓ(x, t) depends on both scale, position in space and total evolution time t as long as this one is not too long compared to local times of the flow. The initial conditions  should hence not be understood as starting conditions at a synchronous time. Rather, they are random parameters which reflect the sensitivity to initial conditions.

should hence not be understood as starting conditions at a synchronous time. Rather, they are random parameters which reflect the sensitivity to initial conditions.

2.5 Numerical implementation

2.5.1 Discrete filters

A numerical implementation of the above method requires a space domain with a finite extent and a finite space resolution. As a result, the Fourier domain spans a finite number of wavenumbers, and only a finite number of scales is necessary to recover a given field. We choose to consider a cubic (square in 2D) domain of size L = 2π gridded with N pixels and we adopt periodic boundary conditions. The available wavevectors therefore span a regular grid of unit kJ = 2π/L = 1. We restrict ourselves to a discrete set of J + 1 filters  logarithmically distributed in scales in such a way that

logarithmically distributed in scales in such a way that

(36)

(36)

with λ ∈]0, 1[ the ratio between the typical scales of two consecutive filters. This parameter controls the resolution in scales. In the present application, we mostly use λ = 1/2 but we also tested a finer set of scales with  (hence with twice as many filters), without noticing much change.

(hence with twice as many filters), without noticing much change.

We request each filter to be selective in logarithmic scales, in order to be able to use the scale separation arguments at the base of our approximations. The filters  hence select concentric coronas in Fourier space and are centred around wavenumbers kj = kJλj−J with a relative width of 1/λ. In other words, the typical scale associated with filter j is

hence select concentric coronas in Fourier space and are centred around wavenumbers kj = kJλj−J with a relative width of 1/λ. In other words, the typical scale associated with filter j is

(37)

(37)

We point out that these physical scales go in increasing order from the smallest to the largest as j increases.

The discretised version of our normalisation condition (7) will simply be to enforce  for any k contained in the computational domain. Indeed, then the reconstruction formula works as

for any k contained in the computational domain. Indeed, then the reconstruction formula works as

(38)

(38)

from which we deduce  . We present two example filters we used in the present application in the Appendix B.

. We present two example filters we used in the present application in the Appendix B.

2.5.2 Algorithm

The initial Gaussian field W0 is first drawn in real space as a Gaussian white noise. We then convolve it with the desired spectrum by multiplying its Fourier coefficients.

We present below the steps of the algorithm we use to generate turbulent fields  at all scales j from the initial Gaussian field W0. We write

at all scales j from the initial Gaussian field W0. We write  and

and  .

.

To initiate the first j = J step of our iterative algorithm, at the largest scale, we simply assume  . We then parse the successive scales downwards, and we successively apply the following operations at each scale:

. We then parse the successive scales downwards, and we successively apply the following operations at each scale:

At stage j < J, the fields

are known for all i > j.

are known for all i > j.We write

. We compute the advection velocity

. We compute the advection velocity ![${v _{ \ge j}}v \left[ {{{\tilde W\limits^ }_{ \ge j}}} \right]$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq81.png) and similarly

and similarly ![${S_{ \ge j}}S\left[ {{{\tilde W\limits^ }_{ \ge j}}} \right]$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq82.png) .

.We estimate

(for which we might need v ≥ j if we use the local stretch or shell crossing times, for example).

(for which we might need v ≥ j if we use the local stretch or shell crossing times, for example).-

We now replace these values in the construction formulas (31) and (32). We compute the time-evolved field at scale ℓj (without diffusion) as

(39)

(39)where we use a first order linear interpolation scheme to compute

at positions in between grid points.

at positions in between grid points. We finally apply diffusion to the resulting field to get

![${\tilde W_j} = \tilde W_j^{D = 0} + {\tau _j}D\left[ {\tilde W_j^{D = 0}} \right]$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq86.png) . We now have

. We now have  known for all i > j − 1 and we can proceed further back to operation 1 down to the next scale j − 1.

known for all i > j − 1 and we can proceed further back to operation 1 down to the next scale j − 1.

The loop stops when we hit the smallest scale labelled by j = 0. The final field then results from  .

.

For the interpolation at operation 4, we use RegularGridlnterpolator from the scipy.interplolate Python package. One of the caveats of this interpolation remapping is that it is not divergence and mean preserving. One may need to reproject the divergence free fields and adjust preserved quantities after the interpolation process. In our 2D hydrodynamical application in Section 4, we remove its average from the vorticity field to recover the zero mean. The same holds for the deformation step.

The matrix exponential at operation 4 makes use of the Padé approximant implementation from the EXPOKIT package (Sidje 1998), for which we implemented a vectorised wrapper in order to gain efficiency (essentially to call it from Python in a more efficient way for an array of vectors).

Diffusion at operation 5 is computed in Fourier assuming it proceeds over a homogenised time  averaged over the computational domain. In this simplified case, the effect of incompressible viscous diffusion ∂tw = D[w] = v∆w during time

averaged over the computational domain. In this simplified case, the effect of incompressible viscous diffusion ∂tw = D[w] = v∆w during time  is simply to multiply by exp(

is simply to multiply by exp( ) the Fourier coefficients of the initial field w. Note that because τj is not necessarily uniform over the computational domain, we resort to using a volume averaged value for τj. Otherwise, there is to our knowledge no convenient way to integrate the viscous diffusion term.

) the Fourier coefficients of the initial field w. Note that because τj is not necessarily uniform over the computational domain, we resort to using a volume averaged value for τj. Otherwise, there is to our knowledge no convenient way to integrate the viscous diffusion term.

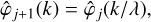

2.5.3 CPU cost scaling

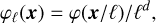

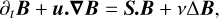

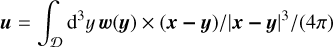

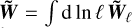

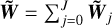

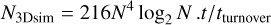

If we neglect the cost of the matrix exponentiation and the remapping (proportional to the number of pixels), most of the computational cost will amount to Fourier transforms. At each scale index j > 0 we need to Fourier transform back and forth because some operations are more efficiently performed in Fourier space (computing the advection velocity, the deformation matrix, or applying the diffusion process) while others are better done in real space (interpolation remap and deformation). To be more precise, the 2D synthesis uses ten calls to the fast Fourier transform (FFT) for each scale level: two for the advection velocity, four for the deformation matrix, two around the remap step, and two around the diffusion step. A 3D synthesis would require three for the advection velocity, nine for the deformation matrix, and six for each of the remap and diffusion steps: a total of 24 calls to a scalar FFT. In general, the method hence requires 10J (2D) or 24J (3D) Fourier transforms to generate one field. Since the CPU cost of a Fourier transform in d dimensions is on the order of Nd log2 Nd operations and J scales like log2 N, the cost of one field generation scales as

(40)

(40)

in 3D.

The cost of one time step of a pseudo-spectral code is proportional to the order of the time integrator: a Runge–Kutta order 4, such as we use in Section 4.1, requires four evaluations of the time derivative of the quantities at play. Each of these evaluation costs five FFTs in 2d (two for the velocity, two for the diagonal components of the vorticity gradient, one to get back to Fourier space), and 3+3+9+3=18 in 3D where the whole velocity gradient terms are also needed. At a Courant number of 1, the Courant–Friedriech–Lewy condition constrains the time-step to be less than tturnover/N: one requires N time steps to reach one turnover time. The cost of a 2D simulation is hence on the order of

(42)

(42)

operations for our Runge-Kutta order 4 implementation, and

(43)

(43)

in 3D.

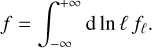

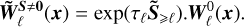

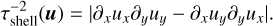

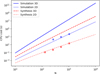

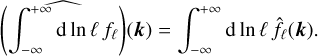

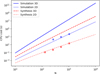

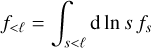

For a simulation over the length of one turnover time, the CPU time gain factor is therefore 2N/ log2(N) in 2D and 3N/ log2(N) in 3D. Both 2D and 3D scalings for simulations and MuScaTS syntheses are displayed on Figure 2. The scalings are all calibrated on a 2D N = 1024 simulation run (rightmost blue circle) using our pseudo-spectral code (see Section 4.1) compiled with Fortran on the totoro server at the mesoscale centre mesoPSL. For reference, the CPU hardware on this server is Intel(R) Xeon(R) Gold 6138 CPU, 2.00GHz. Other circles indicate the scaling of simulations at N = 256 and N = 512: they scale slightly less well than the theory predicts, because we ran our code in parallel on 32 cores, so the communication overhead in the FFT at smaller computational domains (smaller N) deteriorates the scaling. The light red squares show the CPU cost on the same hardware for a python 2D implementation of our MuScaTS synthesis.

As a final note, we point out that the current implementation could be further optimised with respect to the multiscale treatment. For a proper choice of filters, we could indeed afford using coarser grids at larger scales than for the smaller scales. This would considerably accelerate the computation at largest scales and lower the number of effective number of scales which enter the CPU cost scaling.

|

Fig. 2 CPU cost scaling estimates for 2D (light colours) and 3D (dark colours) hydrodynamics simulations (solid, blue) and MuScaTS syntheses (dashed, red). The scalings are computed according to text and calibrated on a 2D simulation (pseudo-spectral code implemented in Fortran) at N = 1024 for one turnover time (rightmost blue circle). The total CPU cost for simulations at N = 256 and N = 512 (the other two blue circles) is also indicated. Red squares stand for the CPU cost of a few 2D MuScaTS syntheses. |

3 Comparison to other methods

We investigate how our MuScaTS technique compares to selected existing works. We compare to Rosales & Meneveau (2006), Chevillard et al. (2011) and then to Zel’dovich’s multi-scale approximation.

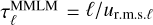

3.1 Comparison to Rosales & Meneveau (2006)

For 3D hydrodynamics, if we neglect the deformation and the diffusion terms, our method is extremely similar to the minimal multiscale Lagrangian map (MMLM) of Rosales & Meneveau (2006) provided we use τℓ as in their Equation (9):  where ur.m.s.ℓ is the prescribed r.m.s. velocity in the initial field at scale ℓ. The only remaining difference is: we would advect the vorticity field and build the velocity from a Biot-Savart formula, while their Lagrangian map is directly applied to the velocity. They have to reproject their velocity field on ∇.u = 0 while we get divergence free fields by construction. We hence expect our method should be more realistic, as both vorticity stretching effects and diffusion are retained while in effect, they neglect pressure terms and diffusion.

where ur.m.s.ℓ is the prescribed r.m.s. velocity in the initial field at scale ℓ. The only remaining difference is: we would advect the vorticity field and build the velocity from a Biot-Savart formula, while their Lagrangian map is directly applied to the velocity. They have to reproject their velocity field on ∇.u = 0 while we get divergence free fields by construction. We hence expect our method should be more realistic, as both vorticity stretching effects and diffusion are retained while in effect, they neglect pressure terms and diffusion.

In a later paper, the same authors Rosales & Meneveau (2008) noticed that they could improve their method by cutting single Lagrangian steps into smaller ones as scales become finer. They considerably improved the realism of the anomalous exponents using this approach, which they call multiscale turnover Lagrangian map (MTLM for short). We note that we could also incorporate this multi-stepping into our MuScaTS method, although it would increase its cost. Our method could perhaps also benefit from the fast algorithms designed by Malara et al. (2016) to improve the efficiency of the MTLM method.

Finally, Subedi et al. (2014) designed an incompressible MHD version of the MMLM method. MuScaTS without diffusion or deformation applied to the choice of variable W = (∇ × u, B) and using  should amount to the same model except we advance the vorticity ∇ × u rather than the velocity, which directly generates divergence free fields (we would however still have to clean the divergence of the magnetic field). The extension of Subedi et al. (2014) by Lübke et al. (2024) proceeds with advection from an already non-Gaussian field and produced convincing coherent structures. Our own implementation will advect along an already deformed field which contains physically motivated non-Gaussianities: we hence expect that the MHD version of MuScaTS should perform equally well if not better, but this remains to be demonstrated.

should amount to the same model except we advance the vorticity ∇ × u rather than the velocity, which directly generates divergence free fields (we would however still have to clean the divergence of the magnetic field). The extension of Subedi et al. (2014) by Lübke et al. (2024) proceeds with advection from an already non-Gaussian field and produced convincing coherent structures. Our own implementation will advect along an already deformed field which contains physically motivated non-Gaussianities: we hence expect that the MHD version of MuScaTS should perform equally well if not better, but this remains to be demonstrated.

3.2 Comparison to Chevillard et al. (2011) (CRV)

The link between MuScaTS and CRV is slightly less direct. We need to rework the equations quite a bit to highlight the similarities and differences.

First, we expose part of our model in a way that eases the comparison. The work of CRV is in the context of 3D Navier-Stokes incompressible equations, so in our framework we take W = (w) where w is the vorticity. We neglect diffusion, or in other words we focus on the inertial range. If we now neglect advection rather than deformation, as in CRV, then only the exponential term remains in Equation (30) which we rewrite more precisely here as

![$\tilde w_\ell ^{S \ne 0}\left( x \right) = \exp \left( {{\tau _\ell }S\left[ {{{\tilde w}_{ \ge \ell }}} \right]} \right).w_\ell ^0\left( x \right),$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq98.png) (44)

(44)

to make clear the intricate dependence of S on the larger scales. If to parallel the work of CRV we now approximate the argument of S by its value ab initio, we get

![${\tilde w_\ell }\left( x \right) = \exp \left( {{\tau _\ell }S\left[ {w_{ \ge \ell }^0} \right]} \right) \cdot w_\ell ^0\left( x \right)$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq99.png) (45)

(45)

or now using Equation (11), we obtain

![${\tilde w_\ell }\left( x \right) = \exp \left( {\int_{s \ge \ell } {{\rm{d}}\,\ln \,s\,} {\tau _\ell }S\left[ {w_s^0} \right]} \right).w_\ell ^0\left( x \right),$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq100.png) (46)

(46)

and, integrating over all scales, we finally get

![${\tilde w^{{\rm{MuScaTS}}}} = \int_{ - \infty }^{ + \infty } {{\rm{d}}\,\ln } \,\ell \,\exp \left( {\int_{s \ge \ell } {{\rm{d}}\,\ln \,s\,} {\tau _\ell }S\left[ {w_s^0} \right]} \right).w_\ell ^0.$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq101.png) (47)

(47)

This formula is our comparison point to CRV’s work below.

Then, let us reformulate part of CRV’s model. In their work, they construct a non-Gaussian random velocity field as the result of the vortex stretching of some initial Gaussian vorticity field w0. Concretely, they consider for w0 a Gaussian white noise vector (that we here denote η) and insert into a Biot-Savart-type formula a proxy for the vorticity field of the form

![${w^{{\rm{CRV}}}} = \exp \left( {{\tau _{{\rm{CRV}}}}{S^{{\rm{CRV}}}}\left[ {{w^0}} \right]} \right).{w^0},$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq102.png) (48)

(48)

where τCRV is a parameter which controls the amount of stretching, as it relates2 to the correlation timescale of the velocity gradients, and SCRV[w0] is a matrix field encoding the stretching of w0 during a typical time τCRV. Now, as a general rule, given a vorticity field w the stretch matrix field reads3

![$S\left[ w \right]\left( x \right) \propto \int {{{\rm{d}}^3}\,y{{r\, \otimes \left( {r \times w\left( y \right)} \right) + \left( {r \times w\left( y \right)} \right) \otimes r} \over {{r^5}}}} ,$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq103.png) (49)

(49)

where r ≡ x − y and ⊗ denotes the tensor product. In addition, an important feature which is universally observed in both experiments and numerical simulations of turbulence, is that turbulent fields are multi-fractal and have long-range correlations. This formally translates into S[w] being log-correlated in space, and a key point in CRV’s work which greatly increases the realism of their model, is to implement this feature into their formulae through a simple, effective rule4, namely by just changing the exponent in the power-law r5 of the denominator of the general form (49). Specifically, by choosing η for their w0 they adopt the rule r5 → r7/2, i.e. they consider (cf. CRV, Equation (12))

![${S^{{\rm{CRV}}}}[\eta ]\left( x \right) \propto \int {{{\rm{d}}^3}\,y{{r\, \otimes \left( {r \times \eta \left( y \right)} \right) + \left( {r \times \eta \left( y \right)} \right) \otimes r} \over {{r^{7/2}}}}} ,$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq104.png) (50)

(50)

and this simple tweak makes their stretch field log-correlated as it should.

Hence, in CRV’s work, log-correlation appears through a cunning but somewhat artificial change of exponent, while the stretching timescale τCRV remains a mere parameter: in particular it is scale-independent. Inspired by their line of thinking, we now suggest an alternative approach in which we retain the physical scaling for the stretch, but we now introduce a scale-dependency of the stretching timescale to achieve log-correlation. Concretely, starting from expressions (48) and (49), and imposing log-correlation with a similar scaling argument as CRV’s, we now build expression (55) below, which is reminiscent of the scale-decomposition (47) of our model and may thus be interpreted in terms of a scale-dependent stretching timescale.

To improve on the physical interpretation of CRV, instead of choosing as initial condition w0 the simple Gaussian white noise η we also adopt a slightly more sophisticated Gaussian field w0 with the Kolmogorov (1941) power spectrum of the vorticity (i.e. with a spectrum scaling as  . But doing so first raises the question: how is CRV’s effective rule then modified? To answer this, we suggest the following rough scaling argument. In Fourier space, for a given corona of radius k and infinitesimal thickness dk, by definition of the spectrum

. But doing so first raises the question: how is CRV’s effective rule then modified? To answer this, we suggest the following rough scaling argument. In Fourier space, for a given corona of radius k and infinitesimal thickness dk, by definition of the spectrum  , so our choice of Kolmogorov scaling implies

, so our choice of Kolmogorov scaling implies  . Then, since the Fourier transform of a power law r−α is kα−d where d = 3 is the dimension of space, our scaling in Fourier space corresponds to a scaling w ~ r5/6−3 in position space. Hence, compared to CRV’s case, we have an additional power r5/6−3 in the numerator of the ratio in (50), so log-correlation requires that we adjust the exponent in the denominator to compensate it, i.e. we consider

. Then, since the Fourier transform of a power law r−α is kα−d where d = 3 is the dimension of space, our scaling in Fourier space corresponds to a scaling w ~ r5/6−3 in position space. Hence, compared to CRV’s case, we have an additional power r5/6−3 in the numerator of the ratio in (50), so log-correlation requires that we adjust the exponent in the denominator to compensate it, i.e. we consider

![${S^{{\rm{CRV}}}}[{w^0}]\left( x \right) \propto \int {{{\rm{d}}^3}\,y{{r\, \otimes \left( {r \times {w^0}\left( y \right)} \right) + \left( {r \times {w^0}\left( y \right)} \right) \otimes r} \over {{r^{4/3}}}}} ,$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq108.png) (51)

(51)

where the denominator should be understood as r7/2+(5/6−3) = r4/3. This is our modification to CRV’s effective rule.

Now, to draw a parallel with the MuScaTS construction, let us build this stretch matrix SCRV[w0] from a continuous series of narrow filters, by decomposing it into various scales with (9) and using the linearity property (21). We thus have

![${S^{{\rm{CRV}}}}[{w^0}] = \int_{ - \infty }^{ + \infty } {{\rm{d}}\ln } \,\ell \,{S^{{\rm{CRV}}}}[w_\ell ^0],$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq109.png) (52)

(52)

but in fact, to be complete, since in CRV’s model what drives the dynamics is the full argument of the matrix exponential in (48), we also need to take into account the τCRV parameter. Here instead of this mere parameter, we introduce some scale-dependent τℓ in the scale-decomposition (52) such that

![${\tau _{{\rm{CRV}}}}{S^{{\rm{CRV}}}}[{w^0}] = \int_{ - \infty }^{ + \infty } {{\rm{d}}\ln } \,\ell \,{\tau _\ell }S[w_\ell ^0],$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq110.png) (53)

(53)

where S is the real, physical stretch (49), i.e. with a r5 denominator. Then, to reconcile the r4/3 requirement for log-correlation of (51) with the real physical r5 dependency of the stretching, we argue (pursuing the hand-wavy reasoning consisting in keeping track and matching length scales) that a power-law dependency

(54)

(54)

is adequate. Finally, using in (48) expression (53) and the scale decomposition (9) for w0, we rewrite the CRV random field in the following form, which is close to our (47),

![${w^{{\rm{CRV}}}} = \int_{ - \infty }^{ + \infty } {{\rm{d}}\ln } \,\ell \,\exp \left( {\int_{ - \infty }^{ + \infty } {{\rm{d}}\ln } \,s\,{\tau _s}S[w_s^0]} \right) \cdot w_\ell ^0,$](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq112.png) (55)

(55)

with τℓ given by (54) when the vorticity has a Kolmogorov (1941) power-law spectrum. It is in this sense that we mean that the change of power-law to enforce log-correlation in CRV could be interpreted as an integration time varying with scale, with a typical timescale τℓ ∝ ℓ11/3.

This unusual perspective on their theory enables us to explain more of the original physical grounds behind CRV’s construction. Thanks to the freedom introduced by the scale dependent stretch parameter, we can both retain the physical expression for the stretch (with 1/r5 scaling) and assimilate the generating Gaussian field as the initial vorticity (using Kolmogorov scaling instead of a white noise).

Now, comparing the above reformulations of both formalisms, we reveal three noteworthy differences. First, as can be seen in (46), we use a stretch field in the larger scales which has already been advanced, while CRV uses the initial stretch field at all scales. Second, (47) confronted to (55) shows that in our formulation the effective timescale τℓ applies to all scales above while it is local in scales in CRV. Third, the integration bounds differ: we integrate only the scales above ℓ while CRV uses the whole range of scales. In other words, our method suppresses the effects of the smaller scales deformation at a given scale. This might yield less intermittency because the smallest scales which can lead to the largest exponentiated spikes will be suppressed. On the other hand, it may be an improvement for the coherence of the resulting structures compared to Chevillard et al. (2011). Indeed, smoothing avoids strong deformations from very small scales which could result in less correlation between contiguous scales as the strongest peaks at the smallest scales might dominate every scale. In the case of the Zel’dovich Approximation, it was in fact recognised that some smoothing improved the accuracy of the approximation for a given targeted evolution time, which led to the truncated Zel’dovich Approximation (e.g. Coles et al. 1993).

Future investigations of the MuScaTS method in the 3D incompressible HD case will decide which formulation performs best. Finally, note that MuScaTS also retains both advection (neglected in CRV) and diffusion (approximated by a regularisation cut-off at small scales in CRV).

3.3 Links to the Zel'dovich multiscale approximation

In cosmology, the concept of multiscale is directly related to hierarchical formation of structures, in particular dark matter haloes. In the concordance model of large-scale structure formation, dark matter haloes are the hosts of galaxies and clusters of galaxies and their history consists of successive mergers. In this bottom-up scenario, small haloes form first and then merge together to form a population of larger haloes. The Lagrangian regions occupied by these haloes define a smoothing scale, which can itself be associated with a mass and a timescale of halo formation (Press & Schechter 1974). In this picture, mergers are equivalent to connections between Lagrangian regions.

As a result, the dynamical process at play can also be described with a multiscale approach accounting for dynamical collapse times of haloes of various masses associated with a smoothing level.

The Zel’dovich approximation (ZA) consists in a ballistic integration of initial velocities. Smoothing initial conditions to the ZA was shown to improve the solution, because it avoids the dispersion resulting from shell crossing. This led to the so-called truncated ZA (e.g. Coles et al. 1993) or higher order Lagrangian truncated perturbation theory (e.g. Bernardeau et al. 2002, and references therein), where the level of truncation is the smoothing scale. In the hierarchical formation of haloes described above, the truncated ZA (or its higher order equivalent) can be improved further by a multiscale approach : a smoothing length depending on the local position is defined. This multiscale synthesis of dark matter dynamics, has been implemented with success by different authors (see e.g. Bond & Myers 1996; Monaco et al. 2002; Stein et al. 2019). In these existing multiscale approaches, at every initial position, one finds the largest smoothing scale which does not collapse at the targeted redshift. This identifies both the smoothing length and the evolution time during which this Lagrangian region is evolved.

As mentioned in Section 2.2.4, the equations of large scale structure formation including the expansion of the Universe can be cast in our generic form (13), and we use a ballistic approximation which in this context amounts to ZA. A multiscale ZA can therefore directly emerge from our MuScaTS framework. In our MuScaTS approach, we suggest to identify the coherence time with the Press & Schechter (1974) collapse time at a given smoothing length, capped by the total time corresponding to the targeted redshift. This results in two subtle differences compared to existing ZA approaches. First, the collapse time at an intermediate scale is computed on a field which has already been advanced at the targeted redshift on the largest scales. Second, every bandpass filtered density field is evolved separately during its own timescale. This may result in different performances compared to existing methods, and it remains to be checked whether they are better or worse. However, the principle of the resulting method is still very close to the original multiscale ZA approaches.

4 Validation for 2D incompressible hydrodynamics

In the previous sections, we presented our MuScaTS framework to generate random realisations of a variety of flows. We provided a possible numerical implementation of the procedure. We also connected our synthesis to previously known generating methods in a few specific contexts.

We now turn to validate the method in the simplest possible setup: incompressible 2D hydrodynamics. Our generative procedure deforms an initial Gaussian field under the influence of the flow. We assess to what extent our synthesis is able to recover the statistics obtained when the flow evolves from an initial Gaussian field with a prescribed spectrum.

To that end, we run accurate simulations of the flow (using a pseudo-spectral code presented below in Section 4.1) starting with a large number (here, 30) of independent initial random realisations of Gaussian fields with a prescribed power-law spectrum. As remarked in the presentation of our framework above, several methodological choices need to be defined. We consider a fiducial version of our synthesis method, which we call the ‘basic’ synthesis, and introduce a few variants in Section 4.2 that span several ways of estimating the divorticity evolution and the coherence time. We quantitatively compare the performances of our syntheses first in the eyes of classical statistical indicators (Section 4.3) and second with the help of texture sensitive statistics (Section 4.4).

4.1 Reference 2D simulations

Throughout this work we use reference simulations to test our synthetic model. We briefly state here the details of our numerical implementation of a pseudo-spectral code to evolve 2D incompressible hydrodynamics.

We integrate the evolution Equation (14) on a square periodic domain. We choose the length scale normalisation such that the domain size is L = 2π and define our velocity scale such that the expectancy of the initial velocity squared is unity, namely E[〈u2〉] = 1 where E is the expectancy over all our realisations and 〈.〉 denote the average over our computational domain. Note that we do not require that the initial r.m.s. velocity is unity for every realisation, as this would destroy the Gaussianity of our initial process. The Reynolds number is hence on average ![${R_e} = E\left[ {\sqrt { < {u^2} > } } \right]L/\nu = 2\pi /\nu $](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq113.png) initially, and then decays as time proceeds while velocity is dissipated by viscous friction. Simulations are run with a pseudo-spectral method at a Courant number of 1, with a Runge-Kutta integration scheme of order 4, using a 2/3 truncation rule.

initially, and then decays as time proceeds while velocity is dissipated by viscous friction. Simulations are run with a pseudo-spectral method at a Courant number of 1, with a Runge-Kutta integration scheme of order 4, using a 2/3 truncation rule.

The initial field is chosen to be random, Gaussian with a prescribed Fourier spectrum Eu (k) ∝ kβ. More specifically, we draw a random Gaussian white noise in real space, compute the Fourier coefficients, imprint the spectrum by multiplying the resulting coefficients by Cβkβ where Cβ is chosen to comply with E[〈u2〉] = 1, and finally we get back to real space. The initial turnover timescale averaged over our realisations is hence ![$L/\sqrt {E\left[ {\left\langle {{u^2}} \right\rangle } \right]} = 2\pi $](/articles/aa/full_html/2025/11/aa53264-24/aa53264-24-eq114.png) in our units. The seed of the random noise is controlled by the integer parameter seed in the intrinsic FORTRAN random generator, which allows us to select various independent but deterministic realisations of the noise.

in our units. The seed of the random noise is controlled by the integer parameter seed in the intrinsic FORTRAN random generator, which allows us to select various independent but deterministic realisations of the noise.

The resolution is set by the number N of grid elements per side of the square domain, hence the pixel size is simply ∆x = L/N = 2π/N. The time step length is variable, defined by the Courant number parameter CFL through the relation ∆t = CFL∆x/|u|mαx. We set the Courant number to CFL = 1. The top-left panel a on Figure 3 illustrates one such initial vorticity map.

Our basic run is N = 1024 pixels aside for a viscous coefficient v = 10−4 with an initial power-law spectrum with a slope β = −3. This power-law spectrum corresponds to the power-law slope predicted in stationary 2D turbulence for the enstrophy cascade from the injection scale towards the small scales (Kraichnan 1967).

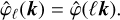

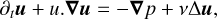

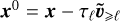

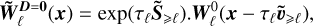

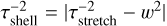

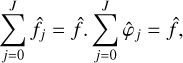

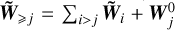

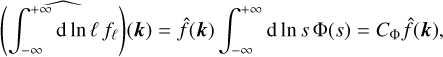

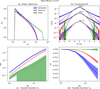

We evolve the simulation from these initial conditions during a total integration time t. The bottom-left panel e on Figure 3 illustrates the vorticity map after the initial Gaussian field has been evolved to t = 2, i.e. during about a third of a turnover timescale. Figure 4 present the same results after one turnover timescale. Clumps of vorticity quickly form, positive blobs rotate clockwise while negative ones (with w < 0) rotate counter-clockwise, displaying distinctive trailing spiral arms. Fluid in between the vorticity clumps gets sheared into filaments extremely elongated after about one turnover time (t = 6).

4.2 Basic synthesis and variants

We compare the above reference simulation to several variants of our synthesis method. We first present the parameters for our basic synthesis. It advects vorticity, using a coherence time τℓ based on the local strain time and the target ’age’ t of the simulation (see Section 2.4.2). The filtering is realised with our cosine isotropic filter (see Appendix B). We use the same resolution N, spectral index β and viscosity v as for the simulations, and we compare results at the same global evolution time t (see panels e and f in Figure 3 for t = 2 and Figure 4 at t = 6).

We now develop our investigation of the variants around our basic synthesis. In the remaining panels of Figures 3 and 4 (panels b-d and g-h), we consider several variants of a MuScaTS synthesis based on the divorticity field. In particular, we assess the relative importance of the advection and deformation (stretch in 2D) terms, both in order to prepare for the 3D case and in order to link to the previous works of CRV and Rosales & Meneveau (2006). We investigate the effect of the ordering of the advection term before or after the deformation term (see panels c and g of Figure 3). We investigate the effect of including only deformation as in CRV or only advection as in Rosales & Meneveau (2006) (see panels d and h in Figure 3). Finally, we test the effect of computing the deformation at first order instead of developing it fully through a matrix exponential (panel b in Figure 3).

Visual inspection of Figure 3 reveals that our basic synthesis (panel (f) for our synthesis on vorticity) does a rather good job at capturing the spiralling shape of the vorticity clumps, but the texture of the vorticity feels more patchy than in the simulation, specially at late times (see Figure 4). The three syntheses based on divorticity which retain both advection and deformation (panels b, c and g) seem to perform slightly less well, although the first order version of the deformation (panel b) is close to our basic synthesis (panel f). The two divorticity syntheses which skip one of these two terms (panels d and h) perform significantly less well. The stretch only case (panel d) recovers part of the filamentary texture and the advection only case (panel h) seems the worst, although the positions of the two large scale clumps of vorticity seem reproduced.

We also played with the coherence time τℓ. We tested a constant τℓ, which leads to a significant loss of realism (not shown here). And we tested a τℓ based on the local shell crossing time or the local stretch time (see Section 2.4.2), without observing significant improvement from the strain based time. Finally, we tried both the Cosine and spline filters (see Appendix B) without observing much of a difference.

Figures 3 and 4 present a simulation and a few synthesis variants for a single common initial Gaussian field. We now assess the statistical quality of each variant of our synthesis by running a reference set of simulations with 30 independent initial Gaussian conditions. In the next sections, we compute a selection of statistics on these 30 independent realisations to estimate their mean and variance. We then compare the same statistics on each variant of our syntheses generated from thirty other independent Gaussian fields. We thus get quantitative estimates of the performance of our synthesis for each choice of statistical indicators.

|

Fig. 3 Vorticity maps for various cases (see text), all based on the same original Gaussian field (displayed in panel a). The panels show the following: (a) initial conditions; (b–d) divorticity syntheses with (b) first order deformation, (c) advection then deformation, and (d) deformation only; (e) reference simulation at t = 2 (about 1/3rd turnover timescale); (f) basic synthesis for vorticity; (g, h) divorticity syntheses with (g) deformation then advection and (h) advection only. All syntheses are shown at the same time, t, as for the simulation and a coherence time based on the local strain time (See Section 2.4.2). |

4.3 Classical statistical indicators

After Kolmogorov (1941) discovered his famous power-spectrum law for 3D incompressible hydrodynamics, Kraichnan (1967) was the first to extend it to 2D incompressible hydrodynamics. He predicted that in forced 2D turbulence the velocity power spectrum should scale as k−5/3 above the injection scale (indirect energy cascade) and as k−3 below it (direct enstrophy cascade). This was later verified both in soap film experiments (Rutgers 1998; Bruneau & Kellay 2005) and in simulations (Boffetta 2007).

Intermittent corrections to these power-law behaviours were shown to be very weak in the indirect cascade (Boffetta et al. 2000). Very weak non-Gaussianities were detected in the velocity increments probability distribution functions (PDFs), whether measured in experiments (Paret & Tabeling 1998) or simulations (Boffetta et al. 2000; Pasquero & Falkovich 2002). The PDF of vorticity increments on the other hand were predicted to display exponential tails by Falkovich & Lebedev (2011). Indeed, significant deviations from Gaussianity were observed for the vorticity increments in simulations by Tan et al. (2014). As a result, we choose to focus on vorticity increments rather than velocity increments since those provide an easier measurement of intermittency compared to velocity increments.

Analogues of the exact Kármán Howarth Monin relation in 3D exist for both the indirect cascade of energy and the direct cascade of enstrophy in 2D. Eyink (1996) showed that in the indirect cascade, the energy transfer function

(56)

(56)

where brackets denote both space and ensemble averaging, has to be positive and proportional to ℓ. This is linked to slight asymmetries in the PDFs of the longitudinal velocity increments, with a bias towards positive values for δℓu|| at large values of δℓu||. On the other hand, in the direct cascade, the enstrophy transfer function

(57)

(57)

was predicted to be negative and proportional to ℓ (Paret & Tabeling 1998), see also Bernard (1999). This second result also involves asymmetries of the longitudinal velocity increments, but correlated to the vorticity increment (which is itself symmetric): it is caused by a bias for negative values of δℓu|| conditioned on large vorticity increments. In the following we examine both the energy and enstrophy transfer functions ℱu and ℱw as probes of these second order behaviours of the increments PDFs.

The above results were obtained at steady state in driven turbulence. However, here we deal with decaying turbulence, so none of these results may hold. Nevertheless, we use these classical indicators, power spectra, increments PDFs and transfer functions, as quantitative measurements to assess how our syntheses perform compared to actual simulations.

|

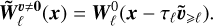

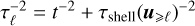

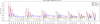

Fig. 5 Classical statistics averaged over 30 realisations for (a) power spectrum, (b) vorticity increments (for lags of 1, 4, and 32 pixels), (c) the energy transfer function, ℱu, and (d) the enstrophy transfer function, ℱw. Green is for the initial conditions (of the syntheses), red for the MuScaTS syntheses (basic run), and blue for the simulations at time t = 2. Shaded areas give the one-sigma error around the mean over the 30 independent realisations (i.e. mean plus or minus standard deviation divided by |

4.3.1 Power spectra

In panel a of Figure 5, we compare the average power spectra over all 30 realisations (reference simulations in blue and synthesis in red, while initial conditions are in green). Both the slope in the inertial range power-law behaviour and the power spectrum shape in the dissipation range seem equally well recovered. On these figures, the shaded area give the error on the mean as estimated from the dispersion over our 30 realisations. It should be stressed that these error estimates are similar between the simulations and the synthesis. In other words, the synthesis is able to reproduce not only the average, but also the variance of the power spectrum across the 30 members of our statistical ensemble. After about one turnover timescale, both the power spectrum and its variance are still well accounted for (see Figure 6).

4.3.2 Increments statistics