| Issue |

A&A

Volume 704, December 2025

|

|

|---|---|---|

| Article Number | A205 | |

| Number of page(s) | 15 | |

| Section | Numerical methods and codes | |

| DOI | https://doi.org/10.1051/0004-6361/202556785 | |

| Published online | 15 December 2025 | |

Mitigating incoherent excess variance in high-redshift 21 cm observations with multi-output cross-Gaussian process regression

1

Kapteyn Astronomical Institute, University of Groningen,

P.O. Box 800,

9700

AV

Groningen,

The Netherlands

2

LUX, Observatoire de Paris, Université PSL, CNRS, Sorbonne Université,

75014

Paris,

France

3

ASTRON,

PO Box 2,

7990

AA

Dwingeloo,

The Netherlands

4

INAF – Istituto di Radioastronomia,

Via P. Gobetti 101,

40129

Bologna,

Italy

5

School of Science, Jiangxi University of Science and Technology,

Ganzhou

341000,

PR

China

6

ARCO (Astrophysics Research Center), Department of Natural Sciences, The Open University of Israel,

1 University Road, PO Box 808,

Ra’anana

4353701,

Israel

★ Corresponding author: This email address is being protected from spambots. You need JavaScript enabled to view it.

Received:

8

August

2025

Accepted:

20

October

2025

Systematic effects that limit the achievable sensitivity of current low-frequency radio telescopes to the 21 cm signal are among the foremost challenges in observational 21 cm cosmology. The standard approach to retrieving the 21 cm signal from radio interferometric data separates it from bright astrophysical foregrounds by exploiting their spectrally smooth nature, in contrast to the finer spectral structure of the 21 cm signal. Contaminants exhibiting rapid frequency fluctuations, on the other hand, are difficult to separate from the 21 cm signal using standard techniques and the power from these contaminants contributes to low-level systematics that can limit our ability to detect the 21 cm signal. Many of these low-level systematics are incoherent across multiple nights of observation, resulting in an incoherent excess variance above the thermal noise sensitivity of the instrument. In this work, we developed a method called cross-covariance Gaussian process regression (cross-GPR) that exploits the incoherence of these systematics to separate them from the 21 cm signal, which remains coherent across multiple nights of observation. We developed and demonstrated the technique on synthetic signals in a general setting, then we applied it to gridded interferometric visibility cubes. We performed realistic simulations of visibility cubes containing foregrounds, 21 cm signal, noise, and incoherent systematics. The simulations show that the method can successfully separate and subtract incoherent contributions to the excess variance. Furthermore, its advantages over standard techniques become more evident when the spectral behavior of the contaminants resembles that of the 21 cm signal. Simulations performed on a variety of 21 cm signal shapes also reveal that the cross-GPR approach can subtract incoherent contributions to the excess variance, without suppressing the 21 cm signal. The codes underlying this article are publicly available in the Python library crossgp and will soon be integrated into the LOFAR and NenuFAR foreground removal and power spectrum estimation framework ps_eor.

Key words: methods: numerical / methods: statistical / techniques: interferometric / cosmology: observations / dark ages, reionization, first stars

© The Authors 2025

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. This email address is being protected from spambots. You need JavaScript enabled to view it. to support open access publication.

1 Introduction

One of the most promising direct probes of the high-redshift Universe is the 21 cm signal from neutral hydrogen. The three-dimensional spatial fluctuations of the 21 cm brightness temperature at high redshifts can be probed by low-frequency radio interferometers. Several instruments, such as GMRT1 (Paciga et al. 2013), LOFAR2 (Patil et al. 2017; Gehlot et al. 2019, 2020; Mertens et al. 2020, 2025; Ceccotti et al. 2025b), MWA3 (Ewall-Wice et al. 2016; Barry et al. 2019; Li et al. 2019; Trott et al. 2020; Yoshiura et al. 2021; Nunhokee et al. 2025), PAPER4 (Kolopanis et al. 2019), HERA5 (Abdurashidova et al. 2022; Adams et al. 2023), OVRO-LWA6 (Eastwood et al. 2019; Garsden et al. 2021), and NenuFAR7 (Munshi et al. 2024, 2025a) have attempted (or are currently attempting) to detect the 21 cm signal from cosmic dawn and the epoch of reionisation, two critical periods in the high-redshift Universe. The collecting area of the current generation of experiments only allows them to probe the 21 cm signal statistically in reasonable observing hours, by measuring the signal power spectrum. However, due to the steep technical challenges of recovering the faint signal from below orders of magnitude brighter foregrounds, none of these instruments have yet detected it; however, they have been able to set increasingly stringent upper limits on the 21 cm signal power spectrum.

Even if the thermal noise has been reduced to below the 21 cm signal level, there are other obstacles that prevent us from utilising the full sensitivity of the instrument necessary for detecting the 21 cm signal fluctuations. The foremost amongst them are the astrophysical foregrounds, due to Galactic and extragalactic emission. Low-frequency radio foregrounds are several orders of magnitude brighter than the expected background 21 cm signal. This creates a very steep calibration challenge, imposing stringent precision requirements on the calibration algorithms. The difficulty is amplified by the fact that there is usually poor prior knowledge of the instrumental primary beam, particularly near nulls and sidelobes far from the target field (Chokshi et al. 2024; Brackenhoff et al. 2025). The residual power from off-axis sources has been identified as one of the main causes of the excess variance above the thermal noise experienced by phase-tracking instruments such as LOFAR, NenuFAR, and MWA. For HERA, on the other hand, cross-coupling systematics have been identified as the primary limiting factor (Kern et al. 2019, 2020). There are multiple additional contaminants, such as ionospheric distortions (Vedantham & Koopmans 2015, 2016; Brackenhoff et al. 2024), incomplete sky models (Barry et al. 2016; Höfer et al. 2025), inaccurate foreground source modeling (Ceccotti et al. 2025a), and low-level radio frequency interference (Offringa et al. 2019a; Wilensky et al. 2019), which could pose systematic limitations to our ability to utilise the full sensitivity of the instruments.

The biggest challenge in observational 21 cm cosmology, however, is foreground mitigation. Broadly, foreground mitigation strategies fall into two categories: avoidance and subtraction. Foreground avoidance techniques exclude the foreground wedge, a region in the (k⊥, k∥) space where foregrounds are predominantly confined (Datta et al. 2010; Vedantham et al. 2012; Morales et al. 2012; Munshi et al. 2025c), while estimating the power spectrum of the 21 cm signal. Foreground subtraction techniques, on the other hand, use the spectral smoothness of foregrounds as priors in signal separation algorithms to model and remove foreground contamination from the data. Several foreground subtraction approaches, both parametric and non-parametric, have been proposed, among which Gaussian process regression (GPR; Mertens et al. 2018, 2024) has emerged as one of the most effective methods (Bonaldi et al. 2025). GPR models the visibility data as the sum of Gaussian processes (GPs) representing foregrounds, the 21 cm signal, noise, and any other component in the data. The spectral coherence of each component is encoded through its respective frequency-frequency covariance function, and the best-fit foregrounds are subtracted from the data.

The presently explored approaches to signal separation in 21 cm cosmology rely on the relative spectral smoothness of foregrounds, compared to the 21 cm signal, and do not attempt to subtract contaminants with rapid spectral fluctuations. However, several contaminating effects may not exhibit a smooth spectral signature, such as terrestrial or satellite radio frequency interference (Munshi et al. 2025b; Di Vruno et al. 2023; Grigg et al. 2023; Gehlot et al. 2024), the turbulent ionosphere causing calibration errors on longer baselines to leak into shorter baselines (Brackenhoff et al. 2024), and calibration errors arising from primary beam inaccuracies near nulls (Gan et al. 2022, 2023; Brackenhoff et al. 2025), all of which can contribute to excess variance in the data. Foreground mitigation algorithms based on spectral smoothness cannot easily separate these contributions from the 21 cm signal, leading to excess variance in the data that limits instrumental sensitivity. Many of these contaminants are incoherent across nights due to their stochastic nature, so their contribution averages out, with the resulting variance decreasing as more nights are combined. The excess variance observed by instruments such as LOFAR and NenuFAR has indeed been seen to integrate down over time (Mertens et al. 2020, 2025; Munshi et al. 2025a), suggesting that a significant portion is incoherent, manifesting as excess variance above the theoretical thermal sensitivity of the instrument. In contrast, the observed 21 cm signal within the main lobe of the primary beam remains coherent across multiple nights. This contrast presents an opportunity: the night-to-night incoherence can be used as a property that can help the separation of the contributions of these contaminants from the 21 cm signal contributions to the visibility data.

In many applications of GPR, we encounter multiple correlated outputs, such as repeated measurements and related signal components, which has motivated the development of multi-output GPs that aim to jointly model such outputs, incorporating information on how the outputs covary (e.g., Alvarez et al. 2012). The development of such models was largely driven by the field of geostatistics, where they are known as co-kriging methods. A central goal of these frameworks is to model the cross-covariance, which encodes how different groups of signals are correlated across outputs. Several frameworks have been proposed to model correlations between multiple outputs by expressing each output as a combination of shared latent processes, with the goal of capturing the cross-covariance structure between outputs. For example, the intrinsic co-regionalisation model (Goovaerts 1997) is the simplest, assuming that all outputs are scaled versions of the same latent function. The semiparametric latent factor model (SLFM; Teh et al. 2005) extends this by allowing each output to depend on a linear combination of multiple shared latent functions, each modeled as a GP with a distinct covariance. The linear model of co-regionalisation (LMC; Journel & Huijbregts 1976; Goovaerts 1997) generalises both by allowing the outputs to depend not only on multiple GP covariances, but also on multiple independent samples from each. Describing such cross-covariance structures between the outputs of GPs is well-suited to modeling visibility data from multiple nights of radio interferometric observations, where signal components may exhibit coherent or incoherent behavior across nights.

In this paper, we extend the frequency-frequency-only GPR method introduced in Mertens et al. (2018) to also account for temporal night-to-night (in)coherence. The extended model captures not only the covariance within an individual dataset, but also the cross-covariance between pairs of datasets, enabling it to capture the (in)coherence of specific components across multiple datasets. We apply this method to signal separation in 21 cm cosmology, using the temporal coherence of the 21 cm signal across multiple nights of observation to separate it from time-incoherent contributions to the excess variance. Subtracting such incoherent contributions along with the foreground component could bring us much closer to the instrumental thermal noise sensitivity and reduce the risk of suppressing the 21 cm signal, which is coherent between nights. Additionally, compared to the analysis by Acharya et al. (2024b) where a bias correction was performed for the excess, such a subtraction not only reduces the bias introduced by the excess variance but also decreases its sample variance, which often dominates the upper limits in 21 cm analyses.

The paper is organised as follows. Section 2 gives an overview of GPR and its application to signal separation. In Sect. 3, we describe the new method of separating signals based on coherence in a general setting and then set it in the context of 21 cm signal extraction. Section 4 demonstrates the method on simulated radio interferometric visibility cubes. In Sect. 5, we present the main results, and discuss the applicability and limitations of the current implementation of the method. Section 6 summarises the main conclusions from this paper. We summarise the main mathematical notations used in this paper in Table 1.

Summary of scientific notation.

2 Gaussian process regression

Gaussian processes (GPs) are widely used tools for modeling signals in noisy data. They are particularly useful in situations where the underlying functional form of the signal is unknown, but statistical properties such as spectral, temporal, or spatial smoothness can be described. A GP is a probability distribution over functions that can model a set of data points such that the values of a function f evaluated at any finite collection of inputs follow a multivariate normal distribution (Rasmussen & Williams 2006). Specifically, the function values f = f(x), evaluated at a vector of inputs x, are distributed as

![$\[\mathbf{f} \sim \mathcal{G} \mathcal{P}(m(\mathbf{x}), \mathbf{K})=\mathcal{N}(m(\mathbf{x}), \mathbf{K}(\mathbf{x}, \mathbf{x})),\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq1.png) (1)

(1)

where m(x) is the mean function, defined component-wise as m(xi) = 𝔼[f(xi)], usually assumed to be zero. K(x, x) is the covariance matrix with entries Kij given by a positive-definite covariance κ(xi, xj) between function values at the points xi and xj.

2.1 GPR for signal separation

In real situations, the observed data d carries an additive noise n at each set of inputs, such that d = f + n where ![$\[\mathbf{n} \sim \mathcal{N}\left(0, \sigma_{n}^{2} \mathbf{I}_{p}\right)\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq2.png) ,

, ![$\[\sigma_{n}^{2}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq3.png) being the noise variance and Ip ∈ ℝp×p being an identity matrix8 (where p is the number of elements in x). GPR is particularly well-suited for signal separation tasks, where the observed data are modeled as a sum of multiple components. Following the framework developed by Mertens et al. (2018), the total covariance function can then be expressed as a sum of individual GP covariance functions, each capturing the structure of a distinct signal component. If we consider M components, fi, with corresponding covariances, Ki, the data vector and the GP model covariance are given by

being the noise variance and Ip ∈ ℝp×p being an identity matrix8 (where p is the number of elements in x). GPR is particularly well-suited for signal separation tasks, where the observed data are modeled as a sum of multiple components. Following the framework developed by Mertens et al. (2018), the total covariance function can then be expressed as a sum of individual GP covariance functions, each capturing the structure of a distinct signal component. If we consider M components, fi, with corresponding covariances, Ki, the data vector and the GP model covariance are given by

![$\[\begin{aligned}\mathbf{d} & =\sum_{i=1}^M \mathbf{f}_i+\mathbf{n}, \\\mathbf{K} & =\sum_{i=1}^M \mathbf{K}_i.\end{aligned}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq4.png) (2)

(2)

The joint probability distribution of the observed data and the predicted values of the k–th component is then given by

![$\[\left[\begin{array}{c}\mathbf{d} \\\mathbf{f}_k\end{array}\right] \sim \mathcal{N}\left(\left[\begin{array}{l}0 \\0\end{array}\right],\left[\begin{array}{cc}\mathbf{K}+\sigma_n^2 \mathbf{I}_p & \mathbf{K}_k \\\mathbf{K}_k & \mathbf{K}_k\end{array}\right]\right).\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq5.png) (3)

(3)

GPR estimates the predictive distribution for fk conditioned on d, which is given by

![$\[\mathbf{f}_k \mid \mathbf{d}, \mathbf{x} \sim \mathcal{N}\left(\mathbb{E}\left(\mathbf{f}_k\right), \operatorname{cov}\left(\mathbf{f}_k\right)\right).\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq6.png) (4)

(4)

The predictive distribution is described by the mean 𝔼(fk) and the covariance cov(fk), which have the following expressions:

![$\[\begin{aligned}\mathbb{E}\left(\mathbf{f}_k\right) & =\mathbf{K}_k\left[\mathbf{K}+\sigma_n^2 \mathbf{I}_p\right]^{-1} \mathbf{d}, \\\operatorname{cov}\left(\mathbf{f}_k\right) & =\mathbf{K}_k-\mathbf{K}_k\left[\mathbf{K}+\sigma_n^2 \mathbf{I}_p\right]^{-1} \mathbf{K}_k.\end{aligned}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq7.png) (5)

(5)

Given we have complete knowledge of the covariance, these equations enable us to predict function values for a specific signal component.

The covariance kernels must be estimated from the information in the data itself. The standard approach is to define analytical covariance functions, which are described by a set of hyperparameters that control properties such as the variance and the coherence scale. Matern functions (Matérn 1960) form a class of such commonly used covariance functions in GPR and are defined as

![$\[\kappa_{\text {Matern }}\left(x_i, x_j\right)=\sigma^2 \frac{2^{1-\eta}}{\Gamma(\eta)}\left(\frac{\sqrt{2 \eta} r}{\ell}\right)^\eta K_\eta\left(\frac{\sqrt{2 \eta} r}{\ell}\right).\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq8.png) (6)

(6)

Here, σ2 is the variance, ℓ is the coherence scale, r = |xi − xj| is the separation in x, Γ is the Gamma function and Kη is the modified Bessel function of the second kind. The parameter η specifies the functional form, with some commonly used values being η = ∞: radial basis function (RBF) or Gaussian, η = 2.5: Matern 5/2, η = 1.5: Matern 3/2 and η = 0.5: exponential. The variance describes the span of the function values, while the coherence scale describes how quickly the correlation between the function values at a pair of points in x drops as we increase the distance between the points. The full covariance of the data then becomes a parametrised function over the set of hyperparameters θ, and the best-fit covariance function can be estimated from the data itself in a Bayesian framework. The vector θ is composed of M sets of hyperparameters, each set describing Ki. Incorporating priors on the hyperparameter estimates p(θ), the posterior distribution of the hyperparameters is obtained from Bayes’ theorem as

![$\[\log~ p(\boldsymbol{\theta} \mid \mathbf{d}, \mathbf{x}) \propto ~\log~ p(\mathbf{d} \mid \mathbf{x}, \boldsymbol{\theta})+\log~ p(\boldsymbol{\theta}).\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq9.png) (7)

(7)

Here log p(d ~ x, θ) is the log-marginal-likelihood (LML) function. For a Gaussian likelihood, the LML can be calculated explicitly and is given by

![$\[\begin{aligned}\log~ p(\mathbf{d} \mid \mathbf{x}, \boldsymbol{\theta})=-\frac{1}{2} \mathbf{d}^{\top}\left(\mathbf{K}+\sigma_{\mathrm{n}}^2 \mathbf{I}_p\right)^{-1} \mathbf{d} & -\frac{1}{2} ~\log~ \left|\mathbf{K}+\sigma_{\mathrm{n}}^2 \mathbf{I}_p\right| \\& -\frac{p}{2} ~\log~ 2 \pi.\end{aligned}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq10.png) (8)

(8)

The covariance model selection is thus performed in a Bayesian framework, by maximising the LML. The posterior distribution of the hyperparameters can be sampled using a Markov chain Monte Carlo (MCMC) routine. The covariance kernels evaluated at a sample θ in the posterior distribution can now be inserted into Eq. (5) and the function values for the k–th component can be sampled from this predictive distribution, thus allowing us to separate out the k-th signal component and determine its full covariance matrix.

2.2 GPR for foreground subtraction in 21 cm cosmology

In the context of 21 cm cosmology, GPR utilises the spectral smoothness of foregrounds to separate them from the 21 cm signal, which has a small frequency coherence scale. In this section, we describe this approach to subtracting foregrounds, developed by Mertens et al. (2018), which has been applied to LOFAR data by Gehlot et al. (2019, 2020); Mertens et al. (2020, 2025); Ceccotti et al. (2025b) and NenuFAR data by Munshi et al. (2024, 2025a). The visibility data are modeled as a sum of functions describing the foregrounds (ffg), 21 cm signal (f21), and noise (n). Often, it is insufficient to describe the data with just these components, and an additional excess variance component (fex) is needed to account for this residual variance from systematic effects. GPR is applied to the gridded complex visibility cube in the uvv space, along the frequency direction. Thus, in this specific case, x corresponds to the frequency channels. All real and imaginary visibility components across uv cells are assumed to follow the same GPR model, while the kernel hyperparameters are inferred jointly from the full dataset. This modeling was further extended by Mertens et al. (2024), where the covariance was explicitly formulated as a function of baseline length. In this framework, the visibility data d can be written as

![$\[\mathbf{d}=\mathbf{f}_{\mathrm{fg}}+\mathbf{f}_{21}+\mathbf{f}_{\mathrm{ex}}+\mathbf{n}.\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq11.png) (9)

(9)

Assuming the components to be uncorrelated, the covariance of the GP model (K) is then the sum of the covariances of the components

![$\[\mathbf{K}=\mathbf{K}_{\mathrm{fg}}+\mathbf{K}_{21}+\mathbf{K}_{\mathrm{ex}},\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq12.png) (10)

(10)

where Kfg, K21, and Kex are the covariances of the foreground, 21 cm signal, and excess components, respectively. The foreground covariance kernel and the priors on its lengthscale are chosen in such a way as to reflect the spectral smoothness of foregrounds. For the 21 cm signal, the covariance kernel should have a corresponding power spectrum that can describe a wide range of 21 cm signal power spectra. Since analytical covariance functions may struggle to capture the full diversity of 21 cm signal statistics, Mertens et al. (2024) and Acharya et al. (2024a) introduced a learned kernel approach to GPR-based foreground subtraction. In this method, a variational autoencoder (VAE) is trained on simulations to encode 21 cm power spectrum shapes. The resulting latent space defines a low-dimensional, physically informed parametrisation of the 21 cm covariance kernel, whose parameters are then treated as hyperparameters and inferred during the GPR. The excess covariance is used to capture components with rapid spectral fluctuations, which cannot be confidently distinguished from the 21 cm signal component based on the frequency behavior. The excess component is thus not subtracted from the data, resulting in 21 cm signal power spectrum limits exceeding those expected from thermal noise only. We note that using Matern kernels for the foregrounds and excess components assumes that these functions can adequately describe the covariance of the components in the data. Results by the DOTSS-21cm team in the SKA data challenge 3a (Bonaldi et al. 2025) are a recent demonstration that Matern kernels used in ML-GPR can describe foregrounds reasonably well. Acharya et al. (2024b) and Ceccotti et al. (2025b) showed that subtracting the full GP model from the data makes it largely consistent with noise, implying that the GP covariance model can describe the excess variance in actual data reasonably well. Future analyses should ideally replace both foreground and excess kernels with more physics-driven covariances, once the dominant source of the excess variance is identified.

The predictive distribution of any GP component corresponding to a sample in the posterior distribution of θ can now be estimated. Comparing the function components and the GP covariance model described in Eqs. (9) and (10) to those in Eq. (2), the predictive mean and covariance of the foreground component can be obtained using Eq. (5) as

![$\[\begin{aligned}\mathbb{E}\left(\mathbf{f}_{\mathrm{fg}}\right) & =\mathbf{K}_{\mathrm{fg}}(\boldsymbol{\theta})\left[\mathbf{K}(\boldsymbol{\theta})+\sigma_n^2 \mathbf{I}_p\right]^{-1} \mathbf{d}, \\\operatorname{cov}\left(\mathbf{f}_{\mathrm{fg}}\right) & =\mathbf{K}_{\mathrm{fg}}(\boldsymbol{\theta})-\mathbf{K}_{\mathrm{fg}}(\boldsymbol{\theta})\left[\mathbf{K}(\boldsymbol{\theta})+\sigma_n^2 \mathbf{I}_p\right]^{-1} \mathbf{K}_{\mathrm{fg}}(\boldsymbol{\theta}).\end{aligned}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq13.png) (11)

(11)

Foreground realisations generated from this predictive distribution can now be subtracted from the data cube to obtain foreground-subtracted residual cubes:

![$\[\mathbf{r}^j=\mathbf{d}-\mathbf{f}_{\mathrm{fg}}^j(\boldsymbol{\theta}),\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq14.png) (12)

(12)

where rj and ![$\[\mathbf{f}_{\mathrm{fg}}^{j}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq15.png) are the j–th residual cube and foreground realisation, respectively. The foreground subtracted power spectrum and uncertainties can be estimated from an ensemble of such residual data cubes, corresponding to a set of θ sampled from its posterior distribution. The power spectrum uncertainties estimated from this ensemble of residual cubes will then account for the spread of the posterior distribution in the hyperparameter space, as well as the measurement errors.

are the j–th residual cube and foreground realisation, respectively. The foreground subtracted power spectrum and uncertainties can be estimated from an ensemble of such residual data cubes, corresponding to a set of θ sampled from its posterior distribution. The power spectrum uncertainties estimated from this ensemble of residual cubes will then account for the spread of the posterior distribution in the hyperparameter space, as well as the measurement errors.

In this GP model, among the various components of the visibility data in Eq. (9), the foregrounds and the 21 cm signal are expected to be coherent across visibility cubes from multiple nights, while the noise is incoherent. The excess component, however, may exhibit both coherent and incoherent contributions across datasets. A GPR framework that operates on multiple datasets can explicitly incorporate this distinction to disentangle the coherent and incoherent contributions to the data. We develop such a framework in the next section.

3 GPR for multiple datasets

We first describe the framework for performing GPR on multiple datasets in a general setting. We consider two uncorrelated datasets d1 and d2 given by d1 = f1 + n1 and d2 = f2 + n2, with f1 and f2 being the corresponding function values and n1 and n2 being the noise realisations. We assume that both functions are described by the same covariance function, K, but with different sets of hyperparameters, θ1 and θ2. The joint GP model for the datasets is then given by

![$\[\left[\begin{array}{l}\mathbf{d}_1 \\\mathbf{d}_2\end{array}\right] \sim \mathcal{N}\left(\left[\begin{array}{l}0 \\0\end{array}\right],\left[\begin{array}{cc}\mathbf{K}\left(\boldsymbol{\theta}_1\right)+\sigma_1^2 \mathbf{I}_p & 0 \\0 & \mathbf{K}\left(\boldsymbol{\theta}_2\right)+\sigma_2^2 \mathbf{I}_p\end{array}\right]\right),\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq16.png) (13)

(13)

where ![$\[\sigma_{1}^{2}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq17.png) and

and ![$\[\sigma_{2}^{2}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq18.png) are the noise variances of the two datasets. This model is equivalent to having two separate GP models for the two datasets, and the distributions of θ1 and θ2 can be obtained in the same manner as described in Sect. 2.1, either by performing two separate GPR runs, or by performing a single GPR run with the data and covariances given by Eq. (13).

are the noise variances of the two datasets. This model is equivalent to having two separate GP models for the two datasets, and the distributions of θ1 and θ2 can be obtained in the same manner as described in Sect. 2.1, either by performing two separate GPR runs, or by performing a single GPR run with the data and covariances given by Eq. (13).

3.1 Separating coherent and incoherent components with cross-GPR

Given the framework of Eq. (13), we can now make one further assumption, namely, that the hyperparameters describing the two datasets are also the same, that is, θ1 = θ2 = θ. This implies that both datasets can be described by the same covariance kernel.

![$\[\left[\begin{array}{l}\mathbf{d}_1 \\\mathbf{d}_2\end{array}\right] \sim \mathcal{N}\left(\left[\begin{array}{l}0 \\0\end{array}\right],\left[\begin{array}{cc}\mathbf{K}(\boldsymbol{\theta})+\sigma_1^2 \mathbf{I}_p & 0 \\0 & \mathbf{K}(\boldsymbol{\theta})+\sigma_2^2 \mathbf{I}_p\end{array}\right]\right).\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq19.png) (14)

(14)

Performing a GPR with this model now links the hyperparameters of the two datasets together, and gives a single posterior distribution of θ. However, since the off-diagonal blocks in the covariance matrix are zero, the correlation of the component realisations between the two datasets is still not modeled. The predictive mean estimated from Eq. (5) will still, in general, be different for the two datasets, since the data vector, d, now has the two different data column vectors, d1 and d2, stacked along the rows.

We next consider a situation where the data are a combination of a coherent and an incoherent component. The coherent component has the same realisation across the two datasets, while the incoherent component has different realisations across the two datasets. Defining fcoh as the coherent component and finc as the incoherent component, the function values f1 and f2 corresponding to the two datasets can be written as

![$\[\begin{aligned}& \mathbf{f}_1=\mathbf{f}_{\mathrm{coh}}+\mathbf{f}_{\mathrm{inc}}^1, \\& \mathbf{f}_2=\mathbf{f}_{\mathrm{coh}}+\mathbf{f}_{\mathrm{inc}}^2,\end{aligned}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq20.png) (15)

(15)

where ![$\[\mathbf{f}_{\text {inc}}^{1}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq21.png) and

and ![$\[\mathbf{f}_{\text {inc}}^{2}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq22.png) are the realisations of the incoherent component for the two datasets. We note that this is a specific case of the LMC framework described by Alvarez et al. (2012). We describe this connection explicitly in Appendix A.

are the realisations of the incoherent component for the two datasets. We note that this is a specific case of the LMC framework described by Alvarez et al. (2012). We describe this connection explicitly in Appendix A.

Our aim is to include information that one component is coherent while the other is not, within the joint covariance matrix, to improve the separation of the two components. We continue with the assumption that the hyperparameters of both the coherent component (θcoh) and the incoherent component (θinc) are linked between the two datasets. We let Kcoh and Kinc be the covariances of the coherent and incoherent components, respectively. The shared covariance function can be written as

![$\[\mathbf{K}=\mathbf{K}_{\mathrm{coh}}\left(\boldsymbol{\theta}_{\mathrm{coh}}\right)+\mathbf{K}_{\mathrm{inc}}\left(\boldsymbol{\theta}_{\mathrm{inc}}\right).\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq23.png) (16)

(16)

This goes into the diagonal blocks of the covariance in Eq. (14). However, as long as the off-diagonal blocks are zero, the information about the coherence of one component and the incoherence of another is not included in the covariance structure, and the two datasets are solved for independently. We next include this information by estimating the cross-covariances between the two datasets. The cross-covariance is then given by

![$\[\begin{aligned}\operatorname{cov}\left(\mathbf{f}_1, \mathbf{f}_2\right)= & \mathbb{E}\left[\left(\mathbf{f}_{\mathrm{coh}}+\mathbf{f}_{\mathrm{inc}}^1\right) \cdot\left(\mathbf{f}_{\mathrm{coh}}+\mathbf{f}_{\mathrm{inc}}^2\right)\right] \\& -\mathbb{E}\left[\mathbf{f}_{\mathrm{coh}}+\mathbf{f}_{\mathrm{inc}}^1\right] \cdot \mathbb{E}\left[\mathbf{f}_{\mathrm{coh}}+\mathbf{f}_{\mathrm{inc}}^2\right] \\= & \mathbf{K}_{\mathrm{coh}}, \text { since } \mathbb{E}\left[\mathbf{f}_{\mathrm{inc}}^1 \cdot \mathbf{f}_{\mathrm{inc}}^2\right]=0.\end{aligned}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq24.png) (17)

(17)

Equation (14) thus gets modified to

![$\[\begin{aligned}& {\left[\begin{array}{l}\mathbf{d}_1 \\\mathbf{d}_2\end{array}\right] \sim \mathcal{N}\left(\left[\begin{array}{l}0 \\0\end{array}\right],\left[\begin{array}{cc}\mathbf{K}_{\mathrm{coh}}+\mathbf{K}_{\mathrm{inc}}+\sigma_1^2 \mathbf{I}_p & \mathbf{K}_{\mathrm{coh}} \\\mathbf{K}_{\mathrm{coh}} & \mathbf{K}_{\mathrm{coh}}+\mathbf{K}_{\mathrm{inc}}+\sigma_2^2 \mathbf{I}_p\end{array}\right]\right)} \\& \equiv \mathbf{d} \sim \mathcal{N}\left(0, \mathbf{K}_{\mathrm{full}}\right).\end{aligned}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq25.png) (18)

(18)

Thus, the GP model now correctly includes the information that one of the components of the model is coherent between the two datasets while the other is incoherent.

The predictive distribution of the coherent and incoherent components corresponding to a sample in the posterior distribution of θ = [θcoh, θinc] is then given by

![$\[\begin{aligned}& \quad\mathbb{E}\left(\mathbf{f}_{\mathrm{coh}}\right)=\left(\mathbf{1}_2^{\top} \otimes \mathbf{K}_{\mathrm{coh}}\left(\boldsymbol{\theta}_{\mathrm{coh}}\right)\right) \mathbf{K}_{\mathrm{full}}(\boldsymbol{\theta})^{-1} \mathbf{d}, \\& \operatorname{cov}\left(\mathbf{f}_{\mathrm{coh}}\right)= \mathbf{K}_{\mathrm{coh}}\left(\boldsymbol{\theta}_{\mathrm{coh}}\right)-\left(\mathbf{1}_2^{\top} \otimes \mathbf{K}_{\mathrm{coh}}\left(\boldsymbol{\theta}_{\mathrm{coh}}\right)\right) \mathbf{K}_{\mathrm{full}}(\boldsymbol{\theta})^{-1} \\&\qquad\qquad\qquad\qquad\qquad \times\left(\mathbf{1}_2 \otimes \mathbf{K}_{\mathrm{coh}}\left(\boldsymbol{\theta}_{\mathrm{coh}}\right)\right);\end{aligned}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq26.png) (19a)

(19a)

![$\[\begin{aligned}\mathbb{E}\left(\binom{\mathbf{f}_{\text {inc }}^1}{\mathbf{f}_{\text {inc }}^2}\right) & =\left(\mathbf{I}_2 \otimes \mathbf{K}_{\text {inc }}\left(\boldsymbol{\theta}_{\text {inc }}\right)\right) \mathbf{K}_{\text {full }}(\boldsymbol{\theta})^{-1} \mathbf{d}, \\\operatorname{cov}\left(\binom{\mathbf{f}_{\text {inc }}^1}{\mathbf{f}_{\text {inc }}^2}\right) & =\mathbf{I}_2 \otimes \mathbf{K}_{\text {inc }}\left(\boldsymbol{\theta}_{\text {inc }}\right)-\left(\mathbf{I}_2 \otimes \mathbf{K}_{\text {inc }}\left(\boldsymbol{\theta}_{\text {inc }}\right)\right) \mathbf{K}_{\text {full }}(\boldsymbol{\theta})^{-1}\\&\qquad\qquad\qquad\quad\quad\times\left(\mathbf{I}_2 \otimes \mathbf{K}_{\mathrm{inc}}\left(\boldsymbol{\theta}_{\mathrm{inc}}\right)\right),\end{aligned}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq27.png) (19b)

(19b)

where 12 ∈ ℝ2 is a two-element column vector of ones and I2 ∈ ℝ2×2 is a two-by-two identity matrix. We thus get a single estimate of the coherent component for both datasets and two separate estimates for the incoherent component.

3.2 Demonstration on synthetic datasets

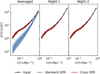

We next demonstrate how explicitly including the off-diagonal cross-covariance blocks improves our ability to separate out contributions from coherent and incoherent components in the data. For this purpose, we generated two synthetic datasets. Each dataset contains 50 signal realisations, where each signal is composed of two components: a coherent component shared between the two datasets, and an incoherent component that is independent between them. We performed GPR twice, first with the block diagonal GP model of Eq. (14) which assumes the two datasets to be independent, but with linked hyperparameters9, and second with the full GP model of Eq. (18) which includes information about the coherence and incoherence of the two components. We sampled the hyperparameter posterior distribution using the MCMC routine emcee10, and the hyperparameter estimate with the highest posterior probability was used to calculate the predictive mean and covariance of both the coherent and incoherent components, using Eqs. (19a) and (19b) respectively. We kept the prior range relatively wide and fixed between the two tests. The tests were repeated for a large number of choices of pairs of kernels. We show two such cases:

|

Fig. 1 Impact of including cross-covariances in GPR in the separation of coherent and incoherent components demonstrated on synthetic data. The left column shows the case where the coherent and incoherent covariance kernels are distinct, while the right column shows the case of the coherent and incoherent components having similar covariances. The corner plots in the top show the posterior distribution of the hyperparameters for the block diagonal approach (in blue) and the full covariance approach (in red). The four plots at the bottom show the recovery of a single realisation of the coherent component, for the block diagonal and full covariance approaches, respectively. |

Dissimilar kernels: Coherent Matern 3/2 (ℓ = 0.5, σ2 = 0.1) + Incoherent Exponential (ℓ = 2, σ2 = 0.1).

Similar kernels: Coherent Exponential (ℓ = 1, σ2 = 0.1) + Incoherent Exponential (ℓ = 0.5, σ2 = 0.1).

The results are shown in Fig. 1. The dissimilar and similar kernel examples are shown in the left and right columns, respectively. The corner plots in the top show the posterior distribution of the hyperparameters for both block diagonal and full-covariance approaches. For the dissimilar kernels (in the left column), we find that both approaches work relatively well and the posterior distributions recover the input hyperparameter values. We note that the full-covariance posteriors are slightly better constrained. However, for similar kernels (in the right column), we find that the block diagonal approach has wide degenerate posteriors, while the full covariance approach provides much better constrained and less degenerate posteriors around the input hyperparameter values. This is because, in the dissimilar kernels case, the two components are distinct enough to be separable by performing a standalone GPR on each dataset, and including the information that one kernel is coherent while the other is not does not yield significant additional benefits. However, for similar kernels, where the covariance structure along x is not distinct enough to distinguish the two components, including additional information through the cross-covariance blocks offers significant benefits in recovering the input hyperparameter values. The middle and bottom row shows the predictive mean and standard deviation for a single realisation of the coherent component, obtained at the hyperparameter estimate with the highest posterior. The full covariance approach yields a single coherent component for the two datasets, while the diagonal approach yields one coherent component for each of the two datasets. We find that for the dissimilar kernels, though the full covariance approach can describe the input signal better, the block diagonal approach can also recover the input to some extent. However, for the similar kernels case, the block diagonal approach does significantly worse than the full covariance approach.

3.3 Generalisation to N datasets

The formalism developed in Sect. 3.1 can be naturally extended to a situation where we have a large number of datasets, where the data can be expressed as a sum of coherent and incoherent components. We let N be the number of datasets. Equation (18) then can be generalised as

![$\[\mathbf{d} \sim \mathcal{N}\left(0, \mathbf{J}_N \otimes \mathbf{K}_{\mathrm{coh}}+\mathbf{I}_N \otimes \mathbf{K}_{\mathrm{inc}}+D \otimes \mathbf{I}_p\right),\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq28.png) (20)

(20)

where ![$\[\mathbf{J}_{N}=\mathbf{1}_{N} \mathbf{1}_{N}^{\top} \in \mathbb{R}^{N \times N}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq29.png) is an all-ones matrix, IN ∈ ℝN×N is an identity matrix, and

is an all-ones matrix, IN ∈ ℝN×N is an identity matrix, and ![$\[D=\operatorname{diag}\left(\sigma_{1}^{2}, \ldots, \sigma_{N}^{2}\right) \in \mathbb{R}^{N \times N}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq30.png) is a diagonal matrix containing the noise variances of individual datasets. For a sample in the posterior distribution of θ, the predictive mean and covariance of the coherent component can be calculated from Eq. (19a), with 12 replaced by 1N. Similarly, the N incoherent components can be obtained from Eq. (19b), with the column vector of the function values containing N sets of fi and I2 replaced by IN.

is a diagonal matrix containing the noise variances of individual datasets. For a sample in the posterior distribution of θ, the predictive mean and covariance of the coherent component can be calculated from Eq. (19a), with 12 replaced by 1N. Similarly, the N incoherent components can be obtained from Eq. (19b), with the column vector of the function values containing N sets of fi and I2 replaced by IN.

The computational cost of this method, however, increases steeply with the number of datasets N. The full covariance matrix Kfull(θ) has dimensions Np × Np. Computing the log marginal likelihood involves a matrix inversion which scales as 𝒪(N3p3). This rapidly becomes a bottleneck as N increases. Since we assume all nights to have the same covariance kernel, a Toeplitz or band-diagonal structure could be employed in the full covariance matrix to speed up the inversion. Alternative formalisms can also include populating the full covariance of N datasets with blocks for pairs of datasets along the diagonal, in which only the (in)coherence between the 2ith and (2i + 1) th datasets is used in performing GPR. However, since we are essentially performing a separate GPR for each pair of datasets, the computational cost reduces to 𝒪(Np3). Situations where N > 2 have not been explored in this study and are left for future work.

3.4 Cross-GPR in 21 cm signal extraction

In this section, we describe the cross-covariance approach in the context of gridded visibility cubes. As described in Sect. 2.2, in 21 cm cosmology analyses, there are often components in the data in addition to the foregrounds, 21 cm signal, and noise, commonly referred to as excess components. This excess is usually described by a covariance kernel with rapid spectral fluctuations. As a result, the excess component is not subtracted from the data, since it carries a risk of subtracting the 21 cm signal itself. However, in multiple 21 cm cosmology analyses (Mertens et al. 2020, 2025; Munshi et al. 2025a), it has been seen that integrating multiple nights of observation not only reduces the thermal noise but also the excess variance. Cross-coherence analyses performed with both LOFAR (Mertens et al. 2025) and NenuFAR (Munshi et al. 2025a) also show that a large portion of the excess component is incoherent across multiple nights of observation, particularly within the EoR window. This incoherent excess variance could have multiple possible origins, such as calibration errors, bright source residuals due to ionospheric effects, and transient radio frequency interference. The 21 cm signal field, on the other hand, is coherent over multiple nights of observations.

The cross-GPR formalism developed in Sect. 3.1 thus maps ideally onto this problem. The 21 cm signal, foregrounds, and any coherent portion of the excess constitute the coherent components, and the noise and incoherent portion of the excess constitute the incoherent components. The coherent excess could arise from effects such as cross-coupling between stations, persistent RFI, and primary beam model inaccuracies, and is, conservatively, not subtracted from the data. The gridded visibility cubes for two nights of observations d1 and d2 are then given by

![$\[\begin{aligned}& \mathbf{d}_1=\mathbf{f}_{\mathrm{fg}}+\mathbf{f}_{21}+\mathbf{f}_{\mathrm{ex}^{\text {coh }}}+\mathbf{f}_{\mathrm{ex}^{\text {inc }}}^1+\mathbf{n}_1, \\& \mathbf{d}_2=\underbrace{\mathbf{f}_{\mathrm{fg}}+\mathbf{f}_{21}+\mathbf{f}_{\mathrm{ex}^{\text {coh }}}}_{\text {Coherent }}+\underbrace{\mathbf{f}_{\mathrm{ex}^{\text {inc }}}^2+\mathbf{n}_2.}_{\text {Incoherent }}\end{aligned}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq31.png) (21)

(21)

Here, fexcoh is the coherent excess component and ![$\[\mathbf{f}_{\text {ex }}^{1}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq32.png) and

and ![$\[\mathbf{f}_{\mathrm{ex}^{\text {inc }}}^2\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq33.png) are the incoherent excess components from the two nights. Following Eq. (18), the joint GP model covariance of the two nights is then given by

are the incoherent excess components from the two nights. Following Eq. (18), the joint GP model covariance of the two nights is then given by

![$\[\mathbf{K}_{\text {model }}=\left[\begin{array}{cc}\mathbf{K}_{\mathrm{fg}}+\mathbf{K}_{21}+\mathbf{K}_{\mathrm{ex}}^{\mathrm{coh}}+\mathbf{K}_{\mathrm{ex}}^{\mathrm{inc}} & \mathbf{K}_{\mathrm{fg}}+\mathbf{K}_{21}+\mathbf{K}_{\mathrm{ex}}^{\mathrm{coh}} \\\mathbf{K}_{\mathrm{fg}}+\mathbf{K}_{21}+\mathbf{K}_{\mathrm{ex}}^{\mathrm{coh}} & \mathbf{K}_{\mathrm{fg}}+\mathbf{K}_{21}+\mathbf{K}_{\mathrm{ex}}^{\mathrm{coh}}+\mathbf{K}_{\mathrm{ex}}^{\mathrm{inc}}\end{array}\right],\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq34.png) (22)

(22)

in which the off-diagonal blocks receive contributions only from the coherent components. The joint distribution of the two data cubes is then

![$\[\begin{aligned}& {\left[\begin{array}{l}\mathbf{d}_1 \\\mathbf{d}_2\end{array}\right] \sim \mathcal{N}\left(\left[\begin{array}{l}0 \\0\end{array}\right], \mathbf{K}_{\text {model }}+\left[\begin{array}{cc}\sigma_1^2 \mathbf{I}_p & 0 \\0 & \sigma_2^2 \mathbf{I}_p\end{array}\right]\right),} \\& \equiv \mathbf{d} \sim \mathcal{N}\left(0, \mathbf{K}_{\text {full }}\right),\end{aligned}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq35.png) (23)

(23)

and θ contains the hyperparameters for all coherent as well as incoherent components. Since the aim is to subtract the foreground component as well as the incoherent excess component from both data cubes, we estimate the predictive distribution of the sum of the foreground and incoherent excess components for the two data cubes. The mean and covariance of this distribution, for the two nights of observations, are given by

![$\[\begin{aligned}\mathbb{E}\left(\left[\begin{array}{l}\mathbf{f}_{\mathrm{fg}}+\mathbf{f}_{\mathrm{ex}^{\text {inc}}}^1 \\\mathbf{f}_{\mathrm{fg}}+\mathbf{f}_{\mathrm{ex}^{\text {inc}}}^2\end{array}\right]\right) & =\mathbf{K}_{\mathrm{fg}, \mathrm{ex}^{\text {inc}}}(\boldsymbol{\theta}) \mathbf{K}_{\mathrm{full}}(\boldsymbol{\theta})^{-1} \mathrm{~d}, \\\operatorname{cov}\left(\left[\begin{array}{l}\mathbf{f}_{\mathrm{fg}}+\mathbf{f}_{\mathrm{ex}^{\text {inc}}}^1 \\\mathbf{f}_{\mathrm{fg}}+\mathbf{f}_{\mathrm{ex}^{\text {inc}}}^2\end{array}\right]\right) & =\mathbf{K}_{\mathrm{fg}, \mathrm{ex}^{\text {inc}}}(\boldsymbol{\theta})-\mathbf{K}_{\mathrm{fg}, \mathrm{ex}^{\text {inc}}}^{\text {inc}}(\boldsymbol{\theta}) \mathbf{K}_{\mathrm{full}}(\boldsymbol{\theta})^{-1} \mathbf{K}_{\mathrm{fg}, \mathrm{ex}^{\text {inc}}}(\boldsymbol{\theta}), \\\text {where}\quad \mathbf{K}_{\mathrm{fg}, \mathrm{ex}^{\text {inc}}}(\boldsymbol{\theta}) & =\left[\begin{array}{cc}\mathbf{K}_{\mathrm{fg}}(\boldsymbol{\theta})+\mathbf{K}_{\mathrm{ex}}^{\text {inc }}(\boldsymbol{\theta}) & \mathbf{K}_{\mathrm{fg}}(\boldsymbol{\theta}) \\\mathbf{K}_{\mathrm{fg}}(\boldsymbol{\theta}) & \mathbf{K}_{\mathrm{fg}}(\boldsymbol{\theta})+\mathbf{K}_{\mathrm{ex}}^{\text {inc }}(\boldsymbol{\theta})\end{array}\right].\end{aligned}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq36.png) (24)

(24)

This distribution can be used to generate realisations for the sum of foreground and incoherent excess components for both nights. These are subtracted from the input data cubes to yield the foreground and incoherent excess subtracted residual cubes, expressed as

![$\[\begin{aligned}& \mathbf{r}_1^j=\mathbf{d}_1-\mathbf{f}_{\mathrm{fg}}^j(\boldsymbol{\theta})-\mathbf{f}_{\mathrm{ex}}^{1, j}(\boldsymbol{\theta}), \\& \mathbf{r}_2^j=\mathbf{d}_2-\mathbf{f}_{\mathrm{fg}}^j(\boldsymbol{\theta})-\mathbf{f}_{\mathrm{ex}}^{2, j}(\boldsymbol{\theta}),\end{aligned}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq37.png) (25)

(25)

where ![$\[\mathbf{r}_{1}^{j}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq38.png) and

and ![$\[\mathbf{r}_{2}^{j}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq39.png) are the j–th residual cubes for the two nights, with

are the j–th residual cubes for the two nights, with ![$\[\mathbf{f}_{\text {fg}}^{j}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq40.png) being the coherent foreground component realisation, and

being the coherent foreground component realisation, and ![$\[\mathbf{f}_{\mathrm{ex}^{\text {inc}}}^{1, j}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq41.png) and

and ![$\[\mathbf{f}_{\mathrm{ex}^{\text {inc}}}^{2, j}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq42.png) being the incoherent excess component realisations for the two nights. The power spectrum and uncertainties for either the individual night data cubes or the combined cube are estimated from an ensemble of such residual cubes, produced by sampling the hyperparameter posterior distribution and the predictive distribution of the GP components, as described at the end of Sect. 2.2. We note that not only the foregrounds and incoherent excess, but any component or sum of components can be recovered similarly, by placing the coherent component covariances in both diagonal and off-diagonal blocks and the incoherent component covariances in only the diagonal blocks.

being the incoherent excess component realisations for the two nights. The power spectrum and uncertainties for either the individual night data cubes or the combined cube are estimated from an ensemble of such residual cubes, produced by sampling the hyperparameter posterior distribution and the predictive distribution of the GP components, as described at the end of Sect. 2.2. We note that not only the foregrounds and incoherent excess, but any component or sum of components can be recovered similarly, by placing the coherent component covariances in both diagonal and off-diagonal blocks and the incoherent component covariances in only the diagonal blocks.

4 Application to simulated data

In this section, we demonstrate the cross-GPR approach on simulated visibility cubes. Such visibility cubes in uvv space are obtained by gridding the visibility data obtained from radio interferometric observations (Offringa et al. 2019b). In the standard approach, gridded data cubes from multiple nights are combined by computing a weighted average (using the uvv grid weights), and GPR is performed on this averaged data cube to subtract smooth spectrum foregrounds.

We note that performing two independent GPR runs on the two datasets, as described by Eq. (13), is not equivalent to performing a single GPR on the averaged data. Instead, throughout Sect. 3, we have considered the case where the GP model hyperparameters are linked between the two datasets, following Eq. (14), and this is equivalent to a single frequency-only GPR performed on the averaged data. In Sect. 4.1, we compare the cross-GPR approach against a frequency-only GPR performed on the averaged data. In Sect. 4.2, in addition to this, we also investigate the case of independent frequency-only GPR runs performed separately on the individual nights. We note that, unlike standard approaches used in LOFAR and NenuFAR analyses, where the excess component is retained, in our tests, the excess component was subtracted to assess its impact.

4.1 Separating 21 cm signal and incoherent excess

We consider a realistic scenario of an observation with the radio telescope NenuFAR. For this purpose, we simulated gridded visibility cubes of two nights of observations. The data cube contains coherent foreground power, which is made up of an intrinsic foreground component representing smooth spectrum foregrounds within the field of view and a mode-mixing component that represents off-axis source foreground power. We note that here the mode-mixing component is assumed to be night-to-night coherent if the two nights are observed in the same LST range. The excess variance component was assumed to be incoherent across the two nights. We included a 21 cm signal component with a variance equal to 0.1% of the data variance, making sure it is above the thermal noise variance. This higher signal-to-noise ratio was used for illustration and comparison purposes only. The 21 cm visibility cube was generated from the VAE trained on 21 cm simulations at z = 20 used by Munshi et al. (2024). Finally, we simulated incoherent noise cubes for the two nights. For all components except the 21 cm signal component, the kernel function, and their associated lengthscale and variance values are inspired by those obtained by Munshi et al. (2025a) from actual data11. The GP covariance model is summarised in Table 2. We report all σ2 parameters in log10 scale, and all ℓ parameters in linear scale. Power spectra were estimated from the visibility cubes using ps_eor12. Figure 2 shows the cylindrical power spectra of the different components given as input to the simulation. The foreground component has high power at low k∥ and has a sharp drop in power with increasing k∥13. The 21 cm and excess components are much weaker, and their power drop-off at high k∥is much slower. It is evident from the cylindrical power spectra that the 21 cm signal and excess component covariances are similar and might be difficult to disentangle based on spectral smoothness alone. The noise power for each night is approximately an order of magnitude below the peak excess power.

Given the simulated data, we next compare the performance of the cross-GPR approach developed in this paper to the more standard GPR approach, which only uses the frequency-frequency covariance within a single visibility cube. The averaged data and noise cubes obtained from these simulated visibility cubes for the two nights were given as input in the standard approach to sample the hyperparameter posterior distribution, and estimate the residuals and power spectra. We refer to this as the “standard GPR” case. Next, the data and noise cubes for both nights were given as input to the cross-GPR formalism developed in this paper to sample the hyperparameter posterior distribution, and estimate the residuals for each night, and corresponding power spectra for individual nights and averaged nights. We refer to this as the “Cross-GPR” case. In both cases, we used the same covariance functions, priors, and MCMC parameters. It was also ensured that the MCMC runs for both the standard and cross-GPR cases converged, in order to obtain an accurate representation of the posterior distribution in each case.

The top panel of Fig. 3 shows the posterior distribution of the hyperparameters for standard GPR (in blue) and cross-GPR (in red). The recovery of the intrinsic (![$\[\ell_{\text {int}}, \sigma_{\text {int}}^{2}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq61.png) ) and mode mixing (

) and mode mixing (![$\[\ell_{\text {mix}}, \sigma_{\text {mix}}^{2}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq62.png) ) foreground hyperparameters is comparable in both standard and cross-GPR. This is because they have distinct spectral behaviour, which is sufficient to disentangle them, as was seen in Sect. 3.2. We note, however, that the mode-mixing hyperparameter recovery, unlike in the cross-GPR case, is slightly biased in the standard GPR case. The remaining hyperparameters describe the 21 cm signal

) foreground hyperparameters is comparable in both standard and cross-GPR. This is because they have distinct spectral behaviour, which is sufficient to disentangle them, as was seen in Sect. 3.2. We note, however, that the mode-mixing hyperparameter recovery, unlike in the cross-GPR case, is slightly biased in the standard GPR case. The remaining hyperparameters describe the 21 cm signal ![$\[\left(x_{1}, x_{2}, \sigma_{21}^{2}\right)\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq63.png) and the excess (ℓex,

and the excess (ℓex, ![$\[\sigma_{\mathrm{ex}}^{2}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq64.png) ). Here, cross-GPR performs dramatically better in recovering the input values compared to standard GPR. This could be expected since the excess and 21 cm signal components have similar spectral behavior (Fig. 2) and are thus difficult to disentangle based on the spectral behavior alone, as seen in Sect. 3.2 for similar kernels. Specifically, we find that in the standard GPR,

). Here, cross-GPR performs dramatically better in recovering the input values compared to standard GPR. This could be expected since the excess and 21 cm signal components have similar spectral behavior (Fig. 2) and are thus difficult to disentangle based on the spectral behavior alone, as seen in Sect. 3.2 for similar kernels. Specifically, we find that in the standard GPR, ![$\[\sigma_{21}^{2}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq65.png) is underestimated while

is underestimated while ![$\[\sigma_{\mathrm{ex}}^{2}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq66.png) is overestimated. This suggests that the excess component absorbs a portion of the 21 cm signal. The corner plot in the top panel of Fig. 3 exhibits such a degeneracy between

is overestimated. This suggests that the excess component absorbs a portion of the 21 cm signal. The corner plot in the top panel of Fig. 3 exhibits such a degeneracy between ![$\[\sigma_{21}^{2}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq67.png) and

and ![$\[\sigma_{\mathrm{ex}}^{2}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq68.png) and also between

and also between ![$\[\sigma_{21}^{2}\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq69.png) and x2. This is not the case in cross-GPR, where the recovery is unbiased. Thus, including information about the coherence or incoherence of components between nights through the cross-GPR approach can break the degeneracies between the hyperparameters, particularly for components with similar spectral behaviour, such as the 21 cm signal and excess components. We note that the cross-GPR method makes no additional assumptions beyond those of standard GPR, except for acknowledging that one component of the data is incoherent, which allows it to break degeneracies and more effectively separate the signals.

and x2. This is not the case in cross-GPR, where the recovery is unbiased. Thus, including information about the coherence or incoherence of components between nights through the cross-GPR approach can break the degeneracies between the hyperparameters, particularly for components with similar spectral behaviour, such as the 21 cm signal and excess components. We note that the cross-GPR method makes no additional assumptions beyond those of standard GPR, except for acknowledging that one component of the data is incoherent, which allows it to break degeneracies and more effectively separate the signals.

The bottom row of Fig. 3 shows how well the different components are recovered by comparing their spherical power spectra. The shaded bands represent the 1σ and 2σ uncertainties that account for the spread of the hyperparameter posterior distribution. The foreground power spectrum is recovered by both the standard and cross-GPR approach relatively well (the blue and red lines are not clearly visible in the plot since they overlap). However, standard GPR underestimates the 21 cm signal component power and overestimates the excess power, because of the respective biases in their corresponding variances. Both the 21 cm signal and the excess component power spectra are recovered well in the cross-GPR approach. Cross-GPR allows us to estimate the recovered power spectra of incoherent components for each night, and this is shown in the two rightmost panels. The input power spectra are seen to be recovered well here as well.

Finally, we subtracted both the foreground and excess components from the data cubes that were used as the input to the GPR. We note that the upper limits analyses performed by Mertens et al. (2020), Mertens et al. (2025), Munshi et al. (2024), Munshi et al. (2025a), and Ceccotti et al. (2025b) using standard GPR do not subtract the excess component, because in the frequency-only approach, it cannot be safely disentangled from the 21 cm signal. For standard GPR, this was done on the averaged data. For cross-GPR, the components for each night obtained using Eq. (24) were subtracted from the respective nights and averaged to obtain the ensemble of averaged residual data cubes, from which the power spectra and uncertainties were estimated following Sect. 3.4. Figure 4 shows the resulting spherical power spectra with uncertainties, compared with the respective input power spectra. A standard GPR oversubtracts the excess component and thus underestimates the residual power spectrum. The recovery in cross-GPR, on the other hand, is unbiased, in both the averaged data and in individual nights.

Covariance model used in GPR with linked hyperparameters for the two nights.

|

Fig. 2 Cylindrical power spectra of the different input components constituting the simulated visibility cubes. The foreground and 21 cm signal components are coherent and have a common realisation for both nights. The excess and noise components are incoherent and have different realisations for the two nights. |

4.2 Dependence on 21 cm signal power spectrum shapes

In this section, we assess how the recovery of the hyperparameter distributions and the power spectra depends on the degree of similarity between the shape of the 21 cm signal and the excess component power spectra. We repeated the simulations described in the previous section for a series of 21 cm signal shapes. We sampled 25 different shapes of the signal from the trained VAE, corresponding to 5 × 5 grid of uniformly distributed points in the two-dimensional latent space (x1, x2 ∈ [−2, 2]). For each such signal shape, a 21 cm signal visibility cube was generated and added to the coherent foregrounds visibility cube and incoherent excess and noise cubes for both nights. The standard and cross-GPR were then run in the same manner as described in Sect. 4.1. In addition to running standard GPR on the averaged data and cross-GPR on the set of two nights, here we also investigated the case where standard GPR is run on each of the nights separately.

In Fig. 5, we show the hyperparameter recovery results, in the form of peak normalised histograms for each hyperparameter marginalised over all 25 simulations. The input true value of the hyperparameter was subtracted before computing the histogram for each signal shape so that it is centered at zero for unbiased recovery. The histograms for the three sets of standard GPR runs are shown in different shades of blue, while the histogram for cross-GPR hyperparameter recovery is shown in red. We find that the foreground hyperparameters are relatively well recovered by both standard and cross-GPR, agreeing with the results of Sect. 4.1. Also, similar to what is seen in Fig. 3, the mode-mixing foreground variance is slightly less biased in the cross-GPR approach. However, we find that the x1 parameter is poorly recovered in both the standard and cross-GPR approaches, likely due to the weak dependence of the trained VAE on x1. This was also observed in Fig. 3, where the range of x1 is much larger than that of x2. The recovery of x2 is also suboptimal in both cases, although the cross-GPR results appear slightly better constrained around zero. The 21 cm signal variance recovery is, however, significantly improved in the cross-GPR approach, where the standard GPR underestimates the 21 cm signal variance, indicating that a portion of the 21 cm signal is absorbed by the excess component. The most significant improvement is seen in the recovery of both the lengthscale and variance of the incoherent excess component. The cross-GPR approach accurately recovers the input values, while the standard GPR results remain largely unconstraining. This is expected, as the explicit information on the nature of the variance provided to the model is precisely the incoherence of the excess component.

Once the hyperparameter posterior distribution was obtained, the foregrounds and excess were subtracted from the input data, and the residual power spectrum was estimated in the manner described in Sect. 4.1, for each signal shape. Finally, a bias correction of the thermal noise was made by subtracting the thermal noise power spectrum. The resulting power spectrum uncertainties should bracket the input 21 cm power spectrum for unbiased recovery. To estimate this, we computed the z-score value as a function of k for each simulation, defined as

![$\[\mathrm{z}\text{-}\operatorname{score}(k)=\frac{\Delta_{\mathrm{rec}}^2(k)-\Delta_{\mathrm{inp}}^2(k)}{\sigma_{\Delta_{\mathrm{rec}}^2}(k)},\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq70.png) (26)

(26)

where ![$\[\Delta_{\text {rec}}^{2}(k)\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq71.png) and

and ![$\[\sigma_{\Delta_{\text {rec}}^{2}}(k)\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq72.png) are the residual noise bias corrected power spectrum and 1σ uncertainty, while

are the residual noise bias corrected power spectrum and 1σ uncertainty, while ![$\[\Delta_{\text {inp}}^{2}(k)\]$](/articles/aa/full_html/2025/12/aa56785-25/aa56785-25-eq73.png) is the input 21 cm signal power spectrum. A negative value of the z-score indicates 21 cm signal loss. The z-scores computed in this manner are shown in Fig. 6. The top row shows the dependence of the z-score on k. Each box describes the spread of z-scores for the 25 selected input signal shapes. The gray bands indicate the 1σ and 2σ ranges. We find that the standard GPR residuals systematically underestimate the 21 cm signal at most k modes, with a large number of z-score values below −2, indicating suppression of the signal beyond the 2σ level. At some large k modes, for GPR performed on individual nights, a significant overestimation is also seen with z-score values well above 2, hence the bias is scale-dependent. The cross-GPR does not show such a signal loss, with the recovery at most k modes being well constrained in the 2σ bands independent of the k mode. A few simulations show a positive bias at small k, but it is still lower than the standard GPR results, and such outliers can be expected given the number of simulations. We note that such an overestimation is not as concerning as an underestimation, particularly in the context of setting upper limits on the power spectrum.

is the input 21 cm signal power spectrum. A negative value of the z-score indicates 21 cm signal loss. The z-scores computed in this manner are shown in Fig. 6. The top row shows the dependence of the z-score on k. Each box describes the spread of z-scores for the 25 selected input signal shapes. The gray bands indicate the 1σ and 2σ ranges. We find that the standard GPR residuals systematically underestimate the 21 cm signal at most k modes, with a large number of z-score values below −2, indicating suppression of the signal beyond the 2σ level. At some large k modes, for GPR performed on individual nights, a significant overestimation is also seen with z-score values well above 2, hence the bias is scale-dependent. The cross-GPR does not show such a signal loss, with the recovery at most k modes being well constrained in the 2σ bands independent of the k mode. A few simulations show a positive bias at small k, but it is still lower than the standard GPR results, and such outliers can be expected given the number of simulations. We note that such an overestimation is not as concerning as an underestimation, particularly in the context of setting upper limits on the power spectrum.

To more precisely investigate the dependence of the signal recovery on the input 21 cm signal shape, the bottom panel of Fig. 6 illustrates the dependence of the z-score on the signal shape. For each signal shape, we computed the mean z-score over k. The normalised power spectrum representing each signal shape is shown with its color indicating the corresponding value of the mean z-score. As a reference, we also show a normalised power spectrum shape of the excess component as dashed black lines. In standard GPR, a large number of signal shapes are significantly suppressed. We find that the signals that are the most suppressed have a power spectrum shape similar to the reference excess component. This supports the findings of Sects. 3.2 and 4.1, which show that signals similar to the excess are more difficult to recover in the standard approach. In contrast, the cross-GPR approach more effectively separates such signals. In fact, the trend appears reversed: signals resembling the excess component show a slight positive bias, suggesting that the 21 cm component absorbs part of the excess. However, a slight positive bias is less concerning as long as we are in the regime of setting upper limits on the 21 cm power spectrum, since the signal is not absorbed. None of the signals show a significant negative bias, with all z-scores closer to zero and lying within the 2σ bounds. The absolute values of the z-score, averaged over both k bins and shapes of the signal are 4.6, 4.8, and 5.7 for night 1, night 2, and the averaged night simulations, respectively, with a standard GPR. The corresponding values obtained with cross-GPR are 1.6, 1.6, and 1.5, indicating a significant decrease in the absolute bias.

|

Fig. 3 Comparison of the performance of standard and cross-GPR in recovering the input components. The standard and cross-GPR results are shown in blue and red colors, respectively. Top: corner plot showing the posterior distribution of the GPR hyperparameters. The input hyperparameter values are indicated with black lines. Bottom: input and recovered spherical power spectra of the different components. |

|

Fig. 4 Power spectra of the residual data after the foregrounds and excess components have been subtracted. The standard and cross-GPR results are shown in blue and red colors, respectively. |

|

Fig. 5 Recovery of the input hyperparameters for simulations performed for a variety of 21 cm signal shapes. The peak-normalised histograms marginalised over all signal shapes, computed after subtracting the input values, are shown. The different panels correspond to the different hyperparameters. The results from the three runs of standard GPR are shown in different shades of blue, while the cross-GPR results are shown in red. A vertical line is plotted at Recovered = Input to indicate perfect recovery. |

|

Fig. 6 Distribution of z-scores for simulations performed for a variety of 21 cm signal shapes. The two columns show the results for standard and cross-GPR. The top panel shows the dependence of the z-score on k, for the different nights. The gray bands indicate the 1σ and 2σ levels. The bottom panel shows the dependence of the mean z-score on the 21 cm signal shape. The normalised power spectra for the different signal shapes are shown with colors indicating the mean z-score. The shape of the excess component power spectrum is indicated in each panel with dashed black lines. |

5 Discussion

In this section, we outline the key features of the novel cross-GPR method developed in this paper and assess its current limitations. We also discuss its implications for future analyses in 21 cm cosmology.

5.1 Main features

We developed a generalised framework that explicitly incorporates (in)coherence between specific signal components across multiple datasets to disentangle them using GPR. Our method extends the single-dataset GPR formulation by introducing cross-covariances between multiple datasets to encode whether a given component is coherent or incoherent. Our simulations demonstrate that this additional dimension significantly improves our ability to separate signals with similar spectral behavior.

This is particularly relevant in 21 cm cosmology, where signal recovery is complicated due to the presence of foregrounds and various systematics, some of which might not exhibit the spectral smoothness that is required by standard frequency-only GPR approaches to separate them from the 21 cm signal. In the context of 21 cm cosmology, the multiple datasets in the cross-GPR approach correspond to different nights of observations, where the foregrounds and 21 cm signal are coherent across nights, but a portion of the contributions to the excess variance are not. The night-to-night (in)coherence used in this method effectively provides an additional signal subspace, orthogonal to spectral smoothness. Our simulations show that this cross-GPR approach can break degeneracies between the coherent 21 cm signal and the incoherent excess variance. This enables us to more confidently subtract incoherent contributions to the excess variance, without suppressing the cosmological 21 cm signal. The framework retains the flexibility of standard GPR, including the use of physically informed kernels and machine-learned 21 cm signal covariances, while adding another discriminator, the night-to-night (in)coherence.

5.2 Implications for 21 cm cosmology analyses

Multiple interferometers attempting a statistical detection of the 21 cm signal suffer from excess variance above the thermal noise, which typically has a small frequency coherence scale that cannot be reliably separated from the 21 cm signal. Even though the incoherent portion of this excess variance integrates down as multiple nights of observation are averaged, it prevents us from exploiting the full sensitivity of the instrument if left in the data. This framework provides a robust algorithm for subtracting such incoherent contributions to the excess variance. Such a subtraction removes modeled realisations of the incoherent component (and foregrounds) from the data itself, thus reducing both its bias and sample variance. If the excess variance after foreground subtraction is primarily incoherent, this approach can significantly improve the sensitivity to the 21 cm signal by bringing the residuals closer to the thermal noise sensitivity. Additionally, the method offers a new diagnostic that enables us to analyze the coherence properties of residuals in visibility cubes across nights. This can provide additional insights into the nature of the dominant systematics in a given instrument.

Building on the frequency-frequency GPR framework already in use for instruments such as LOFAR and NenuFAR, this method operates on gridded visibility cubes, making it directly applicable to any phase tracking interferometer such as LOFAR, NenuFAR, MWA, and the upcoming SKA14 (Dewdney et al. 2009), which employ the reconstructed power spectrum approach. Additionally, this method is general and can also be extended to HI intensity mapping experiments, or drift-scan 21 cm cosmology instruments such as HERA, which employ the delay spectrum approach to estimate the 21 cm power spectrum (Parsons et al. 2012). For delay spectrum approaches, cross-GPR could extend the GPR applications on calibrated visibilities from a given LST bin, as performed by Ghosh et al. (2020), to data from multiple nights at the same LST or across sets of redundant baselines. A key next step is thus to apply this to real observational data, which is particularly straightforward for instruments such as LOFAR and NenuFAR, which already use standard GPR in their data processing pipelines. This could lead to deeper and robust upper limits on the 21 cm signal power spectrum with these instruments.

5.3 Limitations and future improvement