| Issue |

A&A

Volume 703, November 2025

|

|

|---|---|---|

| Article Number | A222 | |

| Number of page(s) | 25 | |

| Section | Cosmology (including clusters of galaxies) | |

| DOI | https://doi.org/10.1051/0004-6361/202453461 | |

| Published online | 25 November 2025 | |

Hydrogen intensity mapping with MeerKAT: Preserving cosmological signal by optimising contaminant separation⋆

1

INAF – Osservatorio Astronomico di Trieste, Via G.B. Tiepolo 11, 34131 Trieste, Italy

2

IFPU – Institute for Fundamental Physics of the Universe, Via Beirut 2, 34151 Trieste, Italy

3

Instituto de Física de Cantabria (IFCA), CSIC-Univ. de Cantabria, Avda. de los Castros s/n, E-39005 Santander, Spain

4

Jodrell Bank Centre for Astrophysics, Department of Physics & Astronomy, The University of Manchester, Manchester M13 9PL, UK

5

Department of Physics and Astronomy, University of the Western Cape, Robert Sobukwe Road, Cape Town 7535, South Africa

6

Shanghai Astronomical Observatory, Chinese Academy of Sciences, 80 Nandan Road, Shanghai 200030, China

7

Instituto de Astrofisica e Ciências do Espaço, Universidade do Porto CAUP, Rua das Estrelas, PT4150-762 Porto, Portugal

8

Institute of Astronomy, Madingley Road, Cambridge CB3 0HA, UK

9

Liaoning Key Laboratory of Cosmology and Astrophysics, College of Sciences, Northeastern University, Shenyang 110819, China

10

Institute for Astronomy, University of Edinburgh, Royal Observatory, Blackford Hill, Edinburgh EH9 3HJ, UK

11

Higgs Centre for Theoretical Physics, School of Physics and Astronomy, University of Edinburgh, Edinburgh EH9 3FD, UK

12

Observatoire de la Côte d’Azur, Laboratoire Lagrange, Bd de l’Observatoire, CS 34229, 06304 Nice cedex 4, France

⋆⋆ Corresponding author: This email address is being protected from spambots. You need JavaScript enabled to view it.

Received:

16

December

2024

Accepted:

24

August

2025

Removing contaminants is a delicate, yet crucial step in neutral hydrogen (H I) intensity mapping and often considered the technique’s greatest challenge. Here, we address this challenge by analysing H I intensity maps of about 100 deg2 at redshift z ≈ 0.4 collected by the MeerKAT radio telescope, an SKA Observatory (SKAO) precursor, with a combined 10.5-hour observation. Using unsupervised statistical methods, we removed the contaminating foreground emission and systematically tested, step-by-step, some common pre-processing choices to facilitate the cleaning process. We also introduced and tested a novel multiscale approach: the data were redundantly decomposed into subsets referring to different spatial scales (large and small), where the cleaning procedure was performed independently. We confirm the detection of the H I cosmological signal in cross-correlation with an ancillary galactic data set, without the need to correct for signal loss. In the best set-up we achieved, we were able to constrain the H I distribution through the combination of its cosmic abundance (ΩH I) and linear clustering bias (bH I) up to a cross-correlation coefficient (r). We measured ΩH IbH Ir = [0.93 ± 0.17] × 10−3 with a ≈6σ confidence, which is independent of scale cuts at both edges of the probed scale range (0.04 ≲ k ≲ 0.3 h Mpc−1), corroborating its robustness. Our new pipeline has successfully found an optimal compromise in separating contaminants without incurring a catastrophic signal loss. This development instills an added degree of confidence in the outstanding science we can deliver with MeerKAT on the path towards H I intensity mapping surveys with the full SKAO.

Key words: methods: data analysis / methods: statistical / cosmology: observations / large-scale structure of Universe

© The Authors 2025

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. This email address is being protected from spambots. You need JavaScript enabled to view it. to support open access publication.

1. Introduction

Remarkably promising yet challenging to detect, mapping the cosmos with the fluctuations in 21-cm radiation from neutral hydrogen (H I) is set to revolutionise the study of the Universe’s large-scale structure (Bharadwaj et al. 2001; Chang et al. 2008; Loeb & Wyithe 2008). The excellent redshift resolution of intensity maps covering vast areas enables us to characterise the structures’ expansion history and growth, providing constraints on its dark energy, dark matter, and neutrino mass properties (Bull et al. 2015; Villaescusa-Navarro et al. 2017; Carucci 2018; Obuljen et al. 2018; Witzemann et al. 2018; Berti et al. 2022).

However, at the same frequencies of the redshifted 21-cm line, other astrophysical sources, such as our Galaxy, shine with considerably higher intensities (by four to five orders of magnitude), adding gigantic foregrounds to the sought-after signal (Ansari et al. 2012; Alonso et al. 2014). That huge dynamic range between the cosmological signal and the foregrounds makes any tiny miscalibration or instrumental systematics produce catastrophic leakages, mixing up signals, and rendering their separation rather difficult (Shaw et al. 2015; Carucci et al. 2020; Matshawule et al. 2021; Wang et al. 2022).

Nevertheless, many instruments and collaborations worldwide are actively involved in (or have already completed) H I intensity mapping surveys. Some of them have successfully detected the signal by using secondary tracer information (Pen et al. 2009; Chang et al. 2010; Masui et al. 2013; Switzer et al. 2013; Wolz et al. 2017, 2022; Anderson et al. 2018; Tramonte & Ma 2020; Li et al. 2021a; Cunnington et al. 2023a; Amiri et al. 2023, 2024; Meerklass Collaboration 2025), keeping pace with more and more stringent upper limits (Ghosh et al. 2011; Chakraborty et al. 2021; Pal et al. 2022; Elahi et al. 2024), and even detecting the signal on its own, albeit at small (non-cosmological) scales (Paul et al. 2023). Finally, other radio telescopes have been (sometimes purposely) planned to perform radio intensity mapping and either construction or systematic characterisation is ongoing (Xu et al. 2015; Hu et al. 2020; Crichton et al. 2022; Abdalla et al. 2022; Li et al. 2023).

In the observational works cited above, the instruments used are varied (e.g. single-dish radio telescopes, phased array feeds, packed interferometers, and arrays used as a collection of single dishes). Contaminant removal strategies, too, differ substantially. Some teams have opted for foreground ‘avoidance’ and tried to filter the subset of the data with the strongest cosmological signal. Others use what the signal-processing community refers to as ‘blind source separation’ algorithms to try to be as agnostic as possible to the nature of the contaminants. Here, we opt for the latter.

This article focuses on the contaminant removal strategy adopted by the MeerKLASS collaboration, which runs H I intensity map observational campaigns with the MeerKAT telescope (Santos et al. 2016; Wang et al. 2021; Meerklass Collaboration 2025). In particular, our starting point is the detection of signal in cross-correlation between MeerKAT radio intensity maps and galaxies from the WiggleZ Dark Energy Survey at redshift z ≈ 0.4 reported in Cunnington et al. (2023a), which we refer to as CL23 in the remainder of the text.

In theory, astrophysical foregrounds are smooth along frequency, compared to the noisy spectral structure of the H I signal; hence, they are easily separable from the signal, for instance, via a principal component analysis (PCA), holding limited spectral degrees of freedom. In practice, bandpass fluctuations, chromatic beam response, and leakage of polarised foregrounds into the unpolarised signal render the spectrally smooth assumptions invalid. In this work, we address whether the hypotheses behind PCA-like methods are still satisfied with observations and how to pre-process the data to make contaminant removal moreefficient.

Building up an efficient cleaning pipeline is challenging. Sometimes, the optimum pre-processing choices are unclear and cannot be validated, given that simulations lag behind the complexity of data. The main idea of this paper is to update the cleaning strategy in CL23, test pre-processing choices, and report the conditions under which we re-detect the signal. We, therefore, use the CL23 detection as a benchmark and test pipeline features directly on observations. In particular, we want to assess and mitigate the main drawbacks that we have so far experienced with a PCA analysis: signal loss (e.g. in CL23, up to 80% of the signal power was reconstructed a posteriori at the largest scale probed) and the ambiguity of the cleaning level choice (which increases the variance linked to themeasurement).

We have structured the paper as follows. Section 2 describes the H I intensity mapping observations used in our study. Section 3 presents a mathematical framework for the contaminant subtraction problem and defines our cleaning approach. It also introduces a novel multiscale algorithm, mPCA. Section 4 outlines and justifies the data pre-processing steps. Section 5 details the results of the various contaminant separations performed. Section 6 explains how we compute the power spectra and the theoretical model we assume for their fitting. Section 7 showcases the cross-correlation of the cleaned intensity maps with the WiggleZ galaxies, constraining the clustering amplitudes, with Table 1 and Fig. 16 presenting a summary. Finally, Sect. 8 inspects the cubes cleaned with the new mPCA approach more closely, revealing improved component separation compared to standard PCA. We discuss our results and their implications in Sect. 9 and provide our concluding thoughtsin Sect. 10.

Results of the least-squares fits of the cross-power spectra of the MeerKAT H I IM maps and the WiggleZ galaxy at redshift z ≈ 0.4.

|

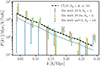

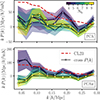

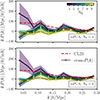

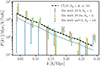

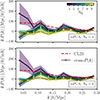

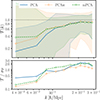

Fig. 16. Cross-power spectra between WiggleZ galaxies and MeerKAT H I intensity maps at redshift z ≈ 0.4. Maps have been cleaned by a PCA and PCAw analysis with Nfg = 4 (blue circles and orange diamonds respectively) and by mPCA with NL, NS = 4, 8 (green squares). We show error bars with 1σ uncertainties and the resulting best-fit curves (solid blue for PCA, dotted orange for PCAw and dashed green for mPCA). The black dashed line is the fitted model from CL23 (same data, different cleaning strategy) that instead was enhanced by a transfer function correction. |

2. The data set

The observations we use belong to the MeerKAT single-dish H I intensity mapping pilot survey run in 2019 and have already been described in Wang et al. (2021) and used by Irfan et al. (2022, 2024), Cunnington et al. (2023a), Engelbrecht et al. (2025). Below, we summarise the instrument, observation strategy, and pipeline used to compose the intensity maps.

MeerKAT is a radio telescope in the Upper Karoo desert of South Africa that will eventually become part of the final mid-instrument of the SKAO, SKA-Mid. It comprises 64 dishes of Ddish = 13.5 m in diameter that harbour three receivers (UHF, L, and S bands). Our data were obtained with the L-band receiver (900 − 1670 MHz) using a subset of 250 channels belonging to the range 971 < ν < 1023 MHz (corresponding to redshifts 0.39 < z < 0.46 for the 21-cm emission from H I), which is especially free of radio frequency interference (RFI) (Wang et al. 2021).

MeerKAT single-dish observations follow a fast scanning strategy at constant elevation to minimise the effect of 1/f noise and retain a continual ground pickup. Dishes move at 5 arcmin s−1 along azimuth, scanning about 10 deg in 100 s. The survey targeted a patch of ∼200 deg2 in the WiggleZ 11 h field (153° < RA < 172° and −1° < Dec < 8°) (Blake et al. 2010; Drinkwater et al. 2010, 2018), away from the galactic plane (where astrophysical foregrounds are brightest) and overlapping with 4031 WiggleZ galaxies, whose positions we cross-correlate with the intensity maps. Observations occurred during six nights between February and July 2019. After observing a bright-flux calibrator, each complete scan (i.e. observational block) across the sky patch took about 1.5 hours. We repeated it seven times, resulting in 10.5 hours of combined data.

Wang et al. (2021) detailed the data reduction pipeline for the time-ordered data (TOD) and subsequent map-making. It includes three steps for flagging RFI, bandpass and absolute calibration using known point sources (3C 273, 3C 237, and Pictor A), and the calibration of receiver gain fluctuations based on interleaved signal injection from a noise diode to remove the long-term gain fluctuations due to 1/f noise (Li et al. 2021b). The calibrated total intensity (in Kelvin) TOD are projected into map space (composed by square pixels of 0.3 deg width) adopting the flat-sky approximation and assuming uncorrelated noise. Finally, the calibrated temperature maps are combined for all scans and dishes at all frequencies by pixel-averaging, yielding the final data cube shown in the left panel of Fig. 1.

|

Fig. 1. Left panel: Temperature sky map averaged along the frequency range considered, i.e. 971 < ν < 1023 MHz. We highlight the footprint of the WiggleZ galaxies in magenta, the smaller footprint CF where we perform the analysis of this work in cyan, and in yellow the Tukey window function we use for the power spectrum computations (dashed and dotted for the zero and 50% boundaries.). Right panel: Normalised histograms of the sky temperature of the data cubes for the original footprint (OF) in solid blue with respect to the cropped CF in dashed orange. The histograms are computed from the average map for each cube, to marginalise the frequency-dependent evolution of the temperature field. The double-peak structure reflects the galactic synchrotron gradient (low versus high RA) present in our sky patch, as shown in the left panel. |

Observing in single-dish mode yields a map with resolution inversely proportional to the dish diameter. Specifically, the full-width-half-maximum (FWHM) of the central beam lobe for the channel of frequency, ν, is given by (Matshawule et al. 2021):

with c the speed of light. At our median frequency, it corresponds to θFWHM = 1.28 deg, namely, the size of 3 − 4 pixels.

Following CL23, as a result of the map-making and pixel-averaging steps, we estimated the pixel noise variance in the maps,  , which is inversely proportional to the number of hits (time) stamps. The inverse of the pixel noise variance is the inverse-variance weight maps,

, which is inversely proportional to the number of hits (time) stamps. The inverse of the pixel noise variance is the inverse-variance weight maps,  , that we use later in our analysis.

, that we use later in our analysis.

We used the ‘ABBA’ method (Wang et al. 2021) to remove the sky signal in order to estimate the channel-averaged thermal noise per pixel of the data cube. When considering data within their original footprint, we estimated the noise level to be of about 2.8 mK; it decreases to 2 mK when cropping data to a more conservative footprint (Fig. 1, left panel, cyan contour).

3. Statistical framework

We assembled the observed scans so that for each frequency channel, ν, we had a map of the total brightness temperature, X. For each given position on the sky (i.e. each pixel, p), the observed temperature is the sum of the cosmological 21 cm signal from H I (TH I), of the contaminants (TC), and of uncorrelated noise (TN):

In the separation process, we estimated TC and subtracted it from the original maps to yield the cleaned maps. We estimated TC by linearly decomposing it as a sum of Nfg foreground components (in the signal-processing literature typically called sources, S) modulated by a frequency-dependent amplitude, A:

We compressed all the maps in a data cube, X, with Npix × Nch dimensions, with Npix the number of pixels in each map and Nch the number of maps (frequency channels). By merging Eqs. (2) and (3), we can write X in its matrix form as

We call A the mixing matrix that regulates the contribution of the Nfg components S in the resulting ‘mixed’ signal. Then, H is the cosmological signal due to the neutral hydrogen 21-cm emission, to be added to a thermal (Gaussian) noise contribution, N. It follows that A has Nch × Nfg, while S has Nfg × Npix dimensions. The problem of contaminant subtraction reduces to determining the foreground driven  so that Xclean = X –

so that Xclean = X –  can estimate the cosmological H I brightness temperature plus the Gaussian noise contribution as accurately as possible. In practice, we only need to determine

can estimate the cosmological H I brightness temperature plus the Gaussian noise contribution as accurately as possible. In practice, we only need to determine  , as getting Xclean is equivalent to projecting

, as getting Xclean is equivalent to projecting  on the data cube X (Carucci et al. 2020):

on the data cube X (Carucci et al. 2020):

in other words,  acts as a filter on the data set.

acts as a filter on the data set.

Solving Eq. (4) to find the estimate  is an ill-posed inverse problem. We need some extra assumptions to move forward. Here, we focus on the Singular Value Decomposition (SVD) and the related principal component analysis (PCA). Here, we also introduce the multiscale analyses (dubbed mSVD and mPCA), which treat the spatial scales of the maps independently. Next, we see how

is an ill-posed inverse problem. We need some extra assumptions to move forward. Here, we focus on the Singular Value Decomposition (SVD) and the related principal component analysis (PCA). Here, we also introduce the multiscale analyses (dubbed mSVD and mPCA), which treat the spatial scales of the maps independently. Next, we see how  is derived according to the different algorithms used in this work (PCA, SVD, mPCA, and mSVD) and their weighted counterparts (PCAw, SVDw, mPCAw, and mSVDw) when using also the noise information contained in the wH I(ν,p) maps in the separation process.

is derived according to the different algorithms used in this work (PCA, SVD, mPCA, and mSVD) and their weighted counterparts (PCAw, SVDw, mPCAw, and mSVDw) when using also the noise information contained in the wH I(ν,p) maps in the separation process.

3.1. Principal component analysis and singular value decomposition

Overall, PCA is a dimensionality reduction technique that identifies a meaningful basis (in our case, that of the Nfg components) to re-express the data set; the principal components should exhibit the fundamental structure of data. Its use is extensive in scope, spanning multitudinous disciplines (see Jolliffe & Cadima 2016 for a general review). PCA assumes that the  decomposition maximises decorrelation among the new components, eliminating second-order dependencies. Those new orthogonal components are primarily responsible for the variance in the data, thereby revealing important structural features. It can be demonstrated that the directions corresponding to the largest variances are also associated with higher signal-to-noise ratios (Miranda et al. 2008), which holds true for the contaminants present in our observations.

decomposition maximises decorrelation among the new components, eliminating second-order dependencies. Those new orthogonal components are primarily responsible for the variance in the data, thereby revealing important structural features. It can be demonstrated that the directions corresponding to the largest variances are also associated with higher signal-to-noise ratios (Miranda et al. 2008), which holds true for the contaminants present in our observations.

We can obtain the PCA decomposition as the solution to an eigenvalue-eigenvector problem or equivalent to singular value decomposition (SVD) of the centred data cube since SVD is the generalisation of the eigendecomposition (Shlens 2014). Indeed, when we are not mean-centring the maps, we say we are performing an SVD data decomposition (in the literature, SVD is also referenced as an ‘uncentred PCA’).

In practice, computing the PCA of the data set X entails (i) subtracting the mean at each frequency to get the centred Xctr,

and (ii) computing the eigenvectors of the frequency-frequency covariance matrix. Instead, with SVD, we can go directly to step (ii), without carrying out the mean-centring. A priori, we do not know whether PCA or SVD is the best approach in our context; both have been used to characterise and remove contaminants from H I intensity mapping data sets (e.g. Switzer et al. 2013; Anderson et al. 2018 used SVD, CL23 used PCA). The statistics literature is not definitive either (e.g. see discussion in Miranda et al. 2008), as the best decomposition depends on the specific problem under consideration; it is common to test different algorithms directly on data and check the solutions (see, e.g. Alexandris et al. 2017). Here, we also adopted the latter strategy: whether or not to subtract the mean from maps is one of the pre-processing steps we tested in this work; hence, we refer to the PCA or SVD solutions of the contaminant subtraction procedure throughout the work.

Another common pre-processing choice within the PCA framework in the H I intensity mapping context is the weighted PCA (PCAw). This procedure consists of computing the covariance matrix of the weighted data cube, wH IX, through the inverse noise of the maps to downplay the influence of the pixels we are less confident about. As first realised by Switzer et al. (2013), it is convenient not to increase the rank of the data matrix to prevent the frequency structure in the weights from altering the data covariance. Hence, we recast wH I(ν,p) into its 2D projection wH I(p), taking the mean along each line of sight. The weights echo the integration time per pixel; hence, their separability in frequency-pixel is genuine1; with the latter projection, we enforced it to be mathematically true.

In the analysis of this work, we aim to test the ‘weighting’ in combination with the other pre-processing steps and this is why we coupled it to either the PCA or SVD.

3.1.1. Summary of PCA-based pipelines.

Schematically, the contaminant subtraction pipeline we followed consists of the following steps.

-

We compute the dot product R = DD⊺, with

-

We compute the eigenvectors V of R and order them by their eigenvalues, from largest to smallest.

-

We use the first Nfg eigenvectors (first Nfg columns of V) to define the mixing matrix

; namely,

; namely,  is a subset of V:

is a subset of V:

Having defined  , the solution Xclean is obtained through Eq. (5).

, the solution Xclean is obtained through Eq. (5).

3.2. The multiscale approach: Different scales require different treatment

A typical concern when cleaning H I intensity maps with methods based on Eq. (4) is that it becomes necessary to increase the aggressivity of the subtraction (by setting higher Nfg) to decrease variance in the large spatial scales of the maps. Meanwhile, on small scales, there are no dramatic changes during this process, resembling an ‘overfitting’ condition (e.g. see Fig. 5 in Wolz et al. 2017). Non-observational studies have also begun to spot (and quantify) this trend as more realistic simulations were involved. For instance, from Fig. 15 in Carucci et al. (2020), we can see that a more aggressive cleaning was employed to recover more signal power at large scales at the cost of removing a significant percentage of the signal on the intermediate and small scales. Furthermore, Carucci et al. (2020) demonstrated that the wavelet domain is an optimal, multiscale framework for analysing H I intensity mapping data, enabling a sparse (compact) representation of the astrophysicalforegrounds.

Building upon these previous findings, in this work, we want to test the multiscale cleaning with observations. We treated it as an extra pre-processing choice and, thus, we considered a multiscale PCA (mPCA) and the related mSVD and weighted versions, mPCAw and mSVDw. As we sketch in Fig. 2, once we decomposed the data cubes in different scales, we solved two independent contaminant subtraction problems, entailing different mixing matrices and allowing fordifferent Nfg.

|

Fig. 2. Flowchart describing the multiscale contaminant subtraction. |

3.2.1. Starlet filtering.

The wavelet decomposition offers a straightforward framework for analysing multiscale data. Here, we used the isotropic undecimated wavelet, also known as the starlet transform (Starck et al. 2007), which has proven to be well adapted to an efficient description of astrophysical images (e.g. Flöer et al. 2014; Joseph et al. 2016; Offringa & Smirnov 2017; Peel et al. 2017; Irfan & Bobin 2018; Ajani et al. 2020, and, in particular, Carucci et al. 2020 for the single-dish (low-angular-resolution) H I intensity maps). Starlets have the advantages of (i) representing an exact decomposition; (ii) being non-oscillatory both in real space and in Fourier space, they are efficient at isolating map features represented in either domain; (iii) having compact support in real space, which enables them to prevent systematic effects due to masks andborders.

An intensity map at frequency, ν′, can be decomposed by this transform into a so-called ‘coarse’ version of it, XL(ν′,p), plus several images of the same size at different resolution wavelet scales, j,

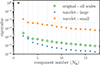

where we dropped the ν′ dependency for brevity. Wavelet-filtered maps, Wj, represent the features of the original map at dyadic (powers of two) scales, corresponding to a spatial resolution of the size of 2j pixels, with the largest scale of XL corresponding to the size of 2jmax + 1 pixels2. In our analysis, we set jmax = 1 after verifying that using higher wavelet scales does not manifestly improve our results. Hence, applying the starlet decomposition on the observed data cube gives rise to two new cubes,

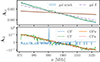

which we refer to as the large-scale and small-scale data sets. We display them in the first two panels of Fig. 3, averaged along frequency, within the smaller ‘conservative’ footprint (later defined in Sect. 4): those maps are the wavelet-filtered equivalents of the cyan-contoured map in the left panel of Fig. 1.

|

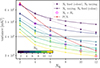

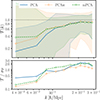

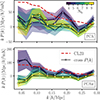

Fig. 3. First two panels: Wavelet-filtered large (first panel) and small scale (second) temperature sky map within the conservative footprint (CF) and averaged along all frequencies; i.e. the sum of the two maps above gives precisely the original one (within the cyan contour) in Fig. 1. Third panel: Spherically averaged power spectra of the cubes (large scale in solid blue, small in dashed orange) normalised by the variance of each cube. With a green dotted line, we plot the damping term of the telescope beam (refer to the y-axis at the right of the panel). Bottom panels: Cylindrical power spectra of the large (left panel) and small-scale cubes (right). Since the wavelet filtering is performed in the angular direction, the difference among the resulting cubes is mostly visible along k⊥ (x-axis). The beam suppression also acts in this direction: we plot its expected damping term with iso-contour solid lines corresponding to 50, 20, and 5% suppression, from left toright. |

Since we went on to later analyse data in three-dimensional Fourier space, we plotted the power spectra of those cubes in the third panel of Fig. 3: the description of ‘large’ and ‘small’ scales holds in k− space, also; although there is no simple, sharp boundary. We compared the behaviour of those three-dimensional spectra with the expected beam suppression with a dotted green line, assuming it is frequency-independent and Gaussian (following Eq. (1) and the modelling later described by Eq. (14)). The values of the damping effect of the beam are on the y-axis on the right of the panel. The large-scale XL has most of its power for k ≲ 0.15 h/Mpc, where the telescope beam is suppressing up to 70% of the sky signal, then it flattens. The small-scale XS lacks power at small k, reaching a peak at k ≈ 0.08 h/Mpc, corresponding to ≈30% of beam damping. It might be more intuitive to look at the two-dimensional power spectra in the bottom panels of Fig. 3 (left for XL and right for XS) since the wavelet filtering is applied in angular space. Most of the power of XL is at the largest k⊥ where the beam damping is below 50%. The XS power is significant after that 50% bound and slowly decreases.

3.2.2. Summary of the multiscale cleaning.

Once we split the data into large-scale and small-scale cubes, we performed two independent contaminant subtractions, as described in Sect. 3.1. These subtractions result in two solutions, XL, clean and XS, clean, which we summed to get the actual cleaned cube of the multiscale analysis, a unique whole-scale solution, as per Eq. (7). We schematically summarise this process in Fig. 2.

The multiscale framework can be coupled to any cleaning method. Here, we focus on the PCA-based algorithms already presented. Hence, when we apply PCA (SVD) to both XL, clean with NL components removed and XS, clean with NS, we refer to mPCA (mSVD). We can also weight XL and XS with the inverse-variance weights, hence resulting in the weighted counterparts, mPCAw and mSVDw. We notice from Fig. 3 that the large scale keeps the dimensionality of the cube, so we can apply an SVD to it (i.e. without subtracting the mean temperature value from the maps). The small-scale maps are inherently zero-centred, so using a SVD on them is equivalent to applying a PCA.

3.3. General consequences of the component separation

We expect the cosmological H I signal to be far less correlated in frequency and subdominant in amplitude relative to the foregrounds. These points have two immediate consequences in the separation process.

First of all, (i) the linear decomposition of AS in Eq. (4) is an inconvenient description of the H I signal and when forcing that decomposition on data, leakage of some of the H I signal into the AS product is unavoidable. Specifically, if, on the one hand, foregrounds can be restricted to a subset of these modes, on the other, the H I signal spreads across all modes. It, therefore, becomes crucial to perform the separation task with the smallest possible number Nfg of components. For instance, Cunnington et al. (2021) report an H I signal loss in a PCA analysis of the order 10% and 60% when Nfg is set to 5 and 30, respectively, and finds the latter results almost independent of the complexity of the foregrounds considered in their simulation. Point (i) holds for any separation method based on Eq. (4), although different algorithms may lead to different amounts of signal loss.

Furthermore, (ii) the cosmological signal is inherently coupled to the uncorrelated noise component – such as the thermal part of the instrumental noise – hence, we do not attempt to separate H + N at the cleaning stage3. In what follows, with ‘recovered signal’, ‘cleaned data cube’, and similar expressions, we mean the estimate of the cosmological H I signal plus the uncorrelated component of the noise, Xclean.

3.3.1. Considering the number of components Nfg.

PCA-like methods are dubbed ‘blind’ or ‘unsupervised’: no prior information on the cosmological signal is required. However, we do not know a priori the optimal number of components Nfg needed to describe the contaminants and preserve as much cosmological signal as possible.

Typically, as an exploratory analysis, we can plot the eigenvalues of the covariance eigendecomposition in decreasing order, as we do in Fig. 4. The number of plotted values before the last substantial drop in the magnitude of eigenvalues suggests how many components are needed to ‘explain’ the data, that is to say, to account for the largest part of the data variance. Indeed, in the contexts where PCA is successful, the change in magnitude among the eigenvalues is unquestionably visible (in the literature, these plots are called ‘scree plots’). Works based on simulations show evident scree plots; the scree becomes less and less pronounced when additional systematics are modelled on top of astrophysical foregrounds (e.g. Carucci et al. 2020), highlighting the mode-mixing at play. Setting Nfg unambiguously in observations is challenging. Methods that automatically find the appropriate sub-space where contaminants live typically need prior information, for instance, on the signal covariance (Shaw et al. 2014; Olivari et al. 2016).

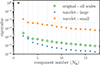

|

Fig. 4. Normalised eigenvalues of the frequency-frequency covariance matrix of the data cube. Circles refer to the original cube, plus signs to the wavelet-filtered large-scale cube, and ‘x’ crosses for the small-scale cube. The large-scale eigenvalues drop down faster with Nfg: the PCA assumption holds better in this case, and a few modes are enough to describe the large-scale data set. These values correspond to mean-centred maps cropped on the conservative footprint, although the eigenvalues behaviour does not change significantly for the other cases. |

Here, we reverse the argument and claim that if different pre-processing or filtering choices lead to various ‘scree’ eigenvalues behaviour, those that emphasise the magnitude drop are scenarios where the PCA assumptions hold better, resulting into an efficient decomposition. For instance, looking at Fig. 4, the large-scale cube is the data set for which the PCA assumptions hold better (blue plus signs); hence, we can anticipate that on the large scale, PCA will be more efficient – a small NL will be enough to describe contaminants – compared to the small-scale counterpart and the PCA without any wavelet filtering. For each cleaning method, we determined the values of Nfg (or NL, NS) by identifying those that yield the best cross-correlation measurements with the external galactic data set.

4. Pre-processing steps

Here, we discuss the pre-cleaning choices we made to optimise the contaminants-signal separation, highlighting the differences in comparison to the CL23 analysis we have built upon.

4.1. PCA-informed footprint.

In the left panel of Fig. 1, we show the original footprint, OF, of the data set. With a solid cyan line, we highlight the smaller ‘conservative’ footprint, CF. We have defined the CF by analysing the first four components  found by PCA, projecting

found by PCA, projecting  on X, for the OF analysis; we show these first four components in the first column of Fig. 5. We have drawn iso-contours in normalised intensity in the component maps and found that most of the pixel variance captured by the PCA decomposition was contained in the pixels outside the CF, marked by a solid black boundary. The PCA framework is known to be prone to outlier mishandling4, hence the need to suppress their influence either by removing them from the data set, adopting the CF, or by performing a weighted PCAw since the outlier pixels correspond to those with higher noise5. A close look at the second column of Fig. 5 suggests that the weighting scheme might not be sufficient and that the complete pixel-flagging choices (last two columns) deliver more reasonable principal components (see later in Sect. 5 a more in-depth discussion on what we mean by a ‘reasonable’ morphology of the sources). We later compare the two options more quantitatively, weighting versus cropping.

on X, for the OF analysis; we show these first four components in the first column of Fig. 5. We have drawn iso-contours in normalised intensity in the component maps and found that most of the pixel variance captured by the PCA decomposition was contained in the pixels outside the CF, marked by a solid black boundary. The PCA framework is known to be prone to outlier mishandling4, hence the need to suppress their influence either by removing them from the data set, adopting the CF, or by performing a weighted PCAw since the outlier pixels correspond to those with higher noise5. A close look at the second column of Fig. 5 suggests that the weighting scheme might not be sufficient and that the complete pixel-flagging choices (last two columns) deliver more reasonable principal components (see later in Sect. 5 a more in-depth discussion on what we mean by a ‘reasonable’ morphology of the sources). We later compare the two options more quantitatively, weighting versus cropping.

|

Fig. 5. First four components |

We show the temperature histograms of the data cubes in the right panel of Fig. 1. The pixels that have been cut out with the new footprint, after looking at the PCA-derived components, are indeed outliers of the original data temperaturedistribution.

4.2. No extra channel flagging.

CL23 performed an extra flagging round on the channels displaying high variance peaks before the cleaning. After channels have been discarded, either we inpaint the missing maps, or we ignore their absence and perform the cleaning on the chopped data cube. CL23 did the latter. However, the PCA decomposition boils down to recovering templates that, modulated in frequency, sum up to the full data cube. When the modulation in frequency is spoiled by the missing channels, the decomposition becomes trickier; in other words, it becomes harder for component analyses of this kind to determine mixing matrices with discontinuities, as shown in Carucci et al. (2020), Soares et al. (2022). Here, we chose to keep all the 250 channels in the analysis. In Appendix A, we checked whether extra flagging could have been beneficial in the set-up of this analysis, ultimately finding it to be detrimental, in agreement with simulation-based works.

Additionally, retaining the full frequency range in the analysis allows us to compensate for the information lost with the CF cropping: we worked with a comparable number of voxels as in CL23, however, with a lower estimated noise(Sect. 2).

4.3. No extra smoothing of the maps.

Cleaning algorithms based on Eq. (4) perform better when maps share a common resolution. Indeed, in the decomposition, the templates  get modulated in frequency through

get modulated in frequency through  ; however, the mixing matrix

; however, the mixing matrix  cannotaccommodate for resolution differences at the map level, as it gives only an overall amplitude adjustment to the

cannotaccommodate for resolution differences at the map level, as it gives only an overall amplitude adjustment to the  components in the frequency direction. Given this intrinsic limitation of the

components in the frequency direction. Given this intrinsic limitation of the  decomposition, accurate beam deconvolution should be ideally performed before the cleaning. CL23 opted for smoothing all maps with a frequency-dependent Gaussian kernel to achieve a common resolution 1.2 times worse than the largest resolution (i.e. with a FWHM that is 1.2 times greater than that of the lowest frequency map, assuming it is Gaussian). CL23 were not the first to opt for the ‘reconvolution’ choice (e.g. Masui et al. 2013; Wolz et al. 2017, 2022; Anderson et al. 2018), and, besides downgrading the maps to a common resolution, the extra smoothing is essentially a low-pass filter that removes the small-scale information in the maps, which one can relate to spurious artifacts. However, the effects of the channel-dependent Gaussian smoothing on the properties of the real-beam-convolved H I field have not been fully understood yet. Spinelli et al. (2022) performed different cleaning methods on simulated data with and without the frequency-dependent smoothing and found the latter practice to be detrimental in all the cases analysed (see also Matshawule et al. 2021 for the effects of a realistic beam in the cleaning process). Here, we opted for the simplest option: leave the maps’ resolutions as they are. Moreover, the beam resolution does not change dramatically within the frequency range we use (1.24 − 1.31 deg), and the level of uncertainties in our measurement allows us to nevertheless model the theoretical expectation for the three-dimensional power spectrum with a Gaussian beam relative to the median frequency of the data cube (Sect. 6).

decomposition, accurate beam deconvolution should be ideally performed before the cleaning. CL23 opted for smoothing all maps with a frequency-dependent Gaussian kernel to achieve a common resolution 1.2 times worse than the largest resolution (i.e. with a FWHM that is 1.2 times greater than that of the lowest frequency map, assuming it is Gaussian). CL23 were not the first to opt for the ‘reconvolution’ choice (e.g. Masui et al. 2013; Wolz et al. 2017, 2022; Anderson et al. 2018), and, besides downgrading the maps to a common resolution, the extra smoothing is essentially a low-pass filter that removes the small-scale information in the maps, which one can relate to spurious artifacts. However, the effects of the channel-dependent Gaussian smoothing on the properties of the real-beam-convolved H I field have not been fully understood yet. Spinelli et al. (2022) performed different cleaning methods on simulated data with and without the frequency-dependent smoothing and found the latter practice to be detrimental in all the cases analysed (see also Matshawule et al. 2021 for the effects of a realistic beam in the cleaning process). Here, we opted for the simplest option: leave the maps’ resolutions as they are. Moreover, the beam resolution does not change dramatically within the frequency range we use (1.24 − 1.31 deg), and the level of uncertainties in our measurement allows us to nevertheless model the theoretical expectation for the three-dimensional power spectrum with a Gaussian beam relative to the median frequency of the data cube (Sect. 6).

We check in Appendix A what reconvolution could do in terms of the final measurements. We find no improvements and even a detrimental effect in the case of the multiscale cleaning.

5. Results of the component separation

5.1. Performing the cleaning within the conservative footprint

5.1.1. Mixing matrix

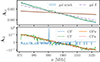

We started by applying the PCA cleaning (as per the algorithm described in Sect. 3.1) in the OF and CF. The mixing matrix has all the information for getting to the PCA solution; hence, we started by looking at the derived mixing matrices. In Fig. 6, we show the first two modes (components at the top for the first and bottom panel for the second) of the PCA-like decompositions. Especially for the PCA analysis within the OF (blue solid lines), the second principal mode  (bottom panel) is completely dominated by sharp peaks at specific channels: the PCA decomposition uses this mode to describe the large variance fluctuations in a subset of the maps. In contrast, the behaviour of

(bottom panel) is completely dominated by sharp peaks at specific channels: the PCA decomposition uses this mode to describe the large variance fluctuations in a subset of the maps. In contrast, the behaviour of  gets closer to the expected power-law-like when we take weights into account with PCAw (solid orange line). When analysing the CF (green and red lines), modes 1 and 2 are also spectrally smooth, as the galactic diffuse emissions that should dominate the maps.

gets closer to the expected power-law-like when we take weights into account with PCAw (solid orange line). When analysing the CF (green and red lines), modes 1 and 2 are also spectrally smooth, as the galactic diffuse emissions that should dominate the maps.

|

Fig. 6. Normalised first two modes derived by the PCA analysis performed on the original footprint OF (solid blue line) and the conservative footprint CF (solid orange), and their weighted version ‘OFw’ (solid green) and ‘CFw’ (red dashed). For comparison, in the first mode plot (top panel), we show a proxy for the spectral index of the galactic free-free emission (dash-dotted, spectral index of −2.13, Dickinson et al. 2003) and a range of possible galactic synchrotron spectral indexes (blue shaded area, between −3.2 and −2.6, Irfan et al. 2022). |

While there is no one-to-one relationship between PCA-derived components and actual astrophysical objects, it is noteworthy that the first mode of PCA, when run on the CF, exhibits a frequency behaviour that closely resembles the diffuse synchrotron emission of the Milky Way (Irfan et al. 2022). However, a principal component doing better at isolating the galactic synchrotron does not necessarily indicate that the residuals are cleaner; a first principal component that combines, e.g. free-free and synchrotron emissions, would also reflect the expecteddominant contributions in the data. Indeed, all first modes displayed in the top panel of Fig. 6 ‘have something to do’ with the galaxy, which is expected and reassuring.

The SVD mixing matrices (with or without weighting and for the different footprints) are extremely similar to the PCA counterparts; hence, we do not show them here.

5.1.2. Sources

The modes (i.e. the columns of  ) described above are associated with the corresponding components:

) described above are associated with the corresponding components:

We show the first four in Fig. 5, from top to bottom; the first two columns refer to the analysis with the OF, last two columns to the CF, without and with weighting. Components associated with the OF analysis are different than those associated with the CF. Even though the PCA components are not straightforward to interpret as they cannot be exactly paired to physical emissions, they are nevertheless informative – as for their associated frequency behaviour described above. For example, can we see the galactic morphology influence in them? For the OF case, components 1, 3 and 4 display the left-to-right gradient that is linked to the synchrotron at this sky position (Irfan et al. 2022), whereas, for the PCA solution for the CF, the synchrotron gradient is visually present for the first component only. The other three likely mainly capture the morphology of some non-astrophysical systematics, given e.g. the sharp horizontal boundaries6 of component 3 and the zigzag structure of component 4. In the CF case, components that look visually galactic are linked to smooth modes (see above and in Fig. 6). In contrast, the situation is more confusing for the OF case: galactic-like components are associated with non-smooth modes, too. Indeed, when working with the OF, we are witnessing more ‘mode-mixing’.

Again, we do not show SVD results for brevity. The SVD solutions appear somewhat different regarding the  decomposition; however, these differences are not appreciable enough to make claims at this stage of whether the SVD analysis is more or less optimal than PCA (e.g. the pre-processing choice of using the CF rather than the OF has a much more significant impact). We let the cross-correlation measurements quantify the PCA-SVD distinctness.

decomposition; however, these differences are not appreciable enough to make claims at this stage of whether the SVD analysis is more or less optimal than PCA (e.g. the pre-processing choice of using the CF rather than the OF has a much more significant impact). We let the cross-correlation measurements quantify the PCA-SVD distinctness.

5.1.3. Variance

We expect the variance to decrease as a function of Nfg. Furthermore, to minimise cosmological signal loss that increases with Nfg, we would aim to reach the expected noise level of the data with the smallest Nfg possible. We recall here that noise dominates the cosmological signal in this data set. Hence, even though this exercise cannot tell us how much residual contamination is still present in the cubes, we can compare the PCA-based pipelines as the faster (i.e. lower Nfg), they reach the noise level, the more optimal. We do so with the solid lines in Fig. 7. The blue line, corresponding to a PCA analysis on the original footprint OF, reaches the OF noise level (pink stripe) at Nfg = 23 − 24; whereas the green line (PCA on the CF) reaches the CF noise level (grey) at Nfg = 7 − 8. Performing the PCA decomposition on the CF leads to a more efficient signal component separation.

|

Fig. 7. Variance in the PCA-cleaned cubes as a function of Nfg. The solid blue line corresponds to a PCA cleaning applied to the original footprint OF that, if cropped a-posteriori on the smaller CF, corresponds to the variance displayed by the solid orange line. The solid green line corresponds to PCA performed directly on the CF. We compare these results with a weighted PCAw with dotted lines, in the OF case (red), OF-later-cropped (violet) and CF (brown). The horizontal stripes mark the estimated noise level of the OF (pink) and CF (grey) cubes. These results do not change for an SVD analysis. |

By working on the CF, we are suppressing the high variance pixels at the beginning of our analysis, namely, before the cleaning; thus, we might want to consider whether: (i) the previous result is due to the missing outlier pixels rather than a more efficient cleaning and (ii) performing a weighted PCAw on the full OF could allow us to suppress the influence of the high-variance pixels in the signal separation, without cropping the maps. We tested both hypotheses and checked whether they lead to a cleaning efficiency comparable to (or even better than) the PCA applied on the CF.

Regarding hypothesis (i), we cropped the cleaned cubes obtained with the PCA on the OF to the CF, by trimming the maps after the cleaning. The results are shown in orange in Fig. 7. We note that we are not improving the OF results, as we keep reaching the noise (in grey for the CF) around Nfg = 21 − 22. The extra channel flagging is a condition for PCA to deliver a better decomposition. The filtering solution differs from the OF case for the retained pixels, as previously checked by looking at the modes and components of the decomposition.

To check hypothesis (ii), we employed PCAw. The results are shown by the red dotted line in Fig. 7: we improve upon the plain PCA solution (blue line) by being more effective and reaching the noise floor at Nfg = 15 − 16, but not as efficiently as in the CF case (green line). Instead, performing a weighted PCAw for the CF case (dotted violet line) does not improve upon the plain PCA. The weight map inside the CF is indeed more uniform, making it less worthwhile to apply the weighting.

We have demonstrated that if data do not have an approximately uniform weight across the cube, then a weighted decomposition is worthwhile in the PCA framework, although it is not as crucial as removing the outlier pixels in the temperature distribution altogether.

Summarising this section, we have found that a PCA analysis on the small CF is more effective than the OF and than its weighted version. Similar conclusions apply to SVD. We continued working solely with the CF in the remainder of this work.

5.2. Cleaning spatial scales independently

In this section, we take a closer look at mixing matrices, sources, and variance of the multiscale cleaning solutions. We focus mostly on mPCA, as we find no appreciable differences with respect to mSVD.

5.2.1. Mixing matrix

Overall, mPCA corresponds effectively to two PCA analyses, PCA-L and PCA-S, performed independently on the maps’ large and small spatial scales and acting on them separately. We started by looking at the two mixing matrices,  and

and  , and see which modes they will project out from the data. We plot the first four modes of both matrices in Fig. 8, solid blue and dotted green for

, and see which modes they will project out from the data. We plot the first four modes of both matrices in Fig. 8, solid blue and dotted green for  and

and  respectively, and compare them with the plain PCA solution too (empty orange circles). Each panel from top to bottom refers to a mode. The first two modes are almost identical for PCA and PCA-L; instead, the last two differ mainly in the position of the peaks. Since these modes are projected on the entire data cube for PCA and only on the coarse scale of the maps for PCA-L, this finding implies that, in the case of the plain PCA, the large-scale information drives the decomposition, imposing its solution (filtering) also on the small scale of the maps. Indeed, the first two modes of the small-scale driven PCA-S follow the same trend but are much more fluctuating: they are accommodating for channel amplitude fluctuations of these first modes occurring just on the small scale.

respectively, and compare them with the plain PCA solution too (empty orange circles). Each panel from top to bottom refers to a mode. The first two modes are almost identical for PCA and PCA-L; instead, the last two differ mainly in the position of the peaks. Since these modes are projected on the entire data cube for PCA and only on the coarse scale of the maps for PCA-L, this finding implies that, in the case of the plain PCA, the large-scale information drives the decomposition, imposing its solution (filtering) also on the small scale of the maps. Indeed, the first two modes of the small-scale driven PCA-S follow the same trend but are much more fluctuating: they are accommodating for channel amplitude fluctuations of these first modes occurring just on the small scale.

|

Fig. 8. Normalised first four modes (from the top to the bottom panel) derived by the PCA analysis (empty orange circles) and the multiscale mPCA (lines). With mPCA, we derive two mixing matrices, |

5.2.2. Sources

The modes discussed above correspond to the components  and

and  that we show in Fig. 9, where the left column is for the large-scale analysis and the rest for the small-scale. We can compare these maps with the whole-scale

that we show in Fig. 9, where the left column is for the large-scale analysis and the rest for the small-scale. We can compare these maps with the whole-scale  components shown in the third column of Fig. 5. The

components shown in the third column of Fig. 5. The  maps indeed reflect the large scale of S, namely, they are coarse-grained versions of the S maps. Again, this confirms the hypotheses that (i) the large-scale information drives the plain PCA analysis when working with the whole-scale original cube; (ii) the smooth astrophysical emissions acting as foregrounds correspond to morphologically wide, large-scale regions, compared to the sky area and the intrinsic spatial resolution of the observed maps.

maps indeed reflect the large scale of S, namely, they are coarse-grained versions of the S maps. Again, this confirms the hypotheses that (i) the large-scale information drives the plain PCA analysis when working with the whole-scale original cube; (ii) the smooth astrophysical emissions acting as foregrounds correspond to morphologically wide, large-scale regions, compared to the sky area and the intrinsic spatial resolution of the observed maps.

|

Fig. 9. First four normalised components |

It is harder to interpret the  . They are indeed descriptions of the small scale decomposition, breaking down the ‘stripes’ and ‘zigzag’ seen in

. They are indeed descriptions of the small scale decomposition, breaking down the ‘stripes’ and ‘zigzag’ seen in  ; except maybe the first

; except maybe the first  component, where we do see hints of the point sources present in our footprint (Wang et al. 2021). It is indeed this first SS that corresponds to a mode with a similar trend with what is seen in the mixing matrices

component, where we do see hints of the point sources present in our footprint (Wang et al. 2021). It is indeed this first SS that corresponds to a mode with a similar trend with what is seen in the mixing matrices  and

and  : we expect it to carry some information of the astrophysical foregrounds. To a lesser extent, this is true also for the second

: we expect it to carry some information of the astrophysical foregrounds. To a lesser extent, this is true also for the second  component: it is associated with a smooth mode, close to the second mode of

component: it is associated with a smooth mode, close to the second mode of  and

and  ; yet in this case we cannot recognise an astrophysically motivated morphology. Probably, the latter is because these astrophysical emissions have leaked in a systematics-driven component. For concision, we do not show the modes 5–8 associated with those last four

; yet in this case we cannot recognise an astrophysically motivated morphology. Probably, the latter is because these astrophysical emissions have leaked in a systematics-driven component. For concision, we do not show the modes 5–8 associated with those last four  components: they are spiky and oscillating around zero (modes with short correlation in frequency), so it is hard to relate them to any motivated signal component or systematic contaminant. We might ask whether these small-scale fluctuating components are actual cosmological signal. Even though we always incur some signal loss, these are still the first eight principal components, those of highest variance, and typically smoother than components that would be next in the decomposition. The PCA-S is no more and no less harmful in terms of signal loss than PCA-L. It is just acting on smaller scales, as we explicitly check in Sect. 7.6, where we test on simulations the filtering effect of mPCA.

components: they are spiky and oscillating around zero (modes with short correlation in frequency), so it is hard to relate them to any motivated signal component or systematic contaminant. We might ask whether these small-scale fluctuating components are actual cosmological signal. Even though we always incur some signal loss, these are still the first eight principal components, those of highest variance, and typically smoother than components that would be next in the decomposition. The PCA-S is no more and no less harmful in terms of signal loss than PCA-L. It is just acting on smaller scales, as we explicitly check in Sect. 7.6, where we test on simulations the filtering effect of mPCA.

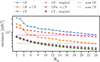

5.2.3. Variance

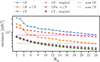

We look at the variance of the cleaned mPCA cubes as a function of the number of components removed. Although we decided to work with the CF footprint only, in Fig. 10 we show the variance of the OF cubes cleaned with mPCA, to check the effects of cropping and of weighting for mPCA (and mSVD) too. As in Fig. 7, the solid line in blue (green) corresponds to an mPCA cleaning applied to the OF (CF), to be compared with the weighted counterpart in dotted red (brown) and to the effect of cropping the OF cleaned results to the CF (solid orange of mPCA and dotted violet for weighted-mPCA); pink (grey) bands are the expected noise floor for the OF (CF) observed cubes. On the x-axis we show the number of modes removed, fixing NL = NS. The conclusions that we can draw are similar (but stronger) to those we drew from Fig. 7: (i) weighting alone is not sufficient for dealing with the high-variance pixels, and (ii) the cropping is indeed beneficial for the mPCA solution to be more descriptive hence efficient for our data cube. We do not show any other weighted mPCAw (or mSVD, mSVDw) results, as we do not find appreciable differences with respect to mPCA.

|

Fig. 10. Variance in the mPCA cleaned cubes as a function of the number of components removed when setting NL = NS (=Nfg, as in the x-axis). The legend is the same as in Fig. 7. These results do not change for an mSVD analysis. |

It is informative to compare Figs. 7 and 10: our first direct comparison of the multiscale cleaning versus the standard PCA. Even when working within the OF, the mPCA residual cube reaches the noise floor (in pink) with only Nfg = 12 − 13 components removed. These results do not take full advantage of the multiscale method that entails two independent subtractions, since we are imposing NL = NS.

We look at the effect of varying NL and NS independently in Fig. 11, for the CF only. With the dotted lines, we let NS vary and keep NL fixed, given by the colour of the line (inset colour scale); it is the other way around for the solid lines (NL varying, NS fixed). Increasing NL is the primary driver of a variance drop for NL ≤ 4; after four modes removed on the large scale, the variance decrease slows down (keeping NS fixed). Conversely, the contribution of the increase of NS to reduce the variance is more constant, also beyond the range of mode numbers explored in Fig. 11. With the empty magenta symbols, we highlight the cases where the cleaning has been performed with NL = NS for a quicker comparison with the PCA results (solid red line). We demonstrate once again that applying PCA separately on the spatial scales (i.e. mPCA) is not equivalent to applying it on the whole-scale original cube, even when the number of components removed is the same.

|

Fig. 11. Variance in the mPCA cleaned cubes varying the number of components removed. With dotted lines and filled symbols, we refer to cleaning by fixing the number of the large-scale components removed, NL, given by the colour of the line (inset colour scale), and with solid lines and empty symbols, the other way around: NS is kept fixed (colour) and NL is varying (x-axis). With empty magenta symbols we highlight the cubes cleaned with NL = NS, for a more direct comparison with the PCA results, shown by the red squares and solid line. We show the estimated uncorrelated noise level with a grey band. |

The results shown so far have been primarily qualitative, looking at the properties of the PCA and mPCA decompositions. In Sect. 9, we discuss how demonstrative these tests are and what we have learnt from them. In the next section, we present more quantitative results and evaluate the cleaned cubes by measuring their cross-correlation signal with the WiggleZ galaxies.

6. Cross-power spectrum: Estimation and theoretical modelling

We analysed the cosmological signal present in the maps through its cross-power spectrum with the position of the 4031 WiggleZ galaxies, PH I,g. For the estimator and theoretical modelling, we closely followed CL23.

6.1. Cross-power spectrum estimation

The MeerKAT data cube is in celestial coordinates and receiver channel frequency (RA, Dec, ν). To perform fast Fourier transform (FFT) calculations on data, we re-grid the cube onto regular three-dimensional Cartesian coordinates x with lengths lx1, lx2, lx3 in h−1 Mpc, assuming a Planck18 cosmology (Planck Collaboration I 2020) and voxel volume, Vcell.

The Fourier transforms of the H I temperature maps δTH I and the galaxy number field ng are defined as

where Ng = ∑ng is the total number of galaxies,  is the weighted Fourier transform of the normalised selection function, Wg, which takes care of the incompleteness of the galaxy survey, as designed by the WiggleZ collaboration (Blake et al. 2010), and the weights wH I(x) and wg(x) are the inverse variance map introduced in Sect. 2 and the optimal weighting of Feldman et al. (1994), for the H I and galaxy fields respectively.

is the weighted Fourier transform of the normalised selection function, Wg, which takes care of the incompleteness of the galaxy survey, as designed by the WiggleZ collaboration (Blake et al. 2010), and the weights wH I(x) and wg(x) are the inverse variance map introduced in Sect. 2 and the optimal weighting of Feldman et al. (1994), for the H I and galaxy fields respectively.

Additionally, to limit the ringing in the spectra calculations (i.e. spurious correlations between adjacent k-bins), we apply tapering functions that smoothly suppress the cleaned data to zero at the edges. CL23 apodised the cube in the frequency direction using a Blackman window (Blackman & Tukey 1958); in the angular direction, the weight maps wH I(x) fall off at the edges due to scan coverage and effectively act as tapering, hence no further tapering was needed. In this analysis, we keep the Blackman choice in the line-of-sight direction, but since we use a smaller footprint – the CF – the weights within the CF no longer act as tapering. Instead, we adopt a Tukey window function7 to maximise the number of pixels whose intensity information is retained in the power spectrum computation (Harris 1978); we display it in the left panel of Fig. 1 with the yellow curves–dotted and dashed for when the window reaches 0.5 and 0, respectively.

Finally, we define the cross-power spectrum estimator as

All spectra are averaged into bandpowers |k| ≡ k yielding the final spherically averaged power spectra measurements that we compare against theory.

Our cleaned intensity maps are highly noise-dominated (see Sect. 2, i.e. the noise level is of about 2 − 3 mK), hence we use Gaussian statistics to analytically estimate the uncertainties of the cross-spectrum measurements:

where Nm is the number of modes in each k-bin and  is the number density of galaxies. The auto-spectra of the fields,

is the number density of galaxies. The auto-spectra of the fields,  and

and  , are computed in the same fashion of Eq. (10), namely,

, are computed in the same fashion of Eq. (10), namely,

where we included a shot noise term in the galaxy auto-spectrum, whereas  includes (and is dominated by) thermal noise. CL23 compared these analytical error estimations to the ones calculated from cross-correlating the MeerKAT data with the random WiggleZ catalogues used to derive the selection function, finding good agreement. In Sect. 7, we further test the Gaussian error assumption by means of a reduced χ2 analysis and comparing them directly with the data-driven jackknife errors, finding also good agreement.

includes (and is dominated by) thermal noise. CL23 compared these analytical error estimations to the ones calculated from cross-correlating the MeerKAT data with the random WiggleZ catalogues used to derive the selection function, finding good agreement. In Sect. 7, we further test the Gaussian error assumption by means of a reduced χ2 analysis and comparing them directly with the data-driven jackknife errors, finding also good agreement.

6.2. Theoretical modelling

Our fiducial cosmological model is Planck18. We assume it to compute the linear matter power spectrum Pm, derived through CAMB (Lewis et al. 2000), and all other cosmological quantities (see below). We work at the effective redshift zeff = 0.42, corresponding to the median frequency of the data cube.

We adopt the following cross-power spectrum model:

is the mean H I temperature of the field that, at fixed cosmology, depends on the overall neutral hydrogen cosmic abundance ΩH I (Furlanetto et al. 2006):

is the mean H I temperature of the field that, at fixed cosmology, depends on the overall neutral hydrogen cosmic abundance ΩH I (Furlanetto et al. 2006):

where Ωm and ΩΛ are the density fractions for matter and the cosmological constant, respectively. bH I and bg are the H I and galaxy large-scale linear biases and r is their cross-correlation coefficient. We account for linear matter redshift-space distortions (RSD) with the (1 + fμ2)2 factor (Kaiser 1987), where f is the growth rate of structure and μ is the cosine of the angle from the line-of-sight. The Gaussian damping in the last term approximates the smoothing effect of the telescope beam; in particular, we compute the corresponding standard deviation (Matshawule et al. 2021)

through the angular resolution FWHM parameter θFWHM of Eq. (1), and multiply it by the comoving angular diameter distance to the effective redshift–median frequency–of the observed data set, yielding Rbeam = 10.8 Mpc h−1. Given the current noise in the data and uncertainties of the measurements, we find the above modelling of beam smearing to be sufficient for thisanalysis.

For a direct comparison, we sample the power spectrum template in Eq. (14) onto the same Fourier-space grid as the data, and convolve it with the same window functions. Together with fixing cosmology, we set the galaxy bias  (Blake et al. 2011). The only free parameter left to be fitted against data is the combination ΩH I bH Ir, namely, the amplitude of the cross-power spectrum of Eq. (14).

(Blake et al. 2011). The only free parameter left to be fitted against data is the combination ΩH I bH Ir, namely, the amplitude of the cross-power spectrum of Eq. (14).

For reference, CL23 measured ΩH I bH Ir = (0.86 ± 0.10) × 10−3, derived using a transfer function (𝒯) approach to compensate for the signal loss, namely, enhancing the measurements by about 80% for k < 0.15 h/Mpc down to 20% at the smallest scale considered. We did not perform the 𝒯-correction on the spectra measured in this analysis, for reasons that will become clear in later sections, yet delivering a cross-spectra in agreement (and even with higher amplitude) with CL23.

7. Capitalising on our benchmark: Measuring the correlation with the WiggleZ galaxies

We assessed the new cleaning pipeline choices and methods described in this work, confronting the resulting cleaned cubes with the cross-correlation measurement already reported in CL23. To focus on the cleaning strategy’s influence on the results, here we use the same tools presented in CL23 for computing and modelling PH I,g(k), fitting its amplitude, and evaluating the goodness of the fit. The only exception to the previous statement is related to using a 2D window function before the Fourier transform that replaces the effective tapering due to the weight map of the OF (see Sect. 6).

In many figures in this section, we report the CL23 result as a reference. In particular, we show the fitted model of Eq. (14) with the amplitude derived by CL23 – not the actual PH I,g(k) data points – for clarity and to overcome the fact that in CL23 maps had been smoothed, effectively reducing their native resolutions that instead we keep here. However, the reader has to keep in mind that CL23 derived the final power spectrum with the transfer function, 𝒯, procedure, whereas we stress that none of the results of the present work have a 𝒯-correction applied. It might seem an unfair comparison, but it will make our point: we have improved the cleaning procedure so that the cosmological signal loss is more under control.

7.1. The cross-power spectrum

We start with the PCA-like methods’ cleaned cubes. We show the cross-P(k) in Fig. 12 in the top for a simple PCA analysis, in the bottom for its weighted version PCAw. Both SVD results, weighted and not, are in between those presented; that is to say: PCA and PCAw bracket the range of the PCA-like analyses. In each panel, we show the cross-P(k) for different cubes cleaned with different numbers Nfg of removed components, colour coded from dark (Nfg = 4) to light (Nfg = 9). The lines and empty symbols are the measurements, and the corresponding shaded areas are their 1σ uncertainties. Besides the encouraging agreement of some of these results with CL23 (red dashed line), the overall behaviour of these power spectra is promising (especially for PCAw) as our interpretation follows. We do not show any Nfg < 4 results as they are widely oscillating, namely, too much foreground contamination is still in the maps, and no measurement is possible. With the Nfg = 4 cleaned cube, the PH I,g(k) eventually reaches a reasonable ‘shape’; indeed, besides the amplitude, the consistency with CL23 indicates, first of all, a theoretically expected P(k) behaviour. As contaminant suppression becomes more aggressive (increasing Nfg) the cosmological signal is increasingly suppressed as well, and the PH I,g(k) gradually loses power. Eventually, for Nfg = 6 − 7, we are fully compatible with a null spectrum: the correlation is lost.

|

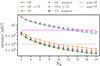

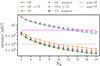

Fig. 12. Cross-power spectra between WiggleZ galaxies and MeerKAT H I intensity maps at redshift z ≈ 0.4 for the PCA and PCAw cleaned cubes in the top and bottom panel, respectively. Maps are cleaned by removing different numbers of PCA (and PCAw) modes, from dark, Nfg = 4, to light colour lines, Nfg = 9; 1σ error bars are shown with the corresponding shaded areas. The red dashed line is the fitted model from CL23 (same data, different cleaning strategy) that instead includes a 𝒯-correction for the signal loss; as a rule-of-thumb, the uncorrected CL23 best-fit would show an 80% lower amplitude at the largest scale probed (e.g. below the 1σ boundary of the Nfg = 4 and 5 PCAw measurements for k ≲ 0.12 h/Mpc in the bottom panel). We plot the product kP(k) to accentuate the small scales that would otherwise be flattened by the much higher low-k measurements. |

Next, we computed the cross-P(k) of the mPCA-cleaned cubes. The results are shown in Fig. 13 where, with the same colour codes of Fig. 12, we show different cleaning with dark to light colour lines and areas (mean values and 1σ errors) and the red dashed line is the CL23 fitted model. For the mPCA case, we can choose how many modes to remove on the large (NL) and – independently – on the small scale (NS); the spectra in the figure have been derived varying NL and keeping NS fixed to 4 (top panel) and 8 (bottom). We find a similar–and actually clearer–trend as what we described above for Fig. 12: the cleaned cube that gives the highest PH I,g(k) amplitude corresponds to a ‘sweet spot’ when varying the Nfg parameters. For brevity, we decided not to show results for varying NS; we find that the large scale NL is the primary driver of the overall amplitude of the cross-P(k).

|

Fig. 13. Cross-power spectra between WiggleZ galaxies and MeerKAT H I intensity maps cleaned by mPCA at redshift z ≈ 0.4. We keep the number of modes removed at small scale fixed at NS = 4 (top panel) and NS = 8 (bottom), and we remove different numbers of large-scale modes (from dark, NL = 4, to light colour lines, NL = 9), with 1σ error bars (corresponding shaded areas). The red dashed line is the fitted model from CL23 (same data, different cleaning strategy) that instead includes a 𝒯 correction for the signal loss; as a rule-of-thumb, the uncorrected CL23 best-fit would show an 80% lower amplitude at the largest scale probed (e.g. below the 1σ boundary of the NL = 4, measurements for k ≲ 0.14 h/Mpc). We plot the product kP(k) to accentuate the small scales that would otherwise be flattened by the much higher low-k measurements. |

7.2. Least-squares fitting

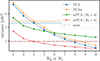

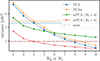

Next, we conducted a least-squares fit to the measured PH I,g(k) with the model described in Sect. 6, and measure their amplitude ΩH I bH Ir. We assumed a Gaussian covariance, effectively ignoring correlation among adjacent k-bins8. We fit for one parameter, the amplitude of the cross-P(k), and worked with 11 k-bins (data points), yielding 10 degrees of freedom. The results are in Fig. 14 for the PCA cubes and Fig. 15 for mPCA as a function of the number Nfg of modes removed.

|

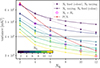

Fig. 14. Sensitivity of our results to the PCA-like contaminant subtractions as function of how many modes have been removed from the original cube. In the top panel, we show the reduced χ2 for each foreground cleaned cross-power spectra relative to its best-fitting model; in the bottom panel, the corresponding best-fitting ΩH I bH Ir values and their 1σ error bars. Filled symbols, circles and squares, are for an SVD a PCA cleaning respectively; their weighted counterparts corresponds to empty triangles and diamonds. In the bottom panel, the shaded red region highlights the CL23 best-fitting value and 1σ uncertainty that was obtained with a PCAw Nfg = 30 cleaning and a 𝒯-correction (the ‘uncorrected’ CL23 amplitude would lay between 0.2 − 0.3 × 10−3). We highlight in grey the Nfg = 4 results that correspond to our best and reference PCA result: the smallest Nfg to reach a plausible χ2. |

|

Fig. 15. Sensitivity of our results to the mPCA contaminant subtraction as function of how many modes have been removed from the original cube. The top panel shows the reduced χ2 for each foreground cleaned cross-power spectra relative to its best-fitting model. The bottom panel the corresponding best-fitting ΩH I bH Ir values and their 1σ error bars. All quantities are plotted as a function of the Nfg pair of the mPCA analysis, with NL on the x-axis and NS colour- and symbol-coded spanning from NS = 3 in violet to NS = 10 in yellow. For comparison, the shaded red region highlights the CL23 best-fitting value and 1σ uncertainty, that was obtained with a 𝒯-correction (the ‘uncorrected’ CL23 amplitude would lay between 0.2 − 0.3 × 10−3). We highlight in grey the NL, NS = 4, 8 results that correspond to our best and reference mPCA result. Results from mSVD and the weighted mSVDw and mPCAw show negligible differences. |